That’s it for now.

To follow the Maui Project’s development or say hi, you can join us on Telegram: https://t.me/mauiproject.

You can also find us on Twitter and Mastodon:

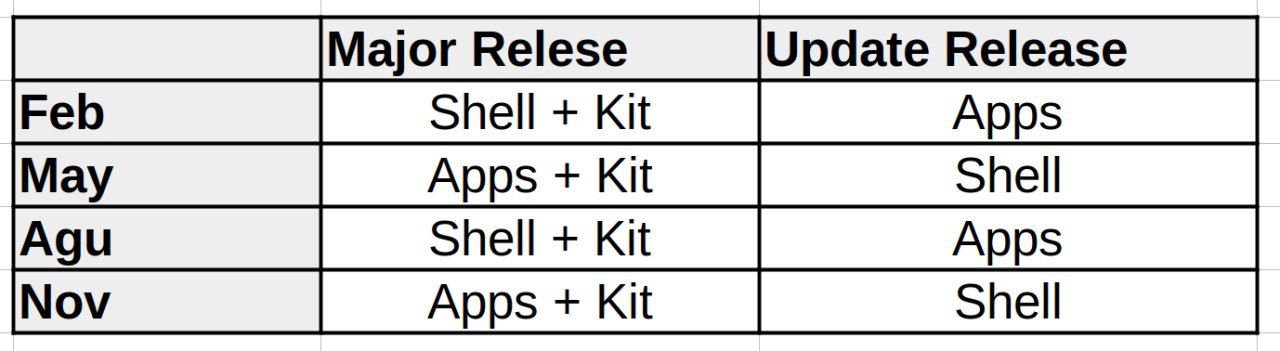

New release schedule

Signing Keys

```

stable/maui/agenda/0.5.0/ c75197601b15d7816153027ea3b4d090816365d7c6c9f6ed2c8011a31a3b68aa agenda-0.5.0.tar.xz

stable/maui/agenda/0.5.0/ cddbf790d71712e1389caac49a794f2b1c2c96bd4df1166d383e36717949abea agenda-0.5.0.tar.xz.sig

```

```

stable/maui/arca/0.5.0/ 131a0e55834ac9d764cca4451aa5d18ac6fafe9fd5cf60e4b23807d85b997889 arca-0.5.0.tar.xz

stable/maui/arca/0.5.0/ 9d7dcbf34598aecd192307b294445d2376de3c7a2a80ee31188d647c8389aceb arca-0.5.0.tar.xz.sig

```

```

stable/maui/bonsai/1.1.0/ 5ba6178b4267f8d263d7cc97ee5dd5085bdfde92a2176874521321835fb2a995 bonsai-1.1.0.tar.xz

stable/maui/bonsai/1.1.0/ 4297606dddcc18f6c00f78b4ecf8b263f2800e07ee1a7f0c14da2c96c2603178 bonsai-1.1.0.tar.xz.sig

```

```

stable/maui/booth/1.1.0/ ec594127fac21cee8e10921a47002297cb526f411d64bdb1375f8646bc886558 booth-1.1.0-signed.apk

stable/maui/booth/1.1.0/ 384cbfa64ee0abedb4c62d224959801eb7619a05661a9f04d4166f1a148c25cb booth-1.1.0.tar.xz

stable/maui/booth/1.1.0/ 1c825949e760747ce26a577c46befd61b78c15771b555f3f68ed31b3dfed870f booth-1.1.0.tar.xz.sig

```

```

stable/maui/buho/3.0.0/ c4034244e57273fa0d7bbb86616500973fde7276cefc9379e37a18a7e2095453 buho-3.0.0-signed.apk

stable/maui/buho/3.0.0/ 07145d1581b49aaee450df96dccac7f735d2d2868c42e55fff195194795a4690 buho-3.0.0.tar.xz

stable/maui/buho/3.0.0/ 08dbfe1df8e20d792812930af5179a230c4e0bd4963164a060c08440e607da23 buho-3.0.0.tar.xz.sig

```

```

stable/maui/clip/3.0.0/ ebb49d2ae2225d8c091d1441ebd797eaf21416d5fe13d4857f4a409c653d917c clip-3.0.0-signed.apk

stable/maui/clip/3.0.0/ 93e52de3473b15a8054f5a4f193a34ade3b59702ae9d716ff1758e9b0b24df28 clip-3.0.0.tar.xz

stable/maui/clip/3.0.0/ 16d93db2184428263e92ccda7d77950f587e2f92212d42c5c01c792d55fbee8a clip-3.0.0.tar.xz.sig

```

```

stable/maui/communicator/3.0.0/ 8ffce2e2e8b2bc0ea3a9c0a90fd9c8b4376ef745996d2b4b9061ec4b45f32eb6 communicator-3.0.0-signed.apk

stable/maui/communicator/3.0.0/ 206e4129029024a91eee59d2819ed7e2a67fafa16d0494ac4674742573c40f07 communicator-3.0.0.tar.xz

stable/maui/communicator/3.0.0/ 017f83366c8ac9d8518417a91c6411e77b44f26f6f69c17f7194a5cdf5cfc61f communicator-3.0.0.tar.xz.sig

```

```

stable/maui/fiery/1.1.0/ fd9215a225a75975bd71515c3dfe90606ceaf48a27e9d411132bffce9f897c9a fiery-1.1.0.tar.xz

stable/maui/fiery/1.1.0/ f7fc6c416a4c215b2dd65c080eb82908687dbbd4c9c655ff01c1f422c9bc93e6 fiery-1.1.0.tar.xz.sig

```

```

stable/maui/index/3.0.0/ 5beccbb39f0ef00ca7bb3dc0cc0e23b738e408a4b3d6d790d460343c8345506b index-3.0.0-signed.apk

stable/maui/index/3.0.0/ 1ca0ed4b4af6bfe6bbdd8165872756ff9774c682185d004642c36f7f2c6f2ef1 index-fm-3.0.0.tar.xz

stable/maui/index/3.0.0/ 324995147d2c18c8dd5e7e263e98fc088347e5581f7dab6d14bc1d50e02bac26 index-fm-3.0.0.tar.xz.sig

```

```

stable/maui/mauikit/3.0.0/ de7381e957d61f6e81bca1349f12f4a992ded3010083c4aa4b35a9f6387325d9 mauikit-3.0.0.tar.xz

stable/maui/mauikit/3.0.0/ d864414f58a060141238640a9d813a2f4ae584b8ab7f474cac0df8add19b0d5f mauikit-3.0.0.tar.xz.sig

```

```

stable/maui/mauikit-accounts/3.0.0/ fb39a0ae0ac89e991ef952299a13a0bdd1fef6f8abdce516b7da61b869fec739 mauikit-accounts-3.0.0.tar.xz

stable/maui/mauikit-accounts/3.0.0/ c80d030b7d41012d9431d3eb736f8407e53789e4aa3eb084e4f3d5a6852f6472 mauikit-accounts-3.0.0.tar.xz.sig

```

```

stable/maui/mauikit-calendar/1.1.0/ fdd57eee74a67859756182acd306a89f350f269ce5c2fe03d75128c37bb36294 mauikit-calendar-1.1.0.tar.xz

stable/maui/mauikit-calendar/1.1.0/ 160d68cd9bbad197e6a07a16bfc4f568d52567772e17cb2374cc2a605b5cfb8b mauikit-calendar-1.1.0.tar.xz.sig

```

```

stable/maui/mauikit-documents/1.1.0/ 3af374204aa86225c3289cbf37a76f281707d4305fae071a4c3ee79a8193251b mauikit-documents-1.1.0.tar.xz

stable/maui/mauikit-documents/1.1.0/ 1a17541156f502591127551b0d498fb1d17305d3b43750c508ce2cb2e0aad921 mauikit-documents-1.1.0.tar.xz.sig

```

```

stable/maui/mauikit-filebrowsing/3.0.0/ d3df8154a156d14f83367d609eafd5cf0f57cb06ef57e8348bd7c046388f0d0f mauikit-filebrowsing-3.0.0.tar.xz

stable/maui/mauikit-filebrowsing/3.0.0/ 7d43f85f78ff461c8c28f19cfc912a8d16259039d43173a3ce806ec24d3fc420 mauikit-filebrowsing-3.0.0.tar.xz.sig

```

```

stable/maui/mauikit-imagetools/3.0.0/ 2d3b7ad6a611c03a29db0d20515294bb5cc0dbc6104ac1181f3e7674a6694852 mauikit-imagetools-3.0.0.tar.xz

stable/maui/mauikit-imagetools/3.0.0/ 875eb5ba66227495ea1d40226bcda4b3d3d27e63ebc817216519bac8e7a07879 mauikit-imagetools-3.0.0.tar.xz.sig

```

```

stable/maui/mauikit-terminal/1.1.0/ ca18baf4ca158d856179659f86cb0497ead1e8f7af55b307b64c789f4635712a mauikit-terminal-1.1.0.tar.xz

stable/maui/mauikit-terminal/1.1.0/ 66fb8e0d8cd6385f51f86c786418e167f18eaa0989ee0f78e6241ff134c1a436 mauikit-terminal-1.1.0.tar.xz.sig

```

```

stable/maui/mauikit-texteditor/3.0.0/ ee6902ce23fa7f25ba1503a5330f8824e9399ed59fee1e6adacf8574b37e8bba mauikit-texteditor-3.0.0.tar.xz

stable/maui/mauikit-texteditor/3.0.0/ 67fa61e504ae2e7d5dd1f19fa643d8537516e154013a003f2efd6ef6d85146ef mauikit-texteditor-3.0.0.tar.xz.sig

```

```

stable/maui/mauiman/1.1.0/ 6523705d9d48dec4bd4cf005d2b18371e2a4a0d774415205dff11378eee6468f mauiman-1.1.0.tar.xz

stable/maui/mauiman/1.1.0/ c84083254f5fcceba2529aa111d47f39bcb87e958d6de79057c24fb3c8ab801c mauiman-1.1.0.tar.xz.sig

```

```

stable/maui/nota/3.0.0/ 3f6533194fcf732677251b19f898d791e1c4072c108898960c0dfd289dc26d30 nota-3.0.0-signed.apk

stable/maui/nota/3.0.0/ 31b8ee1b703d9329e30c99a9ad9886074468ef16b9926a2f29d674363b46614a nota-3.0.0.tar.xz

stable/maui/nota/3.0.0/ 9cff3b22653b4457c209e9bfe603d10527b5f5177034bc127721eb87b420e989 nota-3.0.0.tar.xz.sig

```

```

stable/maui/pix/3.0.0/ 41efab544465f584c0cabcd77ecea6e058f0007f7d79e279070f63fff7faca95 pix-3.0.0-signed.apk

stable/maui/pix/3.0.0/ d186edc1d993922398510b4595d5efeb6d1b532b58d5e04a8c43871bfb6002c1 pix-3.0.0.tar.xz

stable/maui/pix/3.0.0/ 545d26cd625a2ec19d17cbd1031db9d2e530cd47f1dd2d13df4a752a86aaf43a pix-3.0.0.tar.xz.sig

```

```

stable/maui/shelf/3.0.0/ c97f2379be4f83c41dbb789f534bea7f77b552cb2a42008bd98b273dbdc8a2ad shelf-3.0.0-signed.apk

stable/maui/shelf/3.0.0/ cd3770580732801f2589d5d6de6cf9d34bba6705403bad505bde778f8d3084a4 shelf-3.0.0.tar.xz

stable/maui/shelf/3.0.0/ a792580de49a9f081b8501b9d2016966a37a943328273d4addbd8854f11d83aa shelf-3.0.0.tar.xz.sig

```

```

stable/maui/station/3.0.0/ 2641b59ff1f3c4e19e7edf4612cff16d66f3ba5b2562930345ae33882a6e7112 station-3.0.0.tar.xz

stable/maui/station/3.0.0/ 0ee62ea885a167d54d220537c505fe4244822d35f054b21a885e40e2052d3ff5 station-3.0.0.tar.xz.sig

```

```

stable/maui/strike/1.1.0/ cabd7046fd982bca2297d4f2328198bd656e2ea67a877072784e89b978e15fad strike-1.1.0.tar.xz

stable/maui/strike/1.1.0/ b77bcd61b0a6324826f57131a084ded901ebb560a3d5c07dd98b7f424df2fa7d strike-1.1.0.tar.xz.sig

```

```

stable/maui/vvave/3.0.0/ fc292587bd576be65dfe7f576c895f34659da569585ba1d0d5896ce8e7828c69 vvave-3.0.0-signed.apk

stable/maui/vvave/3.0.0/ c8c53df23cf9761cfa2cfb3f681db49b6e136a2f13d5464c7f534c3c585d5bf4 vvave-3.0.0.tar.xz

stable/maui/vvave/3.0.0/ d6194db2b947f716ec5728186801de2461b313736828a310a1034b20b32bdf7c vvave-3.0.0.tar.xz.sig

```
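Each entry above pairs a release file with a 64-character hexadecimal digest. That length matches a SHA-256 hash, so, assuming SHA-256 is the algorithm used, a downloaded tarball or APK can be checked against its line with a short Python sketch like the one below. The helper names `sha256_of`, `parse_checksum_line`, and `verify` are hypothetical, and the parser assumes the three-field `path/ digest filename` layout used in this list.

```python
import hashlib
from pathlib import Path
from typing import Tuple


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        # Read fixed-size chunks until EOF so large tarballs are not loaded at once.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def parse_checksum_line(line: str) -> Tuple[str, str]:
    """Split 'stable/maui/<app>/<version>/ <digest> <file>' into (file, digest).

    Assumes the whitespace-separated three-field layout shown in the post.
    """
    _directory, digest, filename = line.split()
    return filename, digest.lower()


def verify(path: str, line: str) -> bool:
    """Return True if the file's SHA-256 digest matches the checksum line."""
    filename, expected = parse_checksum_line(line)
    if Path(path).name != filename:
        raise ValueError(f"checksum line is for {filename}, not {Path(path).name}")
    return sha256_of(path) == expected
```

For example, calling `verify("nota-3.0.0.tar.xz", line)` with `line` set to the `nota-3.0.0.tar.xz` entry above should return `True` for an untampered download. This only checks integrity against the published digest; the `.sig` files are presumably detached signatures meant to be checked separately against the project's signing key.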

@sriru:matrix.org GSoC

nicofee

noahdvs