Saturday, 3 January 2026

This post will show the NixOS way of adding a custom package and explain the benefits of this approach in the context of system immutability.

Plasma Pass

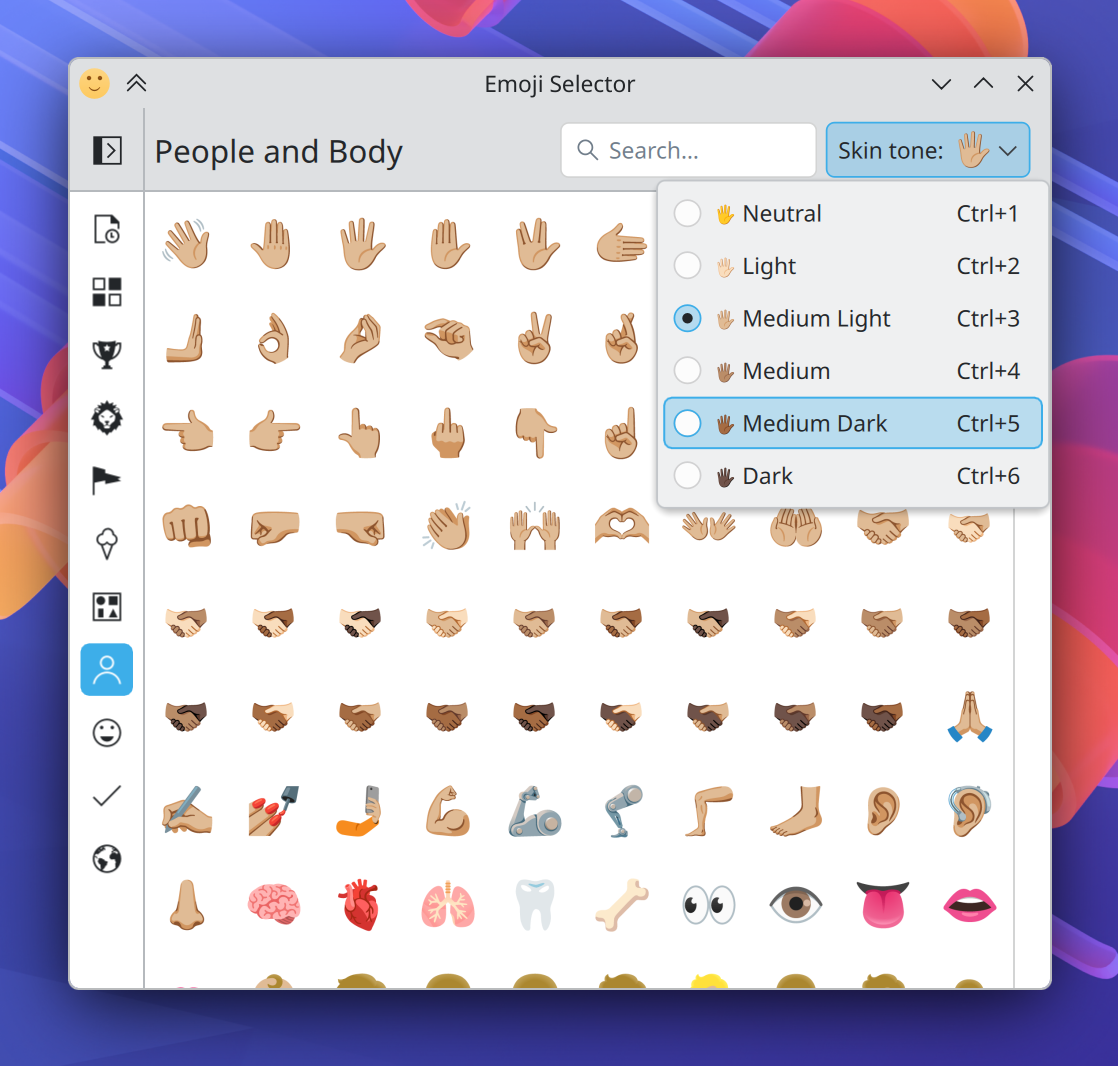

KDE Ni! OS recently got a new package installed by default – Daniel Vrátil’s Plasma Pass applet.

Plasma Pass is a Plasma applet to access passwords from pass, the standard UNIX password manager. You can find more information about the applet in Dan’s blog post.

As NixOS doesn’t currently offer Plasma Pass in its repositories, the package is installed in Ni! OS from source, as in some other “BTW, I use …” distributions.

In NixOS, this is easily done via overlays. We can create an overlay that defines the plasma-pass package so that it can be installed as if it were a real NixOS package.

Package definition

This is the overlay definition used in Ni! (ni/packages/plasma-pass.nix):

```nix
self: prev: {
  kdePackages = prev.kdePackages.overrideScope (kdeSelf: kdeSuper: {
    plasma-pass = kdeSelf.mkKdeDerivation rec {
      pname = "plasma-pass";
      version = "1.3.0-git-59be3d64";

      src = prev.fetchFromGitLab {
        domain = "invent.kde.org";
        owner = "plasma";
        repo = "plasma-pass";
        rev = "59be3d6440b6afbacf466455430707deed2b2358";
        hash = "sha256-DocHlnF9VJyM1xqZx/hoQVMA/wLY+4RzAbVOGb293ME=";
      };

      buildInputs = [
        kdeSelf.plasma-workspace
        kdeSelf.qgpgme
        self.oath-toolkit
      ];

      meta = with prev.lib; {
        description = "Plasma applet for the Pass password manager";
        license = licenses.lgpl21Plus;
        platforms = platforms.linux;
      };
    };
  });
}
```

Most of this file is self-explanatory (except for the strange looking syntax of the Nix language :) ).

Since Plasma Pass is a KDE project, we want it to be visible as a part of the kdePackages collection. And as it uses the common build setup that all KDE projects use (or should use), the plasma-pass package is defined with mkKdeDerivation. This function provides some basic dependencies commonly used by KDE projects, along with the adaptations they need to work properly in NixOS. For packages that don’t follow the KDE build conventions, you’d base your package on the standard mkDerivation instead.
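For comparison, here is a rough sketch of an overlay for a hypothetical CMake-based, non-KDE project built with the standard stdenv.mkDerivation. The package name my-tool, its GitHub location and its version are placeholders, not a real project. Such a package would normally be added at the top level of the package set rather than inside kdePackages, and the build tools that mkKdeDerivation would otherwise bring in, such as CMake, have to be listed explicitly.

```nix
# Hypothetical overlay for a non-KDE, CMake-based project.
# "my-tool", its GitHub location and its version are placeholders.
final: prev: {
  # Top-level attribute: this package is not part of kdePackages.
  my-tool = prev.stdenv.mkDerivation (finalAttrs: {
    pname = "my-tool";
    version = "0.1.0";

    src = prev.fetchFromGitHub {
      owner = "example";
      repo = "my-tool";
      # Fetch the tag that matches the version declared above.
      rev = "v${finalAttrs.version}";
      # Placeholder: the first build fails with a hash mismatch
      # and reports the real hash, which then replaces this value.
      hash = prev.lib.fakeHash;
    };

    # Build-time tools; the cmake setup hook makes stdenv run the
    # CMake configure phase instead of ./configure.
    nativeBuildInputs = [ prev.cmake ];

    meta = {
      description = "Placeholder description of a non-KDE tool";
      license = prev.lib.licenses.mit;
      platforms = prev.lib.platforms.linux;
    };
  });
}
```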

The project sources are located on KDE’s GitLab instance at invent.kde.org, so the package definition uses fetchFromGitLab to retrieve them. It is also possible to fetch repositories from GitHub, download source tarballs, and so on. All fetchers are described in the Fetchers chapter of the Nixpkgs manual.
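As an illustration of other fetchers, the sketch below shows two alternative ways of obtaining sources, written as a small function in the style of a callPackage file. The owner, repository, URL and version are hypothetical placeholders, not real Plasma Pass locations, and each lib.fakeHash stands in for the real hash.

```nix
# Hypothetical examples of other fetchers; the owner, repository,
# URL and version are placeholders, not real Plasma Pass locations.
{ lib, fetchFromGitHub, fetchurl }:
{
  # A Git repository hosted on GitHub, pinned to a tag.
  fromGitHub = fetchFromGitHub {
    owner = "example";
    repo = "some-project";
    rev = "v1.0.0";
    hash = lib.fakeHash; # replace with the real hash
  };

  # A release tarball downloaded over HTTPS.
  fromTarball = fetchurl {
    url = "https://example.org/releases/some-project-1.0.0.tar.xz";
    hash = lib.fakeHash; # replace with the real hash
  };
}
```

Either value could be assigned to src in a package definition; a tarball fetched with fetchurl is unpacked by the standard unpack phase. Note that the fetchurl hash covers the downloaded file itself, so it is not the same value that nix-prefetch-git reports for a repository checkout.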

The rev field of fetchFromGitLab is the Git revision that you want to install, and you can get the hash by using the nix-prefetch-git command:

```shell
nix shell nixpkgs#nix-prefetch-git
nix-prefetch-git https://invent.kde.org/plasma/plasma-pass \
    --rev 59be3d6440b6afbacf466455430707deed2b2358
```

The buildInputs part defines additional dependencies needed by Plasma Pass, and meta defines some meta information about the package, such as the description and the license.

Using the definition

After defining the package, we have to add the overlay to nixpkgs.overlays in one of our NixOS configuration files. In the case of Ni! OS, this is done in ni/modules/base.nix, which defines the UI software that Ni! OS installs by default.

```nix
nixpkgs.overlays = [
  (import ../packages/plasma-pass.nix)
];
```

With this overlay, plasma-pass can be used as if it were a normal NixOS package:

```nix
environment.systemPackages = with pkgs; [
  ...
  kdePackages.plasma-pass
  ...
];
```

When plasma-pass gets added to the nixpkgs repository, the only change needed in Ni! OS to switch to the official version will be removing the import...plasma-pass.nix from the overlays. This is the reason why we explicitly placed it in the kdePackages collection; otherwise, we could have just put it at the top level.
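To check that the overlay builds before touching the system configuration, the same overlay can also be applied to a plain nixpkgs import. The file name test-plasma-pass.nix is hypothetical and not part of Ni! OS; the sketch assumes a nixpkgs channel is available as `<nixpkgs>` on NIX_PATH and that the file sits next to the ni directory.

```nix
# test-plasma-pass.nix: hypothetical helper, not part of Ni! OS.
# Build it with: nix-build test-plasma-pass.nix
let
  pkgs = import <nixpkgs> {
    # Apply the same overlay that base.nix adds to nixpkgs.overlays.
    overlays = [ (import ./ni/packages/plasma-pass.nix) ];
  };
in
# Evaluate to the package defined inside kdePackages by the overlay.
pkgs.kdePackages.plasma-pass
```

nix-build leaves a result symlink in the current directory that points to the built package in the Nix store.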

Custom packages and immutability

The main point of this post is not really to announce that a single new package has been added to the Ni! OS setup, even if it is a cool one like Plasma Pass.

The point is to show how a custom package that is not available in the vast collection of nixpkgs can be added to a NixOS-based system.

The custom package becomes a proper, regular Nix package and gets all the benefits of Nix’s particular approach to immutability. If Plasma Pass breaks after an update (either because a new Plasma version breaks it, or because a new version of Plasma Pass no longer works as expected), you can always boot into the system version from before the bad update.

On distributions with an immutable core and custom applications installed as Flatpaks, downgrading is possible, but it is a bit more involved and relies on third parties keeping the old package versions available for download.

With NixOS, all the previous versions remain on your system until you decide to remove them.
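How many previous versions stay around is up to you. As a sketch, assuming systemd-boot as the boot loader, a configuration could cap the number of boot menu entries and periodically delete old generations; the concrete values below are arbitrary examples, not Ni! OS defaults.

```nix
{
  # Show at most 10 of the latest generations in the systemd-boot menu.
  # This only limits the menu; it does not delete any generations.
  boot.loader.systemd-boot.configurationLimit = 10;

  # Periodically run the Nix garbage collector, deleting generations
  # older than 30 days and the store paths only they referenced.
  nix.gc = {
    automatic = true;
    dates = "weekly";
    options = "--delete-older-than 30d";
  };
}
```

Besides picking an older entry in the boot menu, `nixos-rebuild switch --rollback` switches back to the previous generation without rebooting.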

@ivan:kde.org
