Thursday, 18 December 2025

The Kdenlive team is happy to announce the release of version 25.12.0, just in time for the holiday season. For this release, we concentrated on improving the user experience. Many of these changes were discussed during our very productive Berlin sprint last September, where the team met for three days of brainstorming.

Interface and Usability

Docking System

We introduced a new, more flexible docking system that lets you group the widgets you want together and easily show or hide them on demand. Each layout is now saved in its own file, which makes it possible to share layouts. The layout is also saved inside the project file, so reopening a project restores the layout that was in use when it was saved, and you can immediately continue editing where you left off!

The downside is that existing layouts are not compatible, so you will need to recreate your custom layouts.

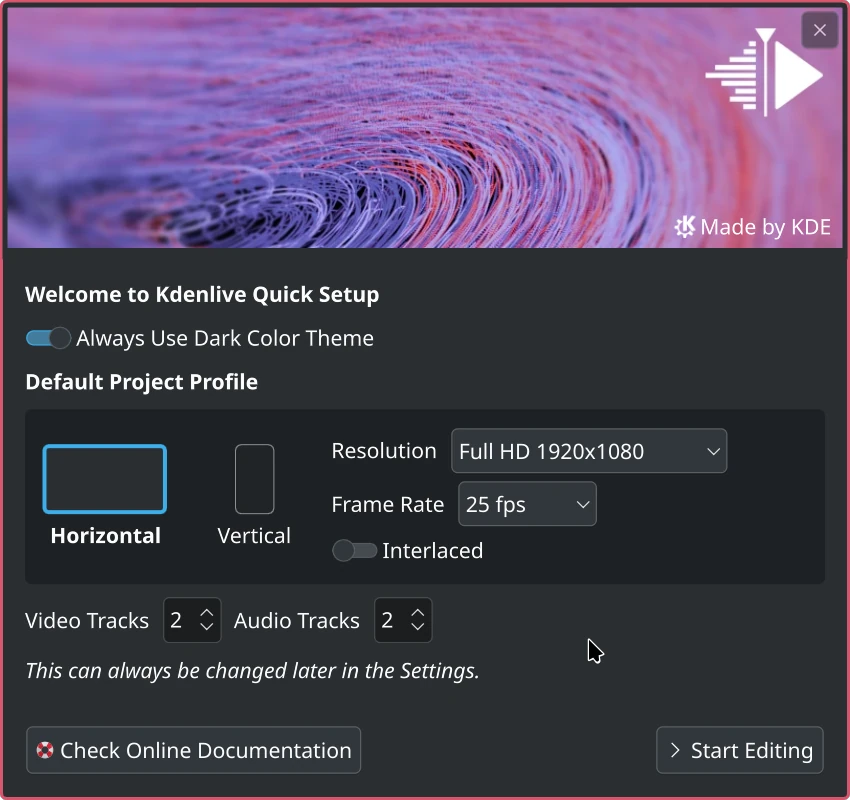

Welcome Screen

We introduced a Welcome Screen to improve the experience for new users and to add some handy shortcuts for everyone. It will evolve in future releases based on your feedback.

Along with the Welcome Screen, we introduced a vertical layout and optional safe areas to improve editing of 9:16 videos.

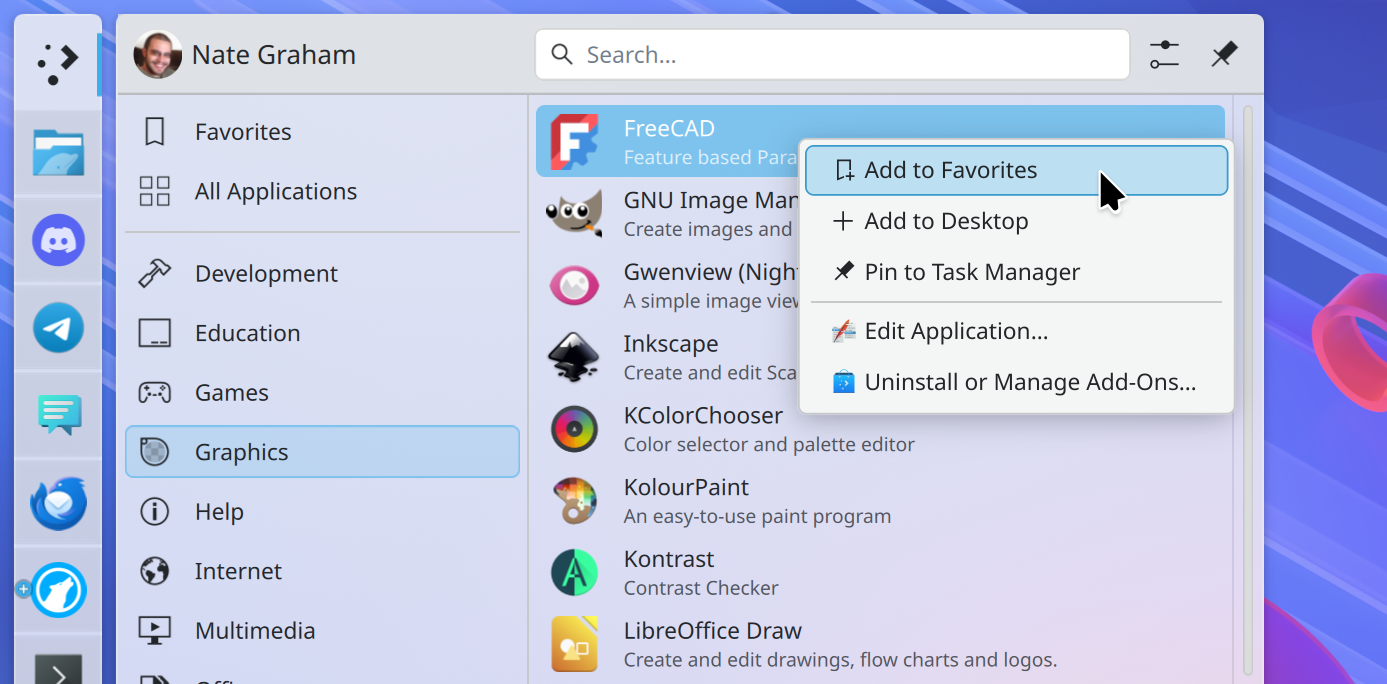

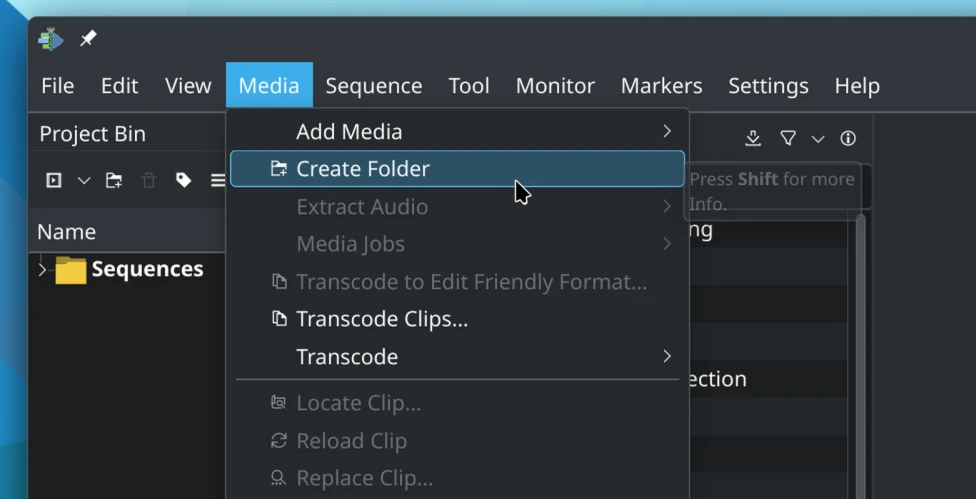

Menu Restructuring

Following a long-running discussion in the team, the menus were reorganized to make them more intuitive. Our long-time users may be confused initially (hopefully not for long, though), but we tried to follow some of the conventions of the professional editing world. For example, we regrouped all file-related actions, like Render and the Project Settings, in the File menu. There might be a few adjustments in upcoming releases, but the most important changes are in.

Thanks to feedback from users and translators, we were able to fix several parts of the user interface that were not picked up properly for translation, making Kdenlive easier to use in your native language.

Monitor

We revamped the monitor's audio view, adding a minimap on top that lets you intuitively zoom into parts of the audio.

Markers

For consistency and to avoid confusion, we renamed timeline guides to markers. The two terms were used interchangeably, which was confusing, and guides were in fact markers for a sequence clip.

Markers can now have a duration that is shown in the timeline, and can be dragged in the timeline from the Markers list.

Other Highlights

Major Bug Fixes

- We fixed more than 15 reported crashes

- Windows: Fix render failure when user name contains special characters

- Fix project corruption when copy-pasting a sequence or project file between projects

Packaging

- We fixed VAAPI support in the AppImage, allowing for faster decoding and rendering

- Several updates made it into our binaries, like Qt 6.10.1 and FFmpeg 8.0

Last Minute Fixes

Several fixes that will be in the next release have already been included in the 25.12.0 binaries available on kdenlive.org. If you installed Kdenlive from your Linux distro, you will get those fixes with version 25.12.1 (release scheduled for January 2026).


- Fix xmlgui-related crash when starting an older Kdenlive version (< 25.08.3).

- Keep duration info when moving ranged markers.

- Don't load Kdenlive in the background if the Welcome Screen is displayed, to avoid a busy cursor / greyed-out screen on Wayland.

- Fix window not appearing after a crash when there is no Welcome Screen.

- Ensure we cannot call a Welcome Screen action before it is connected.

- Fix possible crash in the Welcome Screen when trying to open a profile or file before the main window is ready.

- Fix changing keyframe type for multiple keyframes not working. Fixes issue #2104.

- Add AMF encoding profile for Windows.

- Don't allow saving a custom effect with the name of an existing effect.

- Fix horizontal editing layout not loaded.

- Hsvhold similarity must be > 0.

- Fix copy-paste resetting the keyframe type. Fixes bug #513053.

- Fix editing transform on monitor discarding opacity. Fixes issue #2108.

- Don't check for readOnly in QStorageInfo on Mac.

- Fix app not opening after a crash when opening a project from the command line.

- Don't incorrectly show warning about hidden monitor.

- Fix project layout not correctly restored if opened from the Welcome Screen.

The full changelog for 25.12.0 is available below.

Give back to Kdenlive

Releases are possible thanks to donations by the community. Donate now!

Need help?

As usual, you will find very useful tips on our documentation website. You can also get help and exchange ideas in our Kdenlive users Matrix chat room.

Get involved

Kdenlive relies on its community, and your help is always welcome. You can contribute by:

- Helping to identify and triage bugs

- Helping to translate Kdenlive into your language

- Promoting Kdenlive in your local community

For the full changelog, continue reading on kdenlive.org.
