Saturday, 13 December 2025

Welcome to a new issue of This Week in Plasma!

This week the team made significant progress on KWin’s Wayland screen support. Specifically, better mirroring and custom modes — both items on the “Known Significant Issues” page — have been implemented for Plasma 6.6! The remaining items on that page are areas of active focus, too, as we race towards the Wayland finish line.

…But wait, there’s more!

Notable New Features

Plasma 6.6.0

The Window List widget lets you filter out windows not on the current screen, virtual desktop, or activity — just like the Task Manager widgets do. (Shubham Arora, plasma-desktop MR #3341)

Discover now lets you install and remove fonts on distros with package managers that use the PackageKit library. (Joey Riches, discover MR #1113)

Notable UI Improvements

Plasma 6.6.0

The “Minimize All” KWin script (which is included by default but not enabled) now also lets you minimize all windows besides the active one using the Meta+Shift+O keyboard shortcut. (Luis Bocanegra, bug #197952)

KWin now sets reasonable default scale factors for newly-connected TVs. (Xaver Hugl, kwin MR #8537)

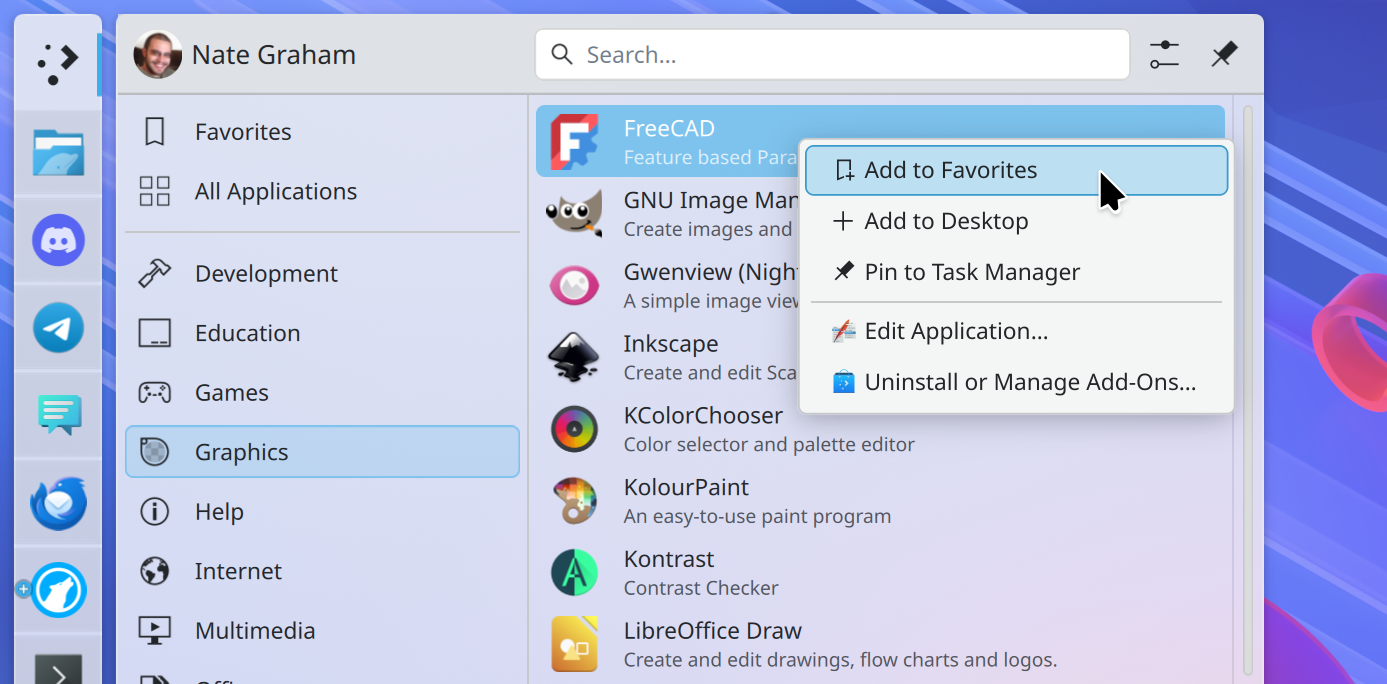

Several related menu items in the context menus of the Kicker/Kickoff/Application Dashboard widgets have been grouped together. (Kisaragi Hiu, plasma-desktop MR #3381)

The kscreen-doctor tool now lets you know that it’s possible to mirror your screens. (Nicolas Fella, libkscreen MR #267)

Notable Bug Fixes

Plasma 6.5.4

Fixed a recent regression with desktop icons not staying on the right screen with some multi-screen arrangements. (Błażej Szczygieł, plasma-desktop MR #3330)

The Meta+P shortcut to open the screen chooser OSD now works on immutable-style distros. (Nate Graham, kscreen MR #440)

Plasma 6.5.5

Fixed a recent regression that caused screen freezes on some hardware right before power management kicked in. We’ve asked distros to backport the fix ASAP so everyone gets it as quickly as possible. (Xaver Hugl, bug #513151)

Fixed a case where KWin could crash in the X11 session when using an older Valve Index headset. (David Edmundson, bug #507677)

Fixed an issue that could make stray modifier key events get sent to XWayland-using apps, especially when switching to them using keyboard shortcuts involving modifier keys. (Vlad Zahorodnii, link)

Fixed a case where KWin was handling drag-and-dropped text incorrectly, which could make drops fail in some apps. (Vlad Zahorodnii, bug #512235)

Fixed two screen locker issues: one could make unlocking via KDE Connect slower, and the other could make the screen locker erroneously flash some text about a fingerprint or smart card reader when neither is relevant. (Fushan Wen, kscreenlocker MR #296)

Fixed the back button in Folder View widgets’ list view display style. (Christoph Wolk, plasma-desktop MR #3387)

Fixed an issue in System Monitor that made it possible to end critical processes using the keyboard. (Oliver Schramm, bug #510464)

Other bug information of note:

- 5 very high priority Plasma bugs (same as last week). Current list of bugs

- 55 15-minute Plasma bugs (up from 37 last week). Current list of bugs

You might notice a large increase in the number of HI priority bugs this week. This is because we’re beginning a quality initiative and using the HI priority level to track bugs we want to fix quickly. We want to make sure Plasma is getting extra polish and stability work these days!

Other Notable Things in Performance & Technical

Plasma 6.5.4

Plugged a GPU memory leak in KDE’s portal implementation. (David Edmundson, bug #494138)

Plasma 6.5.5

Fixed a case where .desktop files with spec-violating names could cause massive amounts of log spam. (David Redondo, bug #512562)

Plasma 6.6.0

Massively improved support for screen mirroring in the Wayland session. Now it works really well! (Xaver Hugl, bug #481222 and kscreen MR #439)

You can now use the kscreen-doctor tool to add custom screen modes, useful for supporting exotic or misbehaving screens in the Wayland session. (Xaver Hugl, bug #456697)

Made the KWin rule to force a titlebar and frame also work for windows of native-Wayland apps. (Xaver Hugl, bug #452240)

You can now move windows with Meta+drag when using a drawing tablet stylus. (Vlad Zahorodnii, bug #509949)

How You Can Help

Donate to KDE’s 2025 fundraiser! It really makes a big difference. In total, KDE has raised over €280,000 during this fundraiser! The average donation is about €25. KDE truly is funded by you!

This money will help keep KDE strong and independent for years to come, and I’m just in awe of the generosity of the KDE community and userbase. Thank you all for helping KDE to grow and prosper!

If money is tight, you can help KDE by directly getting involved. Donating time is actually more impactful than donating money. Each contributor makes a huge difference in KDE — you are not a number or a cog in a machine! You don’t have to be a programmer, either; many other opportunities exist.

To get a new Plasma feature or a bugfix mentioned here, feel free to push a commit to the relevant merge request on invent.kde.org.

ngraham

@ivan:kde.org