Wednesday, 18 February 2026

Hey all! I'm CJ and I'm checking in with a midterm update on the Season of KDE task of automating data collection for the KDE promotional team.

The first of this Season of KDE task's two terms has mostly been a learning experience: working out what does and doesn't work when scraping data from the web, and laying down our toolset and approach to data collection.

Three subtasks have resulted:

- Create a script that collects follower and post counts from several websites housing KDE's social media accounts

- Create a script that processes information from the Reddit Insights page for the KDE subreddit

- Create a script automating the evaluation of articles discussing KDE tools

The first two of those are mostly completed while the last one is in its research and planning phase. Both finished subtasks came with their own sets of challenges, techniques and tools that I'll detail separately.

Follower and post counts scraper

This is the script I discussed in my first blog post, which scrapes follower and post counts from X (formerly Twitter), Mastodon, Bluesky, and Threads. The major updates since then are a more user- and server-friendly way of running it, and fixes for a few issues that came up outside the script's scraping logic. On the usage side, I've added command-line arguments, and the script now expects a JSON file containing the links to scrape. This makes swapping out social media links easy and leaves room for more configuration options if the script needs further development.
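A minimal sketch of that usage pattern, assuming a flat JSON object that maps platform names to profile URLs. The real file's schema isn't shown in the post, so `parse_args`, `load_links`, the `--only` flag, and the example URLs are all hypothetical:

```python
import argparse
import json
from pathlib import Path

# Platforms the scraper handles (from the post); the config format is assumed.
SUPPORTED_PLATFORMS = {"x", "mastodon", "bluesky", "threads"}


def parse_args(argv=None):
    """Parse command-line options (hypothetical flag set)."""
    parser = argparse.ArgumentParser(description="Collect follower and post counts.")
    parser.add_argument("config", type=Path,
                        help="JSON file mapping platform names to profile URLs")
    parser.add_argument("-o", "--output", type=Path, default=Path("counts.json"),
                        help="where to write the collected counts")
    parser.add_argument("--only", nargs="*", default=None,
                        help="restrict this run to the named platforms")
    return parser.parse_args(argv)


def load_links(config_path, only=None):
    """Read {"platform": "url"} pairs and reject platforms the scraper can't handle."""
    links = json.loads(Path(config_path).read_text(encoding="utf-8"))
    unknown = set(links) - SUPPORTED_PLATFORMS
    if unknown:
        raise ValueError(f"unsupported platforms in config: {sorted(unknown)}")
    if only is not None:
        links = {name: url for name, url in links.items() if name in only}
    return links
```

A run might then look like `python collect_counts.py links.json --only mastodon bluesky` (script name hypothetical). Keeping the links in a file rather than in code is what turns swapping accounts into a config change.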

At the time of writing, the script's logic has held up well, but the data format we were outputting to, Open Document Format (ODF), wasn't friendly for our specific usage, something I touched on in that first blog post. In the end, we decided the tools that interface with ODF were too unwieldy to work with from an automation and programmatic standpoint, so we're looking into alternatives. One promising option is KDE's LabPlot, which is FOSS and has a good-looking (but experimental) Python API. For now, I've set the script up to output to a user-friendly JSON file until we settle on which tool we'll use for data analysis.
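The interim JSON output could be as simple as the following sketch. The record layout (`collected_at`, `platforms`) and the `write_counts` name are assumptions, chosen so that each run produces one timestamped record that whichever analysis tool is picked later can import:

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def write_counts(counts, output_path, collected_at=None):
    """Write per-platform counts and a UTC collection timestamp as readable JSON.

    `counts` is assumed to look like {"mastodon": {"followers": 123, "posts": 45}}.
    """
    collected_at = collected_at or datetime.now(timezone.utc)
    record = {"collected_at": collected_at.isoformat(), "platforms": counts}
    path = Path(output_path)
    # Write to a sibling temp file, then rename, so an interrupted run
    # never leaves a half-written JSON file behind.
    tmp_path = path.with_name(path.name + ".tmp")
    tmp_path.write_text(json.dumps(record, indent=2, sort_keys=True) + "\n",
                        encoding="utf-8")
    tmp_path.replace(path)
    return record
```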

Another issue came from the input side of the script, in the X/Twitter scraping portion. Many public Nitter instances use bot prevention that I was unaware of, and it triggered when we tried running the script on a headless server. Since that made simpler scraping methods difficult, and out of respect for those instances' wish not to be botted, I decided to spin up our own local Nitter instance on the server that runs the script. Scraping X/Twitter is now much easier and far less likely to fail.
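With a local instance, the parsing side can stay simple. A minimal standard-library sketch, assuming the instance listens on `127.0.0.1:8080` and renders each profile stat as `<li class="followers">` containing a `<span class="profile-stat-num">12,345</span>`. Check both assumptions against your own Nitter deployment; `parse_profile_stats` and `fetch_stats` are hypothetical names:

```python
from html.parser import HTMLParser
from urllib.request import urlopen

NITTER_BASE = "http://127.0.0.1:8080"  # assumed address of the local instance


class NitterStatsParser(HTMLParser):
    """Collect profile stat numbers (assumed markup: <li class=STAT> + span.profile-stat-num)."""

    STATS = {"posts", "following", "followers", "likes"}

    def __init__(self):
        super().__init__()
        self.current_stat = None   # stat name of the <li> we are inside, if any
        self.in_number = False     # True while inside a profile-stat-num span
        self.stats = {}

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li":
            self.current_stat = next((c for c in classes if c in self.STATS), None)
        elif tag == "span" and "profile-stat-num" in classes and self.current_stat:
            self.in_number = True

    def handle_endtag(self, tag):
        if tag == "span":
            self.in_number = False
        elif tag == "li":
            self.current_stat = None

    def handle_data(self, data):
        text = data.strip()
        if self.in_number and text:
            # Counts are rendered with thousands separators, e.g. "12,345".
            self.stats[self.current_stat] = int(text.replace(",", ""))


def parse_profile_stats(html):
    parser = NitterStatsParser()
    parser.feed(html)
    parser.close()
    return parser.stats


def fetch_stats(handle, base=NITTER_BASE):
    """Fetch a profile page from the local instance and parse its stats."""
    with urlopen(f"{base}/{handle}", timeout=30) as resp:
        return parse_profile_stats(resp.read().decode("utf-8"))
```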

KDE subreddit Insights scraper

Since that first week we've added another task: creating a script that adds up the weekly influx of new visitors, unique page views, and members of the KDE subreddit using the subreddit's Insights page. This script mostly tested our ability to automate Reddit's login process, as the usual methods are blocked by browser verification tools.

Reddit's login page uses some form of invisible reCAPTCHA. How it's implemented depends on which version they use, but in the end a score grading how likely the user is to be a bot or a human is returned to the website on login. This means simple HTTP requests are probably not enough, and the level of interaction a browser automation framework supplies is needed to handle the login process.

To that end, we chose to use Selenium, the long-standing web browser automation framework. Selenium, like many browser automation frameworks, works by launching a full-featured web browser to run its automated tasks. This causes problems when running the scripts on a headless server, but it greatly simplifies getting past bot prevention and loading any page elements that depend on JavaScript.

With Selenium automating our login process, the only challenge left was processing the retrieved HTML. Reddit Insights presents its information as bar charts that visualize a subreddit's daily page views, unique visitors, and subscribers. A little analysis of the page source revealed that the daily values populating the bar charts are stored alongside millisecond UNIX timestamps for those days. Using BeautifulSoup, it was easy to grab that daily data by its timestamps and sum up the totals our script needs.
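The summing step can be sketched independently of the page markup. This assumes the daily values have already been pulled out of the HTML (for example with BeautifulSoup) into a dict keyed by millisecond timestamp, and that days are counted in UTC; the function name and the 7-day window ending on `end_date` are illustrative:

```python
from datetime import datetime, timedelta, timezone


def sum_window(daily_values, end_date, days=7):
    """Sum daily chart values for the `days` days ending on `end_date` (inclusive).

    `daily_values` maps millisecond UNIX timestamps (int or str, as scraped)
    to counts; each timestamp is converted to a UTC calendar date.
    """
    start_date = end_date - timedelta(days=days - 1)
    total = 0
    for ms, value in daily_values.items():
        day = datetime.fromtimestamp(int(ms) / 1000, tz=timezone.utc).date()
        if start_date <= day <= end_date:
            total += int(value)
    return total
```

Calling it once per chart (page views, unique visitors, subscribers) would yield the three weekly totals.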

The main challenge this script now presents is getting it to run weekly on a headless server. The UI component is non-negotiable, so the solution will very likely come from server configuration.

Smaller updates

- Investigated automating Nextcloud data uploads

- Researched how to schedule scripts to run on an interval using systemd unit files

- Wrote technical documentation on the purpose and usage of both scripts developed so far

- Researched various alternative packages for performing HTTP requests and browser automation tasks

Future

Since the first two subtasks are complete logic-wise, barring any issues we run into later, a new one has been assigned as part of the data collection automation task. The KDE promo team collects articles about KDE and related software, and evaluates what those articles say about KDE and how they view whichever KDE tool they discuss. This evaluation is done manually, which takes time, so I've been tasked with developing a way to analyze these articles automatically.

Alongside that new subtask, solving the problem of running browser automation software on a server, and deciding which data analysis software we'll target, will greatly benefit us: it expands our options for deploying the scripts made in this task and makes their data immediately useful for the KDE promo team.

Lessons learned

It's been a lot of fun to tackle the first two tasks. I've had to pull from past experience with APIs, HTML, and HTTP that has been rotting in the deeper parts of my brain, as well as learn much more about how modern, full-featured websites deploy those tools. I'm a bit anxious about the problem of server deployment, since I want these scripts to be as useful and maintainable as possible for the KDE promotion team, but I'm confident we'll find a solution, and I'm sure it will feel very rewarding to solve.

Concerning the new subtask, this assignment is a departure from the first two, and it's very likely that a lightweight, local AI/machine learning method will be brought into the process. That makes it exciting to tackle, since it's so different from the previous subtasks and draws on an entirely separate emerging field. I'm very much looking forward to rounding out my skills with the new challenges this subtask presents.

The UN Open Source Principles consist of eight guidelines and provide a framework to guide the use, development and sharing of open source software across the United Nations. This is part of the UN's Open Source United (OSU) initiative, which aims to coordinate and increase open source efforts across the United Nations system.

According to OSU:

"Across the UN, teams are building powerful digital tools, but much of this work is isolated. Open Source United breaks these silos, encourages collaboration, and makes innovation easier to share and reuse. By working together, we deliver solutions that are more transparent, sustainable, and cost-effective."

Alongside 119 other FLOSS organisations, KDE will support the effort to connect UN teams and their partners, as well as the global community, encouraging them to share, discover and reuse open-source solutions in their work to carry out the UN’s mission worldwide.

Tuesday, 17 February 2026

Qt for MCUs 2.11.2 LTS has been released and is available for download. This patch release provides bug fixes and other improvements while maintaining source compatibility with Qt for MCUs 2.11 (see Qt for MCUs 2.11 LTS released). This release does not add any new functionality.

In my last post, I made a solemn vow to not touch Kapsule for a week. Focus on the day job. Be a responsible adult.

Success level: medium.

I did get significantly more day-job work done than the previous week, so partial credit there. But my wife's mother and sister are visiting from Japan, and they're really into horror movies. I am not. So while they were watching people get chased through dark corridors by things with too many teeth, I was in the other room hacking on container pipelines with zero guilt. Sometimes the stars just align.

Here's what came out of that guilt-free hack time.

Konsole integration: it's actually done

The two Konsole merge requests from the last post—!1178 (containers in the New Tab menu) and !1179 (container association with profiles)—are merged. They're in Konsole now. Shipped.

Building on that foundation, I've got two more MRs up:

!1182 adds the KapsuleDetector—the piece that actually wires Kapsule into Konsole's container framework. It uses libkapsule-qt to list containers over D-Bus and OSC 777 escape sequences for in-session detection, following the same pattern as the existing Toolbox and Distrobox detectors. It also handles default containers: even if you haven't created any containers yet, the distro-configured default shows up in the menu so you can get into a dev environment in one click.

!1183 is a small quality-of-life addition: when containers are present, a Host section appears at the top of the container menu showing your machine's hostname. Click it, and you get a plain host terminal. This matters because once you set a container as your default, you need a way to get back to the host without going through settings. Obvious in hindsight.

The OSC 777 side of this lives in Kapsule itself—kapsule enter now emits container;push / container;pop escape sequences so Konsole knows when you've entered or left a container. This is how the tab title and container indicator stay in sync.
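For illustration, a sketch of building and recognizing those sequences. The `OSC 777 ; container ; push` / `pop` prefix comes from the post; the trailing fields (container name, then a runtime label) are an assumption modelled on the Toolbox-style convention, so check Konsole's detector for the exact layout. All function names are hypothetical:

```python
ESC = "\x1b"
OSC = ESC + "]"    # Operating System Command introducer
ST = ESC + "\\"    # String Terminator


def container_push(name, runtime="kapsule"):
    """Sequence telling the terminal the session just entered container `name`."""
    # Field layout after "container;push" is an assumption (Toolbox-style).
    return f"{OSC}777;container;push;{name};{runtime}{ST}"


def container_pop():
    """Sequence telling the terminal the session left the container."""
    return f"{OSC}777;container;pop;;{ST}"


def parse_container_event(seq):
    """Return ("push", name), ("pop", None), or None for other input."""
    prefix = OSC + "777;"
    if not (seq.startswith(prefix) and seq.endswith(ST)):
        return None
    fields = seq[len(prefix):-len(ST)].split(";")
    if fields[:2] == ["container", "push"] and len(fields) >= 3:
        return ("push", fields[2])
    if fields[:2] == ["container", "pop"]:
        return ("pop", None)
    return None
```

An `enter` command would write the push sequence to the terminal (e.g. `sys.stdout.write(container_push("dev"))` followed by a flush) before starting the shell, and the pop sequence after the shell exits.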

Four merge requests across two repos (Konsole and Kapsule) to get from "Konsole doesn't know Kapsule exists" to "your containers are in the New Tab menu and your terminal knows when you're inside one." Not bad for horror movie time.

Configurable host mounts: the trust dial is real

In the last post, I talked about making filesystem mounts configurable—turning the trust model into a dial rather than a switch. That's shipped now.

--no-mount-home does what it says—your home directory stays on the host, the container gets its own. --custom-mounts lets you selectively share specific directories. And --no-host-rootfs goes further, removing the full host filesystem mount entirely and providing only the targeted socket mounts needed for Wayland, audio, and display to work.
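One way to picture the dial is as a function from options to a mount table. This is a hypothetical sketch: the field names mirror the flags above, while the socket paths (under `XDG_RUNTIME_DIR`, plus `/tmp/.X11-unix`) and the `/.kapsule/host` target are illustrative rather than Kapsule's actual table:

```python
import os
from dataclasses import dataclass, field


@dataclass
class MountOptions:
    mount_home: bool = True      # cleared by --no-mount-home
    host_rootfs: bool = True     # cleared by --no-host-rootfs
    custom_mounts: list = field(default_factory=list)  # from --custom-mounts


def plan_mounts(opts, home, runtime_dir):
    """Return (host_path, container_path) pairs for the requested trust level."""
    mounts = []
    if opts.host_rootfs:
        # Full host filesystem, exposed under a dedicated prefix.
        mounts.append(("/", "/.kapsule/host"))
    else:
        # Only the sockets needed for Wayland, audio, and X11.
        for rel in ("wayland-0", "pipewire-0", "pulse/native"):
            path = os.path.join(runtime_dir, rel)
            mounts.append((path, path))
        mounts.append(("/tmp/.X11-unix", "/tmp/.X11-unix"))
    if opts.mount_home:
        mounts.append((home, home))
    for path in opts.custom_mounts:
        mounts.append((path, path))
    return mounts
```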

The use case I had in mind was sandboxing AI coding agents and other tools you don't fully trust with your home directory. But it's also useful for just keeping things clean—some containers don't need to see your host files at all.

Snap works now

Here's a screenshot of Firefox running in a Kapsule container on KDE Linux, installed via Snap:

I expected this one to be a multi-day ordeal. It wasn't.

Snap apps—like Firefox on Ubuntu—run in their own mount namespace, and snap-update-ns can't follow symlinks that point into /.kapsule/host/. So our Wayland, PipeWire, PulseAudio, and X11 socket symlinks were invisible to anything running under Snap, resulting in helpful errors like "Failed to connect to Wayland display."

The fix was straightforward: replace all those symlinks with bind mounts via nsenter. Bind mounts make the sockets appear as real files in the container's filesystem, so Snap's mount namespace setup handles them correctly. That was basically it.

While I was in there, I batched all the mount operations into a single nsenter call instead of running separate incus exec invocations per socket. That brought the mount setup from "noticeably slow" to "instant"—roughly 10-20x faster on a cold cache. And the mount state is now cached per container, so subsequent kapsule enter calls skip the work entirely.
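A sketch of the batching and caching idea, assuming the container's init PID is already known and that source paths are visible inside the container's mount namespace (for instance under `/.kapsule/host/`). `build_nsenter_argv`, `ensure_socket_mounts`, and the in-memory cache are hypothetical; a real cache may persist across invocations:

```python
import os
import shlex
import subprocess

_mounted = set()  # containers whose socket mounts are already in place (in-memory only)


def build_nsenter_argv(pid, mounts):
    """One nsenter call that bind-mounts every (source, target) socket pair.

    Paths are resolved inside the container's mount namespace.
    """
    lines = ["set -e"]  # separate lines so any failing step aborts the script
    for source, target in mounts:
        src, dst = shlex.quote(source), shlex.quote(target)
        # A bind mount needs an existing target; for a socket, an empty file works.
        lines.append(f"mkdir -p {shlex.quote(os.path.dirname(target))}")
        lines.append(f"touch {dst}")
        lines.append(f"mount --bind {src} {dst}")
    script = "\n".join(lines)
    return ["nsenter", "--target", str(pid), "--mount", "--", "sh", "-c", script]


def ensure_socket_mounts(container, pid, mounts, run=subprocess.run):
    """Run the batched mount once per container; later calls hit the cache."""
    if container in _mounted:
        return False
    run(build_nsenter_argv(pid, mounts), check=True)
    _mounted.add(container)
    return True
```

The design point is that one process spawn replaces one per socket, and the cache check skips even that on repeat entries.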

NVIDIA GPU support (experimental)

This one's interesting both technically and in terms of where it's going.

Kapsule containers are privileged by design—that's what lets us do nesting, host networking, and all the other things that make them feel like real development environments. The problem is that upstream Incus and LXC both reject their NVIDIA runtime integration on privileged containers. The upstream LXC hook expects user-namespace UID/GID remapping, and the default codepath wants to manage cgroups for device isolation. Neither applies to our containers.

So I wrote a custom LXC mount hook that runs nvidia-container-cli directly with --no-cgroups (privileged containers have unrestricted device access anyway) and --no-devbind (Incus's GPU device type already passes the device nodes through). This leaves nvidia-container-cli with exactly one job: bind-mount the host's NVIDIA userspace libraries into the container rootfs so CUDA, OpenGL, and Vulkan work without the container image shipping its own driver stack.
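Roughly, the hook boils down to a single `nvidia-container-cli configure` call. A Python sketch, assuming LXC exports the mounted rootfs path to hooks as `LXC_ROOTFS_MOUNT`, and choosing the `compute`, `utility`, and `graphics` capabilities for CUDA, `nvidia-smi`, and OpenGL/Vulkan respectively. The capability set, `--device=all`, and the function names are illustrative; the real hook may differ:

```python
import os


def nvidia_hook_argv(rootfs, capabilities=("compute", "utility", "graphics"),
                     devices="all"):
    """Build the nvidia-container-cli call for a privileged container rootfs."""
    return [
        "nvidia-container-cli", "configure",
        "--no-cgroups",   # privileged containers already have unrestricted device access
        "--no-devbind",   # Incus's GPU device type already passes the device nodes through
        f"--device={devices}",
        *(f"--{cap}" for cap in capabilities),
        rootfs,           # remaining job: bind-mount host userspace driver libs here
    ]


def main():
    # LXC sets LXC_ROOTFS_MOUNT for hooks to the container's mounted rootfs.
    rootfs = os.environ["LXC_ROOTFS_MOUNT"]
    argv = nvidia_hook_argv(rootfs)
    os.execvp(argv[0], argv)
```

Using `os.execvp` replaces the hook process, so nvidia-container-cli's exit status becomes the hook's exit status.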

There's a catch, though. On Arch Linux, the injected NVIDIA libraries conflict with mesa packages. The container's package manager thinks mesa owns those files, and now there are mystery bind-mounts shadowing them. It works, but it's ugly and will cause problems during package updates. I hit this on Arch first, but I'd be surprised if other distros don't have the same issue—any distro where mesa owns those library paths is going to complain.

So NVIDIA support is disabled by default for now. The plan: build Kapsule-specific container images that ship stub packages for the conflicting files, and have images opt-in to NVIDIA driver injection via metadata. Two independent flags control the behavior: --no-gpu disables device passthrough entirely (still on by default), and --nvidia-drivers enables the driver injection.

Architecture: pipelines all the way down

The biggest behind-the-scenes change in v0.2.1 is the complete restructuring of container creation. The old container_service.py was a 1,265-line monolith that did everything sequentially in one massive function. It's gone now.

In its place is a decorator-based pipeline system. Container creation is a series of composable steps, each a standalone async function that handles one concern:

- Pre-creation: validate → parse image → build config → store options → build devices

- Incus API call: create instance

- Post-creation: host network fixups → file capabilities → session mode

- User setup: mount home → create account → configure sudo → custom mounts → host dirs → enable linger → mark mapped

Each step is registered with an explicit order number and gaps of 100 between steps, so inserting new functionality doesn't require renumbering everything. The decorator handles sorting by priority with stable tie-breaking, so import order doesn't matter.
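A stripped-down sketch of such a registry, showing the shape of the pattern. The `Pipeline` class and the tie-breaker are assumptions: here, ties on the order number are broken by each step's module-qualified name, which is one way to make the final order independent of import order:

```python
from collections.abc import Awaitable, Callable
from dataclasses import dataclass, field

Step = Callable[[dict], Awaitable[None]]


@dataclass(order=True)
class _Registered:
    order: int                          # explicit position, spaced by 100
    key: str                            # module-qualified name: deterministic tie-breaker
    func: Step = field(compare=False)


class Pipeline:
    """Ordered collection of async steps for one operation (create, delete, ...)."""

    def __init__(self, name):
        self.name = name
        self._steps = []

    def step(self, order):
        """Decorator that registers an async step at the given order number."""
        def register(func):
            key = f"{func.__module__}.{func.__qualname__}"
            self._steps.append(_Registered(order, key, func))
            return func
        return register

    async def run(self, ctx):
        # Sort at run time, so registration (import) order never matters.
        for entry in sorted(self._steps):
            await entry.func(ctx)
        return ctx


create = Pipeline("create")


@create.step(200)
async def parse_image(ctx):
    ctx.setdefault("log", []).append("parse image")


@create.step(100)
async def validate(ctx):
    ctx.setdefault("log", []).append("validate")
```

Running `asyncio.run(create.run({}))` executes `validate` before `parse_image` even though `parse_image` is defined first; a new step at 150 would slot between them without renumbering.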

This pattern worked well enough that I plan to extend it to other large operations—delete, start, stop—as they accumulate their own pre/post logic.

On the same theme of "define it once, use it everywhere": container creation options are now defined in a single Python schema that serves as the source of truth for the daemon's validation, the D-Bus interface (which now uses a{sv} variant dicts, so adding an option never changes the method signature), and the C++ CLI's flag generation. Add a new option in Python, recompile the CLI, and you've got a --flag with help text and type validation. Zero manual C++ work.
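A minimal sketch of the single-schema idea. `Option`, `validate_options`, and `cli_flags` are hypothetical names, and the `--no-` naming rule is an assumption that happens to reproduce the flags mentioned in this post (`--no-gpu`, `--nvidia-drivers`, `--no-mount-home`, `--no-host-rootfs`, `--custom-mounts`):

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class Option:
    name: str     # key in the a{sv} options dict sent over D-Bus
    kind: type    # expected Python type (bool, list, ...)
    default: Any
    help: str     # reused as CLI help text

    @property
    def flag(self):
        # Options that default to True are exposed as --no-<name>, others as --<name>.
        stem = self.name.replace("_", "-")
        if self.kind is bool and self.default is True:
            return f"--no-{stem}"
        return f"--{stem}"


# Hypothetical schema entries mirroring flags mentioned in the post.
SCHEMA = [
    Option("gpu", bool, True, "Pass GPU devices through to the container"),
    Option("nvidia_drivers", bool, False, "Inject the host's NVIDIA userspace drivers"),
    Option("mount_home", bool, True, "Share the host home directory"),
    Option("host_rootfs", bool, True, "Mount the full host filesystem"),
    Option("custom_mounts", list, [], "Extra host directories to share"),
]


def validate_options(options):
    """Check a D-Bus-style options dict against SCHEMA and fill in defaults."""
    known = {opt.name for opt in SCHEMA}
    unknown = set(options) - known
    if unknown:
        raise ValueError(f"unknown options: {sorted(unknown)}")
    result = {}
    for opt in SCHEMA:
        value = options.get(opt.name, opt.default)
        if not isinstance(value, opt.kind):
            raise TypeError(f"{opt.name} must be {opt.kind.__name__}")
        # Copy lists so callers never share the schema's default object.
        result[opt.name] = list(value) if isinstance(value, list) else value
    return result


def cli_flags():
    """(flag, help) pairs a code generator could turn into C++ CLI definitions."""
    return [(opt.flag, opt.help) for opt in SCHEMA]
```

Because the daemon receives a plain dict (the a{sv} payload), adding an `Option` changes neither the D-Bus method signature nor the validation code.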

The long-term plan is to use this same schema to dynamically generate the graphical UI in a future KCM. Define the option once, get the CLI flag, the D-Bus parameter, the daemon validation, and the Settings page widget—all from the same schema.

First external contributor

Marie Ramlow (@meowrie) submitted a fix for PATH handling on NixOS—the first external contribution to Kapsule. I don't have a NixOS setup to test it on, so this one's on trust. That's open source for you: someone shows up, fixes a problem you can't even reproduce, and you merge it with gratitude and a prayer.

Testing

The integration test suite grew substantially. New tests cover host mount modes, custom mount options, OSC 777 escape sequence emission, and socket passthrough. The test runner now does two full passes—once with the default full-rootfs mount and once with --no-host-rootfs—to verify both configurations work.

Bugs caught during testing that would have been embarrassing in production: a race condition in the Incus client where sequential device additions could clobber each other (the client wasn't waiting for PUT operations to complete), and Alpine containers failing because they don't ship /etc/sudoers.d by default.
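A toy reproduction of that race and its fix, using an in-memory stand-in for the Incus API in which a PUT is accepted immediately but applied later (the real API returns a background operation). `FakeIncus`, `add_device`, and `demo` are hypothetical:

```python
import asyncio


class FakeIncus:
    """In-memory stand-in: PUT is accepted immediately but applied later."""

    def __init__(self):
        self.devices = {}

    async def get_devices(self):
        return dict(self.devices)

    async def put_devices(self, devices):
        async def apply():
            await asyncio.sleep(0.01)   # the server finishes the operation later
            self.devices = devices
        return asyncio.create_task(apply())   # plays the role of an operation handle


async def add_device(api, name, config, wait=True):
    """Read-modify-write of the device map; wait=False reproduces the bug."""
    devices = await api.get_devices()
    devices[name] = config
    operation = await api.put_devices(devices)
    if wait:
        await operation   # the fix: block until the PUT has actually been applied
    return operation


async def demo(wait):
    api = FakeIncus()
    first = await add_device(api, "gpu", {"type": "gpu"}, wait)
    second = await add_device(api, "home", {"type": "disk"}, wait)
    await asyncio.gather(first, second)   # let any pending operations finish
    return sorted(api.devices)
```

Without waiting, the second read still sees the old device map, so whichever PUT lands last silently drops the other device.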

CI/CD: of all the things to break

I finally built out the CI/CD pipelines. They use the same kde-linux-builder image that builds KDE Linux itself—mainly because it's one of the few CI images with sudo access enabled, which we need for Incus operations.

The good news: the pipeline successfully builds the entire project, packages it into a sysext, deploys it to a VM, and runs the integration tests. That whole chain works. I was pretty pleased with myself for about ten minutes.

The bad news: when the first test tries to actually create a container, the entire CI job dies. Not "the test fails." Not "the runner reports an error." The whole thing just... stops. No exit code, no error message, no logs after that point. Nothing.

I'm fairly sure it's causing a kernel panic in the CI runner's VM. Which is, you know, not great.

Debugging this has been miserable. I can't get any logs after the panic because there are no logs—the kernel is gone. I tried adding debug prints before each step in the container creation pipeline to isolate exactly where it dies. The prints don't come through either, probably because of output buffering, or maybe the runner agent doesn't get a chance to stream the output to GitLab before the entire VM goes down.

The weird part: it's not a nested virtualization issue. Regular Incus works fine on the same runner—you can create containers interactively, no problem. And it doesn't reproduce on KDE Linux at all. Something about the specific combination of the CI environment and Kapsule's container creation path is triggering it, and I have no way to see what.

I've shelved this for now. The pipeline is there, the build and deploy stages work, and the tests would work if the runner didn't kernel panic when Kapsule tries to create a container. If anyone reading this has ideas, I'm all ears.

What's next: custom container images

The biggest item on my plate is custom container images. Right now, Kapsule uses stock distribution images from the Incus image server. They work, but they're not optimized for our use case—things like the NVIDIA stub packages I mentioned above need to live somewhere, and "just install them at container creation time" adds latency and fragility.

Incus uses distrobuilder for image creation, so the plan is straightforward: image definitions live in a directory in the Kapsule repo, a CI pipeline invokes distrobuilder to build them, and the images get published to a server.
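A sketch of the CI side, assuming one YAML definition per image in a repo directory and distrobuilder 3.x, whose Incus target is the `build-incus` subcommand (distrobuilder generally runs as root, which lines up with the sudo-enabled CI image). The directory layout and function names are hypothetical:

```python
import subprocess
from pathlib import Path


def distrobuilder_commands(definitions_dir, output_root):
    """One `distrobuilder build-incus` call per <image>.yaml, output to <output_root>/<image>."""
    commands = []
    for definition in sorted(Path(definitions_dir).glob("*.yaml")):
        target = Path(output_root) / definition.stem
        commands.append(["distrobuilder", "build-incus", str(definition), str(target)])
    return commands


def build_all(definitions_dir, output_root, run=subprocess.run):
    # Fail the CI job on the first image that doesn't build.
    for cmd in distrobuilder_commands(definitions_dir, output_root):
        run(cmd, check=True)
```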

The "published to a server" part is where it gets political. I talked to Ben Cooksley about hosting Kapsule images on KDE infrastructure, and he's—understandably—not yet convinced that Kapsule needs its own image server. It's a fair pushback. This is all still experimental, and spinning up image hosting infrastructure for a project that might change direction is a reasonable thing to be cautious about.

So for now, I'll host the images on my own server. They probably won't be the default, since the server is in the US and download speeds won't be great for everyone. But they'll be available for testing and for anyone who wants the NVIDIA integration or other Kapsule-specific tweaks. I'll bug Ben again when the image story is more fleshed out and there's a clearer case for why KDE infrastructure should host them.

Beyond that: get the Konsole MRs (!1182 and !1183) reviewed and merged, and figure out why CI kills the kernel. The usual.

Plasma 6.6 makes your life as easy as possible, without sacrificing the flexibility or features that have made Plasma the most versatile desktop in the known universe.

With that in mind, we’ve improved Plasma’s usability and accessibility, and added practical new features into the mix.

Check out what’s new and how to use it in our (mostly) visual guide below:

Highlights

On-Screen Keyboard

Enjoy our new and improved on-screen keyboard

Spectacle Text Recognition

Extract text from screenshots in Spectacle

Plasma Setup

Set up a user account after the operating system has been installed

New Features

Those who like tailoring the look and feel of their environment can now turn their current setup into a new global theme! This custom global theme can be used for the day and night theme switching feature.

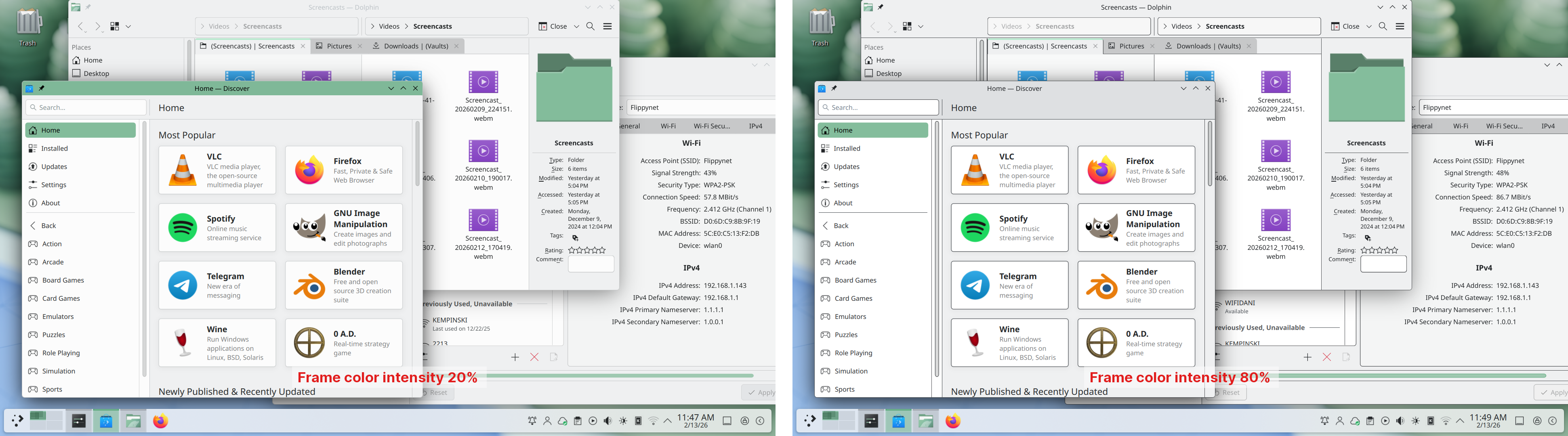

A more subtle way of modifying the look of your apps is by changing the color intensity of every frame:

Choose emoji (Meta+.) skin tones more easily with a new skin tone selector:

A major focus of Plasma 6.6 has been speeding up common workflows. So if the system has a camera, you can quickly connect to a new Wi-Fi network simply by scanning its QR code:

Hover the pointer over any app’s icon playing sound in the task manager, and scroll to adjust its volume:

And save yourself a click by enabling Open on hover in your Windows List widget. You can also filter out windows not on the current desktop or activity:

Hold down the Alt key and double-click on a file or folder on the desktop to bring up its properties:

Accessibility

To help everyone use and enjoy Plasma, we’ve improved accessibility across the board.

If you have colorblindness, check out the filters on System Settings’ Accessibility page, under Color Blindness Correction. Plasma 6.6 adds a new grayscale filter, bringing the total to four filters that account for different kinds of colorblindness:

Still in the area of enhancements for the visually impaired, our Zoom and Magnifier feature has gained a new tracking mode that always keeps the pointer centered on the screen, bringing the total to four modes:

In addition, we added support for “Slow Keys” on Wayland, and the standardized “Reduced Motion” accessibility setting.

Screenshots and Screen Recording

Speaking of accessibility, Spectacle can now recognize and extract text from images it scans. Among other use cases, this makes it easy to write alt texts for visually-impaired users:

You can also filter windows out of a screencast by choosing a special option from the pop-up menu that appears when right-clicking a window’s title bar:

Virtual Keyboard

Plasma 6.6 also features a new on-screen keyboard! Say hello to the brand-new Plasma Keyboard:

Plasma Setup

Plasma Setup is the new first-run wizard for Plasma, and creates and configures user accounts separately from the installation process.

With Plasma Setup, the technical steps of operating system installation and disk partitioning can be handled separately from user-facing steps like setting up an account, connecting to a network, and so on. This facilitates important use cases such as:

- Companies shipping Plasma pre-installed on devices

- Businesses or charity organizations refurbishing computers with Plasma to give them new life

- Giving away or selling a computer with Plasma on it, without giving the new owner access to the previous owner’s data

But That’s Not All…

Plasma 6.6 is overflowing with goodies, including:

- The ability to have virtual desktops only on the primary screen

- An optional new login manager for Plasma

- Optional automatic screen brightness on devices with ambient light sensors

- Optional support for using game controllers as regular input devices

- Font installation in the Discover software center, on supported operating systems

- Process priority selection in System Monitor

- Standalone Web Browser and Audio Volume widgets can be pinned open

- Support for USB access prompts and a visual refresh of other permission prompts

- Smoother animations on high-refresh-rate screens

To see the full list of changes, check out the complete changelog for Plasma 6.6.

In Memory of Björn Balazs

In September, we lost our good friend Björn Balazs to cancer.

An active and passionate contributor, Björn was still holding meetings for his Privact project from bed even while seriously ill during Akademy 2025.

Björn’s drive to help people achieve the privacy and control over technology that he believed they deserved is the stuff FLOSS legends are made of.

Björn, you are sorely missed and this release is dedicated to you.

Plasma Setup, the new wizard that guides users through the initial configuration of KDE Plasma, is having its first release as part of the Plasma 6.6 release!

With Plasma Setup, the technical steps of operating system installation and disk partitioning can be handled separately from user-facing steps like setting up an account, connecting to a network, and so on. This facilitates important use cases such as:

- Companies shipping Plasma pre-installed on devices

- Businesses or charity organizations refurbishing computers with Plasma to give them new life

- Giving away or selling a computer with Plasma on it, without giving the new owner access to the previous owner’s data

This has been several months in the making, as it has been my primary focus ever since I was hired by KDE e.V. last year. The project has seen a ton of work, and we've collaborated with distros and other stakeholders to ensure it meets the needs of the community.

I am very excited to see Plasma Setup finally in the hands of users, and how it will make KDE (and Linux/FOSS in general) more accessible to a wider audience. This is a key piece that was needed in order for Plasma to be more viable and accessible to a whole class of users (non-technical end-users, businesses, governments, etc.).

There are still plenty of improvements that can be made, and contributions are very welcome! If you are interested in contributing, please check out the project on KDE's GitLab: https://invent.kde.org/plasma/plasma-setup

Monday, 16 February 2026

Here is an overview of the new features added to the Quick3D.Particles module in Qt 6.10 and 6.11. The goal was to support effects that look like they are interacting with the scene, without expensive simulations or calculations. Specifically, we'll use a rain effect as an example when going through the new features.

Sunday, 15 February 2026

Tellico 4.2 is available, with some improvements and bug fixes. This release now requires Qt6 (> 6.5) as well as KDE Frameworks 6. One notable behavior change is that when images are removed from the collection, the image files themselves are also removed from the collection data folder.

Users have provided substantial feedback in a number of areas to the mailing list recently, which is tremendously appreciated. I’m always glad to hear how Tellico is useful and how it can be better. Back up those data files!

Improvements:

- Added new data sources for DOI Foundation, Metron, and ISFDB.

- Updated data sources for Dark Horse Comics, Springer Nature, MusicBrainz, Kino.de, and BoardGameGeek (Bug 511824).

- Added option to change the file preview size and increased default to 256×256 px.

- Updated file saving to remove images no longer in collection from the data folder (Bug 509244).

- Added capability to import OnMyShelf JSON files.

Bug Fixes:

- Fixed bug with XML generation for user-locale (Bug 512581).

@shibe_13:matrix.org

toscalix
