Hello again everyone!

I’m Derek Lin, also known as kenoi, a second-year Math student at the University of Waterloo.

Through Google Summer of Code 2025 (GSoC), mentored by Harald Sitter, Tobias Fella, and Nicolas Fella, I have been developing Karton, a virtual machine manager for KDE.

As the program wraps up, I thought it would be a good idea to put together what I’ve been able to accomplish as well as my plans going forward.

A final look at Karton after the GSoC period.

Research and Initial Work

The main motivation behind Karton is to provide KDE users with a more Qt-native alternative to GTK-based virtual machine managers, as well as an easy-to-use experience.

I first expressed interest in working on Karton in early February, when I made the initial full rewrite (see MR #4) using libvirt and a new UI that wrapped the virt-install and virt-viewer CLIs. During this time, I was doing research, writing a proposal, and trying out different virtual machine managers like GNOME Boxes, virt-manager, and UTM.

You can read more about it in my project introduction blog!

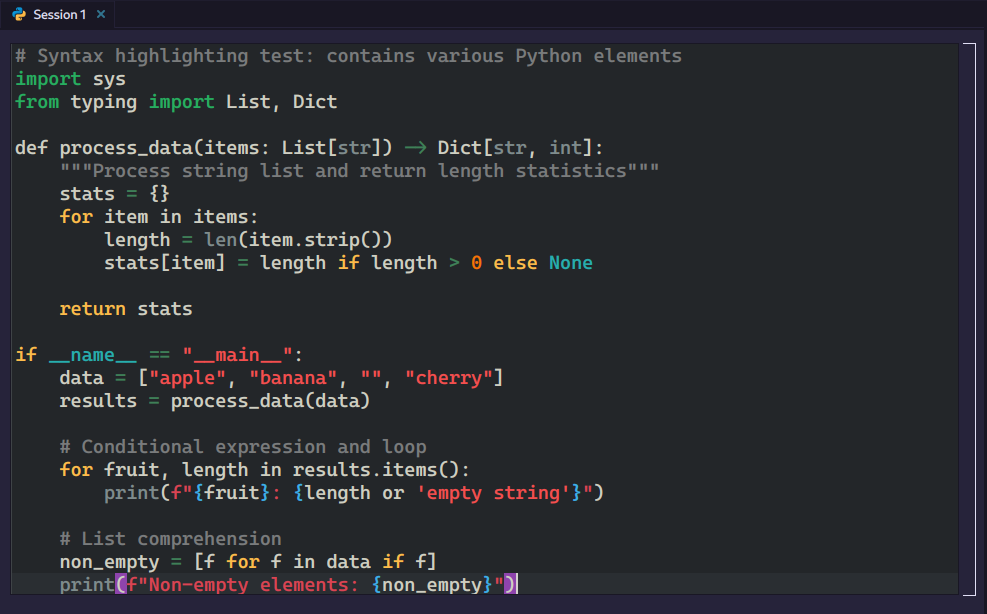

A screenshot of my rewrite on March 8, 2025.

VM Installation

One of my goals for the project was to develop a custom libvirt domain XML generator using Qt libraries and the libosinfo GLib API. I started working on the feature in advance in April and was able to have it ready for review before the official GSoC coding period.

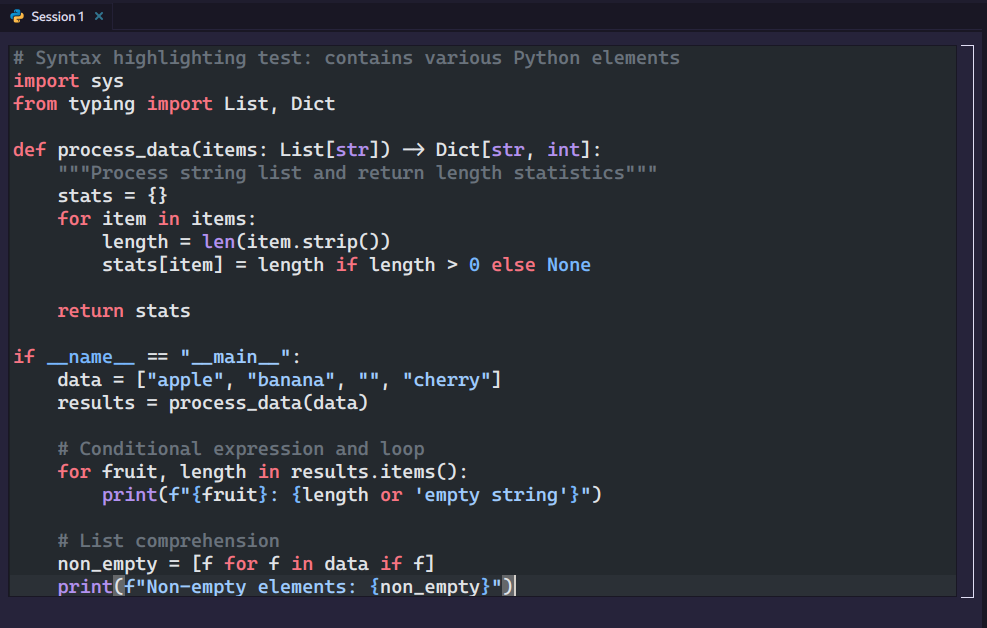

I created a dialog to accept a VM name, installation media, storage, allocated RAM, and CPUs. libosinfo attempts to identify the ISO file and return an OS short ID (e.g., fedora40 or ubuntu24.04); if it cannot, users need to select one from the displayed list.

Through the OS ID, libosinfo can provide certain specifications needed in the libvirt domain XML. Karton then fills in the rest: it generates a UUID and a MAC address for the virtual network, and sets up display, audio, and storage devices. The XML file is assembled through QDomDocument and passed into a libvirt call that verifies it before adding the VM.
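As a rough illustration of the MAC address step, here is a minimal sketch in standard C++. It assumes the 52:54:00 prefix that libvirt conventionally uses for QEMU/KVM guests; the post does not say which prefix Karton picks, and the names `formatMac` and `generateMacAddress` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <random>
#include <string>

// Format six octets as a lowercase, colon-separated MAC address.
std::string formatMac(const std::array<std::uint8_t, 6> &octets)
{
    char buffer[18]; // 12 hex digits + 5 colons + terminating NUL
    std::snprintf(buffer, sizeof(buffer), "%02x:%02x:%02x:%02x:%02x:%02x",
                  static_cast<unsigned>(octets[0]), static_cast<unsigned>(octets[1]),
                  static_cast<unsigned>(octets[2]), static_cast<unsigned>(octets[3]),
                  static_cast<unsigned>(octets[4]), static_cast<unsigned>(octets[5]));
    return std::string(buffer);
}

// Hypothetical helper: keep the assumed 52:54:00 prefix fixed and
// randomize the last three octets.
std::string generateMacAddress(std::mt19937 &rng)
{
    std::array<std::uint8_t, 6> octets{0x52, 0x54, 0x00, 0, 0, 0};

    // 0x52 has the "locally administered" bit set and the multicast bit
    // clear, so the address cannot collide with a vendor-assigned one.
    assert((octets[0] & 0x02) != 0 && (octets[0] & 0x01) == 0);

    std::uniform_int_distribution<int> randomByte(0, 255);
    for (std::size_t i = 3; i < octets.size(); ++i) {
        octets[i] = static_cast<std::uint8_t>(randomByte(rng));
    }
    return formatMac(octets);
}
```

Passing the random engine in as a parameter keeps the helper deterministic under test; a real application would seed it from `std::random_device`.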

VM information (id, name, state, paths, etc.) in Karton is parsed explicitly from the saved libvirt XML file found in the libvirt QEMU folder, ~/.config/libvirt/qemu/{domain_name}.xml.

All in all, this addition (see MR #8) completely removed the virt-install dependency, although the installer is still barebones.

A screenshot of the VM installation dialog.

The easy VM installation process of GNOME Boxes was an inspiration for me, and I’d like to improve Karton’s installer in the future by adding a media installer and better error handling.

Official Coding Begins!

A few weeks into the official coding period, I was still addressing feedback and polishing my VM installer merge request. This work introduced a much cleaner separation of class interfaces for storing individual VM data.

SPICE Client and Viewer

My earlier use of virt-viewer for interacting with virtual machines was meant as a temporary solution: it is a separate application, is poorly integrated with Qt/Kirigami, and lacks the customizability Karton needs.

Previously, clicking the view button would open a virt-viewer window.

As such, the bulk of my time was spent working with SPICE directly, using the spice-client-glib library, to create a custom Qt SPICE client and viewer (see MR #15). The client needed to manage the state of the connection to VM displays and render them to KDE (Kirigami) windows. Other features, such as input forwarding and audio playback, also needed to be implemented.

I had configured all Karton-created VMs to use autoport for graphics, which dynamically assigns a port at runtime. Consequently, I needed to use a CLI tool, virsh domdisplay, to fetch the SPICE URI and establish the initial connection.
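For illustration, virsh domdisplay prints a URI along the lines of spice://127.0.0.1:5900. The sketch below splits such a string into host and port in standard C++. The `SpiceEndpoint` type and the `parseSpiceUri` function are hypothetical names, and the parser only handles the simple scheme://host:port shape, not query parameters or TLS ports.

```cpp
#include <cstddef>
#include <optional>
#include <string>

struct SpiceEndpoint {
    std::string host;
    int port;
};

// Hypothetical helper: split "spice://host:port" into host and port.
// Returns std::nullopt for anything that does not match that shape.
std::optional<SpiceEndpoint> parseSpiceUri(std::string uri)
{
    // Command output usually ends with a newline; strip trailing whitespace.
    while (!uri.empty() && (uri.back() == '\n' || uri.back() == '\r' || uri.back() == ' ')) {
        uri.pop_back();
    }

    const std::string scheme = "spice://";
    if (uri.compare(0, scheme.size(), scheme) != 0) {
        return std::nullopt;
    }

    // The last colon separates host and port; it must come after the scheme.
    const std::size_t colon = uri.rfind(':');
    if (colon == std::string::npos || colon < scheme.size() || colon + 1 >= uri.size()) {
        return std::nullopt;
    }

    const std::string host = uri.substr(scheme.size(), colon - scheme.size());
    const std::string portText = uri.substr(colon + 1);
    if (host.empty() || portText.size() > 5) {
        return std::nullopt;
    }

    int port = 0;
    for (const char c : portText) {
        if (c < '0' || c > '9') {
            return std::nullopt;
        }
        port = port * 10 + (c - '0');
    }
    if (port < 1 || port > 65535) {
        return std::nullopt;
    }
    return SpiceEndpoint{host, port};
}
```

Returning `std::optional` lets the caller treat "the VM is not running yet" and "unexpected output" the same way: there is simply no endpoint to connect to.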

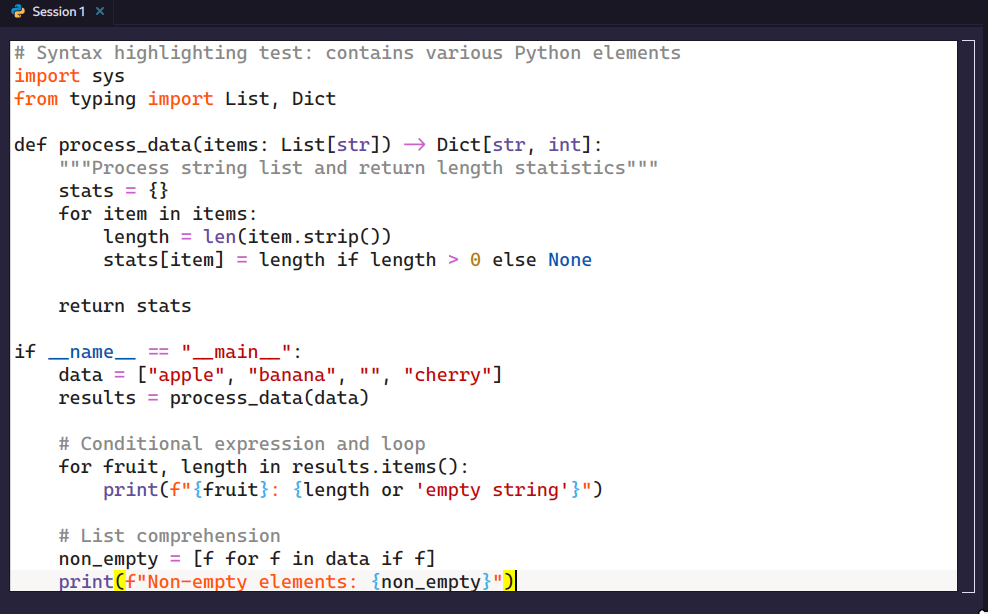

The viewer display works through a frame buffer. The approach I took was rendering the pixel array I received to a QImage, which could be drawn onto a QQuickItem and displayed in the window. To know when to update, the viewer listens to the SPICE primary display callback.

You can read more about it in my Qt SPICE client blog. As noted, this approach is quite inefficient as it needs to create a new QImage for every frame. I plan on improving this in the future.
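One possible direction for reducing the per-frame cost is to keep a persistent frame and copy only the region that changed. The sketch below shows that idea in standard C++ with no Qt or SPICE types; it is not Karton's implementation. It assumes 32-bit pixels, a source surface whose rows may be padded (hence the stride parameter), and that the client is told which rectangle was invalidated. `Frame` and `copyDirtyRect` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical persistent frame: 32-bit pixels, rows stored back to back.
struct Frame {
    int width;
    int height;
    std::vector<std::uint32_t> pixels;

    Frame(int w, int h)
        : width(w), height(h),
          pixels(static_cast<std::size_t>(w) * static_cast<std::size_t>(h), 0)
    {
    }
};

// Copy only the rectangle (x, y, width, height) from the source surface
// into the frame. sourceStride is the length of one source row in bytes,
// which may be larger than width * 4 because of padding.
void copyDirtyRect(Frame &frame, const std::uint8_t *source, std::size_t sourceStride,
                   int x, int y, int width, int height)
{
    assert(x >= 0 && y >= 0 && width >= 0 && height >= 0);
    assert(x + width <= frame.width && y + height <= frame.height);

    const std::size_t bytesPerPixel = sizeof(std::uint32_t);
    for (int row = y; row < y + height; ++row) {
        const std::size_t r = static_cast<std::size_t>(row);
        const std::uint8_t *from =
            source + r * sourceStride + static_cast<std::size_t>(x) * bytesPerPixel;
        std::uint32_t *to = frame.pixels.data()
            + r * static_cast<std::size_t>(frame.width) + static_cast<std::size_t>(x);
        std::memcpy(to, from, static_cast<std::size_t>(width) * bytesPerPixel);
    }
}
```

With this layout, a small cursor blink touches a few hundred bytes instead of reallocating and refilling the whole image.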

Screenshots of my struggles getting the display to work properly.

I also had to receive and forward Qt input. Mouse input arrives as QMouseEvents; button clicks were straightforward and can be mapped directly to SPICE protocol mouse messages. Keystrokes are taken in as QKeyEvents, and the received scancodes, in evdev, are converted to PC XT for SPICE through a map generated by QEMU. Implementing scroll and drag followed similarly.
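To give a feel for the scancode conversion, here is a tiny hand-written lookup in standard C++. It covers only a handful of keys, whereas the real map is generated and far larger. The evdev codes are the Linux input key codes; encoding extended keys (the ones sent with an 0xE0 prefix on real hardware) by setting bit 0x100 is an assumption made for this sketch, and `evdevToXt` is a hypothetical name.

```cpp
#include <cstdint>
#include <optional>
#include <unordered_map>

// Hypothetical lookup from a Linux evdev key code to a PC XT scancode.
// Extended keys are written with bit 0x100 set (an assumption of this sketch).
std::optional<std::uint16_t> evdevToXt(std::uint16_t evdevCode)
{
    static const std::unordered_map<std::uint16_t, std::uint16_t> table = {
        {1, 0x01},    // KEY_ESC
        {28, 0x1c},   // KEY_ENTER
        {30, 0x1e},   // KEY_A
        {57, 0x39},   // KEY_SPACE
        {97, 0x11d},  // KEY_RIGHTCTRL (extended)
        {103, 0x148}, // KEY_UP (extended)
        {108, 0x150}, // KEY_DOWN (extended)
    };

    const auto it = table.find(evdevCode);
    if (it == table.end()) {
        return std::nullopt; // Unknown key: the caller can simply drop the event.
    }
    return it->second;
}
```

For the main block of the keyboard the two code sets happen to coincide, which is why most of the interesting entries in a full table are the extended keys.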

I also needed to manage receiving audio streams from the SPICE playback callback and writing them to a QAudioSink. One thing I found nice is how well this approach supports multiple SPICE connections. For example, opening multiple VMs creates a separate audio source for each, so users can adjust volume levels individually.

Later on, I added display frame resizing when the user resizes the Karton window as well as a fullscreen button. I noticed that doing so still causes resolution to appear quite bad, so proper resizing done through the guest machine will have to be implemented in the future.
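As a small illustration of window-side scaling, the sketch below computes where a guest frame would be drawn inside a resized window while keeping its aspect ratio. Whether Karton letterboxes like this is not stated in the post, so treat it as an assumption; `TargetRect` and `fitPreservingAspect` are hypothetical names.

```cpp
#include <cassert>

struct TargetRect {
    int x;
    int y;
    int width;
    int height;
};

// Hypothetical helper: largest rectangle with the guest's aspect ratio
// that fits inside the window, centered along the unused axis.
TargetRect fitPreservingAspect(int guestWidth, int guestHeight, int windowWidth, int windowHeight)
{
    assert(guestWidth > 0 && guestHeight > 0);
    assert(windowWidth >= 0 && windowHeight >= 0);

    TargetRect rect{0, 0, windowWidth, windowHeight};

    // Compare guestWidth/guestHeight with windowWidth/windowHeight by
    // cross-multiplying, which avoids floating point.
    const long long guestCross = static_cast<long long>(guestWidth) * windowHeight;
    const long long windowCross = static_cast<long long>(windowWidth) * guestHeight;

    if (guestCross > windowCross) {
        // Guest is relatively wider: fill the width, pad top and bottom.
        rect.height = static_cast<int>(static_cast<long long>(windowWidth) * guestHeight / guestWidth);
        rect.y = (windowHeight - rect.height) / 2;
    } else {
        // Guest is relatively taller (or equal): fill the height, pad left and right.
        rect.width = static_cast<int>(static_cast<long long>(windowHeight) * guestWidth / guestHeight);
        rect.x = (windowWidth - rect.width) / 2;
    }
    return rect;
}
```

For example, a 1024x768 guest in a 1920x1080 window is drawn at 1440x1080 with 240 pixels of padding on each side. Scaling on the host like this stretches the same pixels, which is why guest-side resizing is needed for a sharp image.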

Now, we can watch Pepper and Carrot somewhat! (no hardware acceleration yet)

UI

My final major MR was to rework my UI to make better use of screen space (see MR #25). I moved the existing VM ListView into a sidebar displaying only name, state, and OS ID. The right side would then have the detailed information of the selected VM. One of my inspirations was UTM on macOS, which shows a screenshot of the last active frame.

When a user closes the Karton viewer window, the last frame is saved to $HOME/.local/state/KDE/Karton/previews. Implementing cool features like this is much easier now that we have our own viewer! I also added some effects for opacity and hover animation to make it look nice.

Finally, I worked on media disc ejection (see MR #26). This uses a libvirt call to simulate the installation media being removed from the VM, so users can boot into their virtual hard drive after installing.

Demo Usage

As a final test of the project, I decided to create, configure and use a Fedora KDE VM using Karton. After setting specifications, I installed it to the virtual disk, ejected the installation media, and properly booted into it. Then, I tried playing some games. Overall, it worked pretty well!

List of MRs

Major Additions:

Subtle Additions:

Difficulties

My biggest regret was having a study term over this period. I had to really manage my time well, balancing studying, searching for job positions, and contributing. There was a week where I had 2 midterms, 2 interviews, and a final project, and I found myself pulling some late nights writing code at the school library. Though it’s been an exhausting school term, I am still super glad to have been able to contribute to a really cool project and get something working!

I was also new to both C++ and Qt development. Funnily enough, I had been taking, and struggling with, my first course in C++ while working on Karton. I also spent a lot of time reading documentation to familiarize myself with the different APIs (libspice, libvirt, and libosinfo).

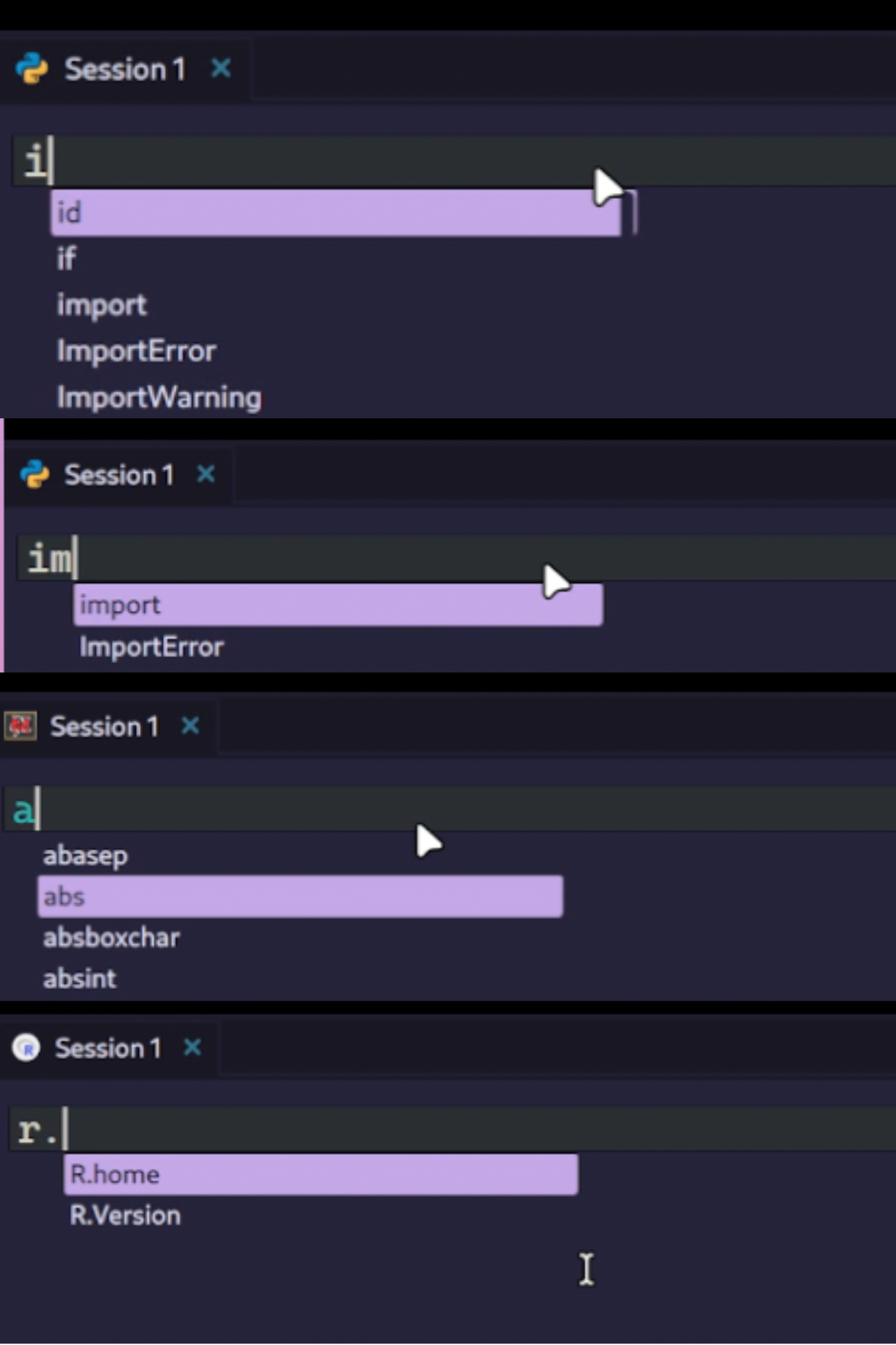

Left: Karton freezes my computer because I had too many running VMs.

Right: 434.1 GiB of virtual disks; my reminder to implement disk management.

What’s Next?

There is still so much to do! Currently, I am on vacation, and I will be attending Akademy in Berlin in September, so I won’t be able to work much until then. In the fall, I will finally be off school for a 4-month internship (yay!!). I’m hoping I will have more time to contribute again.

There’s still a lot left especially with regards to the viewer.

Here’s a bit of an unorganized list:

- Optimize VM display frame buffer with SPICE gl-scanout

- Improved scaling and text rendering in viewer

- File transfer and clipboard passthrough with SPICE

- Full VM snapshotting through libvirt (full duplication)

- Browse and installation tool for commonly installed ISOs through QEMU

- Error handling in installation process

- Configuring and modifying existing VMs in the application

- Others on the issue tracker

Release?

In its current state, Karton is not feature complete and not ready for official packaging and release. In addition to the missing features listed before, there have been a lot of new and moving parts throughout this coding period, and I’d like to have the chance to thoroughly test the code to prevent any major issues.

However, I do encourage you to try it out (at your own risk!) by cloning the repo. Let me know what you think and if you find any issues!

In other news, there are some discussions of packaging Karton as a Flatpak eventually and I will be requesting to add it to the KDE namespace in the coming months, so stay tuned!

Conclusion

Overall, it has been an amazing experience completing GSoC under KDE, and I really recommend it for anyone who is looking to contribute to open source. I’m quite satisfied with what I’ve been able to accomplish in this short period of time, and I hope to continue working and learning with the community.

Working through MRs has given me a lot of valuable and relevant industry experience going forward. A big thank you to my mentor, Harald Sitter, who has been reviewing and providing feedback along the way!

As mentioned earlier, Karton still has a lot to work on, and I plan on continuing my work after GSoC as well. If you’d like to read more about my work on the project in the future, please check out my personal blog and the development Matrix room, karton:kde.org.

Thanks for reading!

Socials

Website: https://kenoi.dev/

Mastodon: https://mastodon.social/@kenoi

GitLab: https://invent.kde.org/kenoi

GitHub: https://github.com/kenoi1

Matrix: @kenoi:matrix.org

Discord: kenyoy
