Monday, 19 May 2025

We have moved the deadline for talk submission for Akademy 2025 to the end of the month. Submit your talks now!

https://mail.kde.org/pipermail/kde-community/2025q2/008217.html

I recently made a patch to the Konsole terminal emulator that adds a couple more things to the current tab layout saving system: the size (columns and lines) and the working directory of each split.

You can find the patch here: ViewManager: Save columns, lines and working directory to tabLayout (!1095)

It's a feature I've seen in other terminal emulators, so I wanted to add it to Konsole as well.

Note that this is not in the current version of Konsole, but it will be in the next one, 25.07 (unless it gets backported, of course).

Even in the current version of Konsole, you can save your tab layout.

This produces a JSON file like this:

```json
{
    "Orientation": "Vertical",
    "Widgets": [
        {
            "SessionRestoreId": 0
        },
        {
            "SessionRestoreId": 0
        }
    ]
}
```

As it stands, though, this isn't that useful: the split sizes aren't saved, for example.

My changes now allow you to save the size of the splits and the working directories, like this:

```json
{
    "Orientation": "Horizontal",
    "Widgets": [
        {
            "Columns": 88,
            "Command": "",
            "Lines": 33,
            "SessionRestoreId": 0,
            "WorkingDirectory": "/home/akseli/Repositories"
        },
        {
            "Orientation": "Vertical",
            "Widgets": [
                {
                    "Columns": 33,
                    "Command": "",
                    "Lines": 21,
                    "SessionRestoreId": 0,
                    "WorkingDirectory": "/home/akseli/Documents"
                },
                {
                    "Columns": 33,
                    "Command": "",
                    "Lines": 10,
                    "SessionRestoreId": 0,
                    "WorkingDirectory": "/home/akseli"
                }
            ]
        }
    ]
}
```

As you can see, it saves the Columns, Lines and WorkingDirectory. It also adds an empty Command item, into which you can write any command, like ls -la, or leave it empty.

You can edit the Columns and Lines values to change the split sizes manually, but I've found it easier to just do it inside Konsole.

Old layout files still work, too: if a field doesn't exist, Konsole simply ignores it.

Note about the command: Konsole basically pretends to type that command in when it loads, so commands don't need a separate Parameter field or anything like that. You could make the command something like foo && bar -t example && baz --parameter. Konsole then just types that in and presses Enter for you. :)

I made this change because I wanted to run Konsole in the following layout:

```json
{
    "Orientation": "Horizontal",
    "Widgets": [
        {
            "Orientation": "Vertical",
            "Widgets": [
                {
                    "Columns": 139,
                    "Command": "hx .",
                    "Lines": 50,
                    "SessionRestoreId": 0
                },
                {
                    "Columns": 139,
                    "Command": "",
                    "Lines": 14,
                    "SessionRestoreId": 0
                }
            ]
        },
        {
            "Columns": 60,
            "Command": "lazygit",
            "Lines": 66,
            "SessionRestoreId": 0
        }
    ]
}
```

With these fields I can arrange the splits exactly as I want, running whatever commands I want.

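Then I have a bash script that opens this layout in the directory the script is run from (or in a directory passed as the first argument):

```bash
#!/usr/bin/env bash
# Open the saved layout in the directory given as $1, falling back to the current directory.
konsole --separate --hold --workdir "${1:-$PWD}" --layout "$HOME/Documents/helix-editor.json" &
```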

Now when I go to any project folder and run this script, it opens the Helix text editor, lazygit and an empty split the way I want them, without my having to create these splits manually every time.

Sure, I could use something like Zellij for this, but it has so many features I don't need; I just wanted to split the view and save/load that arrangement.

I would like to add a small GUI tool inside Konsole that lets you customize these layouts easily during the save process, for example by changing the WorkingDirectory and Command parameters.

For now, though, you'll have to edit the JSON file by hand, but chances are that if you want layouts like this, you're comfortable editing these kinds of files anyway.
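Until such a GUI exists, a small script can take over the hand-editing. Here is a minimal Python sketch, which is not part of Konsole. It assumes the layout format shown in the examples above: container nodes carry "Orientation" and "Widgets", and every node without "Widgets" is a split. The sketch numbers the splits in depth-first order. The helper names iter_splits, set_split_fields and update_layout_file are hypothetical.

```python
import json


def iter_splits(node):
    """Yield every split (terminal) in a Konsole tab layout tree, depth-first.

    Assumption: container nodes have a "Widgets" list; any node without one is a split.
    """
    if "Widgets" in node:
        for child in node["Widgets"]:
            yield from iter_splits(child)
    else:
        yield node


def set_split_fields(layout, index, working_directory=None, command=None):
    """Set WorkingDirectory and/or Command on the split at `index` (0-based, depth-first)."""
    split = list(iter_splits(layout))[index]
    if working_directory is not None:
        split["WorkingDirectory"] = working_directory
    if command is not None:
        split["Command"] = command
    return layout


def update_layout_file(path, edits):
    """Apply {split index: {"working_directory": ..., "command": ...}} edits to a layout file in place."""
    with open(path, encoding="utf-8") as f:
        layout = json.load(f)
    for index, fields in edits.items():
        set_split_fields(layout, index, **fields)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(layout, f, indent=4, ensure_ascii=False)
```

In the helix-editor.json layout above, index 0 is the Helix split, 1 the empty split and 2 the lazygit split. So update_layout_file(path, {1: {"command": "git status"}}) would fill in the empty split's command and leave everything else untouched. Because Konsole ignores missing fields, the script only ever adds or overwrites keys and never removes any.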

I hope others will find this useful as well, for things like system monitoring.

Hope you like it!

(This blog post was originally posted on KDE Blogs.)

Hi everyone!

I’m Derek Lin, also known as kenoi. I’m a second-year student at the University of Waterloo, and I’m really excited to be developing Karton, a virtual machine manager, this summer. This project is part of the Google Summer of Code (GSoC) 2025 program and is mentored by Harald Sitter, Tobias Fella, and Nicolas Fella. Over the past few months, I’ve been contributing to the project through some merge requests, and I hope to get it to a somewhat polished state by the end of the program!

Currently, GTK-based virtual machine managers (virt-manager, GNOME Boxes) are the norm for a lot of KDE users, but they are generally not well integrated into the Plasma environment. Although there has been work done in the past with making a Qt-Widget-based virtual machine manager, it has not been maintained for many years and the UI is quite dated.

Karton, as originally started by Aaron Rainbolt, was planned to be a QEMU frontend that drives virtualization through QEMU’s CLI. Eventually, ownership of the project was handed over to Harald Sitter, and it was made available as a GSoC project. My aim is to make Karton a native Qt-Quick/Kirigami virtual machine manager with a libvirt backend. Through libvirt, lower-level tasks can be abstracted away, which could also allow the app to be cross-platform.

If anyone is interested, I wrote about this in more detail in my GSoC project proposal (although it’s a bit outdated).

I originally became interested in the project back in February this year, when I tested out GNOME Boxes, virt-manager, and UTM. I also experimented with the virsh CLI, configuring some virtual machines through the libvirt domain XML format.

My first merge request was a proof-of-concept rewrite of the app. I implemented new UI components to list, view, configure, and install libvirt-controlled virtual machines. This used the libvirt API to fetch information on user domains, and wrapped the virt-install and virt-viewer CLIs for installing and viewing domains, respectively. I spent a big portion of this time getting to know the Qt frameworks, libvirt, and C++ overall, so a big thank you to Harald Sitter and Gleb Popov, who have been reviewing my code!

A few weeks later, I also made a smaller merge request building on my rewrite that adds QEMU virtual disk path management, which is where the main repository stands as of now.

In between my school terms in mid-April, I had the amazing opportunity to attend the Plasma Sprint in Graz, where I was able to meet many awesome developers who work on KDE. During this time, I worked on a merge request to implement a domain installer (to replace the virt-install command call). This used the libosinfo GLib API to detect a user-provided OS installer disk image and obtain the specifications needed for the libvirt domain XML format. Karton is then able to generate a custom XML file, which will make it easier to build on and implement more features in the future. I had to rework a lot of the domain configuration class structure, and I shifted away from fetching information through libvirt API calls to parsing it directly from the XML.

A libvirt domain XML configuration generated by Karton.

virt-install is a very powerful program; by comparison, my installer is still hardcoded to work with QEMU, and I haven’t been able to implement much of the device configuration yet. I am also currently addressing feedback on this merge request.

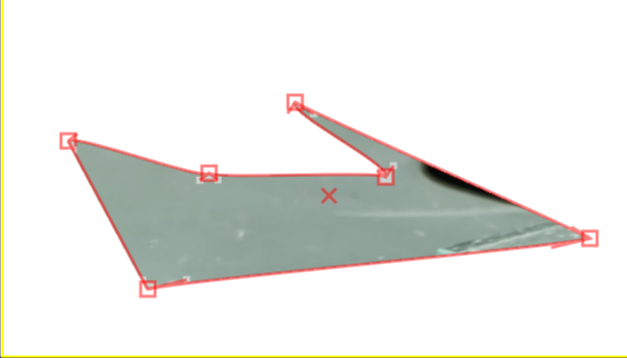
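For readers unfamiliar with the libvirt domain XML format, here is a rough idea of what such a file contains, written as a minimal Python sketch that uses only the standard library. This is not Karton’s code: Karton is written in C++ and fills in the details from libosinfo. The helper build_domain_xml, the q35 machine type, the virtio/SATA buses and the example paths are assumptions chosen for illustration.

```python
import xml.etree.ElementTree as ET


def build_domain_xml(name, memory_mib, vcpus, disk_path, iso_path=None):
    """Return a minimal libvirt domain XML string for a QEMU/KVM guest (illustrative only)."""
    domain = ET.Element("domain", type="kvm")
    ET.SubElement(domain, "name").text = name
    ET.SubElement(domain, "memory", unit="MiB").text = str(memory_mib)
    ET.SubElement(domain, "vcpu").text = str(vcpus)

    os_el = ET.SubElement(domain, "os")
    ET.SubElement(os_el, "type", arch="x86_64", machine="q35").text = "hvm"
    if iso_path:
        ET.SubElement(os_el, "boot", dev="cdrom")  # boot the installer image first
    ET.SubElement(os_el, "boot", dev="hd")

    devices = ET.SubElement(domain, "devices")
    # Main virtual disk: a qcow2 image opened by QEMU.
    disk = ET.SubElement(devices, "disk", type="file", device="disk")
    ET.SubElement(disk, "driver", name="qemu", type="qcow2")
    ET.SubElement(disk, "source", file=disk_path)
    ET.SubElement(disk, "target", dev="vda", bus="virtio")

    if iso_path:
        # Read-only CD-ROM holding the OS installer image.
        cdrom = ET.SubElement(devices, "disk", type="file", device="cdrom")
        ET.SubElement(cdrom, "driver", name="qemu", type="raw")
        ET.SubElement(cdrom, "source", file=iso_path)
        ET.SubElement(cdrom, "target", dev="sda", bus="sata")
        ET.SubElement(cdrom, "readonly")

    # SPICE display that a SPICE client can connect to.
    ET.SubElement(devices, "graphics", type="spice", autoport="yes")
    return ET.tostring(domain, encoding="unicode")


if __name__ == "__main__":
    print(build_domain_xml("test-vm", 2048, 2,
                           "/var/lib/libvirt/images/test-vm.qcow2",
                           "/tmp/installer.iso"))
```

The resulting string could be saved to a file and registered with virsh define, after which libvirt manages it like any other domain. A real installer sets many more elements, such as networking, firmware and CPU model, and this is where most of the remaining device configuration work lies.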

Recently, I also started work on a new custom Qt-Quick virtual machine viewer. It connects to virtual machines through the spice-client-glib library and renders the frames on a QQuickItem with the images it receives from the active virtual machine. This is still very buggy and has yet to support user input.

a very cursed viewer…

Warning: Karton is still under development. I would not recommend running Karton with any VMs that are important as they may break.

Once the domain installer is finished, I think I will spend the majority of my time developing and polishing the virtual machine viewer.

Some of the other things I would want to get to during the summer are:

If you have any features you’d like to see in the future, let us know in our Matrix room, karton:kde.org!

Thanks for reading! I’m still new to KDE development and virtualization in general, so if you have any suggestions or thoughts on the project, please let me know!

Email: derekhongdalin@gmail.com

Matrix: @kenoi:matrix.org

Discord: kenyoy

I also recently made a Mastodon account: mastodon.social/@kenoi

Since Akademy 2024, input handling improvements have been one of three KDE Goals with myself as a co-instigator. You may be wondering why you didn't see a series of dedicated blog posts on this topic, which I had hoped to write. Instead of taking accountability for a longer absence from Planet KDE, here's a quick recap of what's noteworthy and exciting right now.

Plasma 6.4 is scheduled to be released on June 17, 2025. The "soft feature freeze" is now in effect, which means we pretty much know which major changes will be included; only polish and bug-fixing work remains. On the input front, you can look forward to some quality-of-life improvements:

Nicolas Fella added an option to use a graphics drawing tablet in "Mouse" mode, also known as "relative mode". This allows you to use the stylus on your drawing tablet like you would use a finger on a laptop's touchpad.

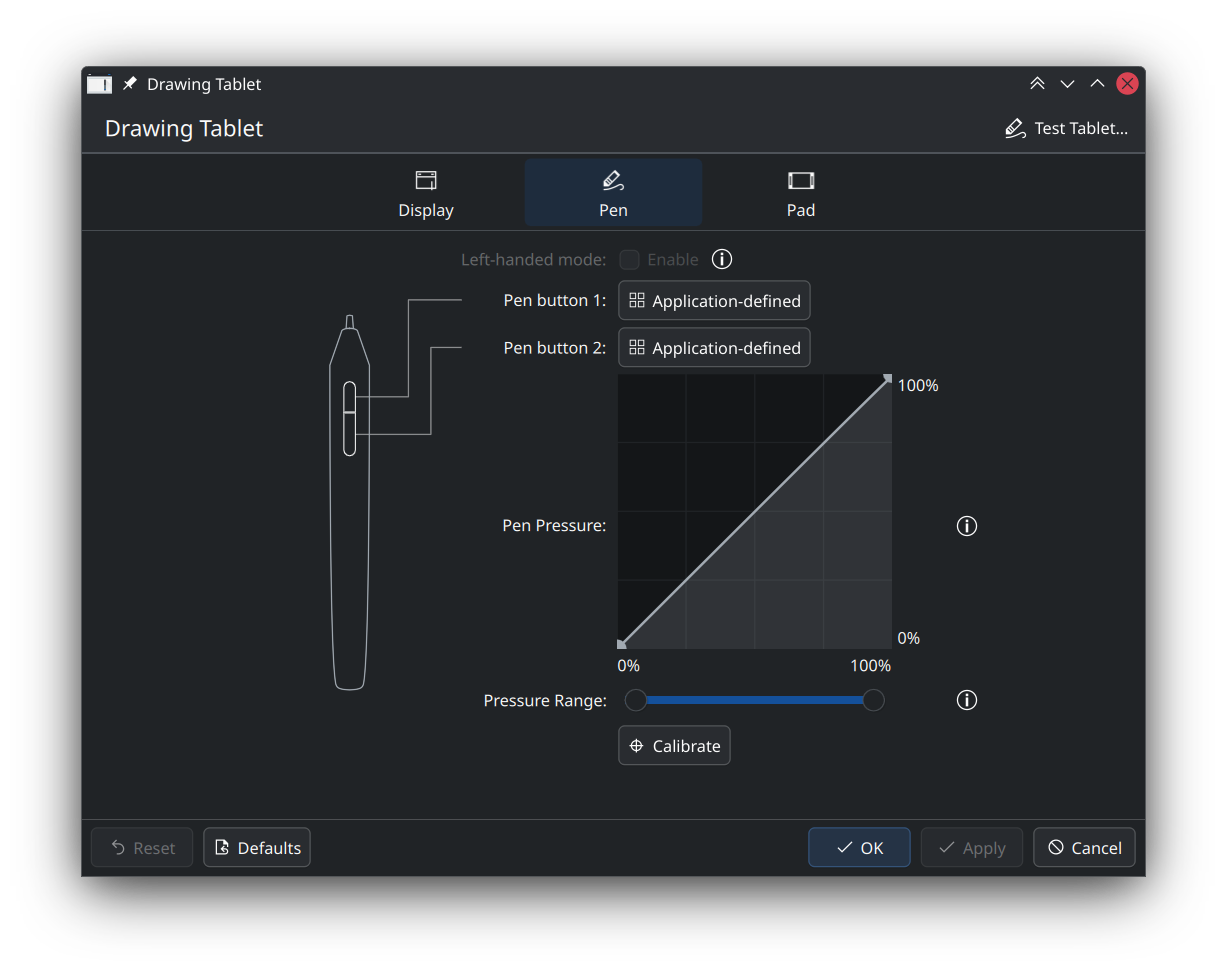

Joshua Goins keeps updating his Art on Wayland website to keep track of current and past drawing tablet improvements. For this release, the Drawing Tablet settings page will now ask for confirmation after re-calibrating your device. He also added a visualization of pen buttons to the settings page, to make it clear which button you're configuring:

Xaver Hugl added a 3-finger pinch gesture for the desktop zoom accessibility feature, which is in addition to Ctrl+Meta+scroll or Meta-"+" / Meta-"-".

Nicolas Fella also implemented the accessibility feature called MouseKeys on Wayland. This lets you move the mouse pointer using numpad keys, and can be enabled in the Accessibility settings page.

Sebastian Parborg added an option to set "move file" as the default drag & drop behavior. This applies across all KDE software. By default, dropping a file in a different folder will continue to ask if it should be moved, copied or linked.

Christoph Wolk in particular just keeps fixing tons of bugs, including many input handling improvements. He's been tackling keyboard navigation issues, scrolling bugs, mouse hover and pasting in all kinds of widgets, settings and apps. The list just goes on; here are some links from just the last two months or so: 1, 2, 3, 4, 5, 6, 7, 8, 9, 10. (There's more, but you get the gist.)

Of course others are also actively fixing issues, like Akseli Lahtinen for custom tiling keyboard shortcuts and Dolphin file renaming. I'm frankly not able to keep up with everyone's contributions, but keep following This Week in Plasma to learn about the most notable ones.

I myself don't have much to show for Plasma 6.4. All my hopes for personally making an impact are staked on future work. I did, however, ask some very nice people if they're open to mentor projects within GSoC 2025. It appears this might pay off.

Nate Graham keeps pointing out that we need more paid developers working on KDE software. Here are some popular ways to make this happen:

On that last bullet point, we recently received some nice funding commitments.

Google is perhaps best known for its repeated abuse of market power, getting hit with huge fines only to do it again in a slightly different form. They also run a program called Google Summer of Code (GSoC), which is more positive. Every year, numerous students receive a stipend to write Free & Open Source code over the summer, to the benefit of organizations like KDE, mentored by existing developers from the community. On May 8, Google announced the accepted projects for this year. KDE was assigned 15 student projects across a variety of apps and infrastructure efforts. One of these is particularly relevant to our Input Goal.

Starting in June, Yelsin 'yorisoft' Sepulveda will work on improving game controller support in KWin. Yelsin jumped right into the community by asking great questions on Matrix, learned about KDE development by getting several changes merged into Angelfish (KDE's mobile-friendly web browser), and already published an introductory blog post with more details about the project. We're excited to mentor Yelsin and get one of the oldest open Plasma bugs fixed in the process.

NLnet Foundation is an organization that supports open software, open hardware, open data and open standards. At Akademy 2024, Jos van den Oever of NLnet gave a talk and encouraged KDE contributors to apply for funding there and elsewhere. Unlike many other granting organizations, NLnet allows individuals to apply for comparatively small grants with very limited bureaucracy, between 5,000 and 50,000 EUR for first-time applicants. Last year, they already supported various improvements in accessibility and drawing tablet support in Plasma.

Natalie Clarius and I applied to NLnet following Akademy 2024. Our project would make multi-touch gestures configurable through System Settings, as well as implement stroke gestures (a.k.a. mouse gestures) for Plasma on Wayland. In April this year, NLnet approved this project together with many other open source initiatives. We've seen a lot of user requests for this functionality, so I'm happy to work on upstreaming it going forward. We're currently ramping up our efforts, so stay tuned for actual merge requests and UI designs.

What's also great is InputActions by taj-ny, which is a third-party plugin for KWin that provides customization for multi-touch gestures via text file. Its next release should include stroke gesture support as well. I'm glad that my prototype from last year was useful as a starting point for this. Getting code into upstream KWin and System Settings requires a different approach though, so it still makes sense for the NLnet project to go ahead.

NLnet approved a second KDE-related grant in the same batch, for Accessible KDE File Management by diligent Dolphin maintainer Felix Ernst. In addition to targeted improvements for Dolphin, this project will also benefit the KDE-wide Open/Save dialog, as well as the settings for editing shortcuts. With prior experience in accessibility and (obviously) Dolphin, Felix is ideally suited for this work.

You may be able to get NLnet funding too. Primary requirements:

Note: Like all such foundations, NLnet has some favorite topics including mobile, accessibility, or federated internet infrastructure. If you're interested in this kind of funding for KDE work, feel free to ask me about more details.

In late April, I dropped by the Plasma Sprint 2025 in Graz, which you may have already seen covered in other posts on Planet KDE. Among many other topics, we briefly discussed the direction of further input-related development.

Previously, Xuetian Weng (a.k.a. csslayer, the long-term maintainer of the fcitx5 input method framework) proposed a unified way to manage keyboard layouts, input methods, and other input-related tools. The gist of the proposal is that each input method (IM) would be associated with a keyboard layout in System Settings. In the linked issue, he argues that this is a better fit for Plasma than the approaches other platforms take to configuring language, layout and input methods. I briefly presented Xuetian's proposal at the Sprint, and there was a general consensus that this is a sensible way forward.

There was a question about how to deal with devices that don't have a keyboard connected to begin with. We considered some options and compared our thoughts with the actual keyboard hot-plugging behavior of a sprint attendee's Android phone. Same conclusion either way: each connected keyboard should correspond to a separate layout/IM selection, and the absence of a keyboard will likewise correspond to its own input method. Multiple input devices can be supported by switching configurations upon key-press or (dis)connect events. If we can implement this, it should turn out more versatile and yet simpler than Plasma's current settings.
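The switching behavior described above can be modeled as a small state machine. The following Python sketch is purely illustrative and hypothetical. It is not Plasma or KWin code, and the class, field and device names are made up. Each keyboard maps to its own layout/IM pair, and a special "no keyboard" entry covers the case where nothing is connected. Key presses and (dis)connect events decide which configuration is active. Letting a newly connected keyboard take over immediately is an assumption of this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InputConfig:
    layout: str        # keyboard layout name, e.g. "us" (illustrative)
    input_method: str  # input method identifier (illustrative)


NO_KEYBOARD = "<no keyboard>"  # hypothetical key for "no physical keyboard connected"


class InputConfigSwitcher:
    """Toy model: one layout/IM pair per keyboard, plus one for 'no keyboard'."""

    def __init__(self, configs, default):
        self.configs = dict(configs)  # device id -> InputConfig
        self.default = default        # used for keyboards without a stored config
        self.connected = []           # connected keyboards, oldest first
        self.active_device = NO_KEYBOARD

    @property
    def active(self):
        return self.configs.get(self.active_device, self.default)

    def on_connect(self, device):
        if device not in self.connected:
            self.connected.append(device)
        self.active_device = device   # a newly plugged-in keyboard takes over

    def on_disconnect(self, device):
        if device in self.connected:
            self.connected.remove(device)
        if device == self.active_device:
            # Fall back to the most recently connected keyboard, or to "no keyboard".
            self.active_device = self.connected[-1] if self.connected else NO_KEYBOARD

    def on_key_press(self, device):
        self.active_device = device   # typing on a keyboard selects its configuration
```

Keeping the active device as the state, rather than the active configuration, keeps the disconnect case simple. Unplugging a keyboard only changes anything if it was the one in use.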

We also discussed the virtual keyboard prototype plasma-keyboard and its most important blockers for getting included in Plasma Desktop / Plasma Mobile. It looks like the major concerns have already been captured in the issue queue, so what's needed now is a developer to buckle down and fix them one by one, plus testing in more languages.

Lots of movement overall. That said, not all of these plans have someone actively working on them. We really do need more hands on deck if we want the Input Goal proposal to be a smashing success. If you are interested in working with the community on input handling, stipend or not, we can help you help KDE. Drop by #kde-input:kde.org on Matrix if you need mentorship to guide your contributions, MR reviews to get your patches landed, or any other kind of support.

Here's a small selection of efforts that would really benefit from your development chops:

Let's get these gaps filled. Until next time. And don't forget to drop by at Akademy 2025 in Berlin for more Input Goal discussions!

Hello! I’m Azhar, a Computer Science student who loves OSS projects and contributing to KDE. This summer, I’m excited to be working on the Google Summer of Code (GSoC) project at KDE Community to integrate more KDE libraries into OSS-Fuzz.

While KDE already has some libraries integrated into OSS-Fuzz, such as KArchive, KImageFormats, and KCodecs, there are many more libraries that could benefit from this integration. The goal of this project is to expand the coverage of OSS-Fuzz across KDE libraries, making them more secure and reliable.

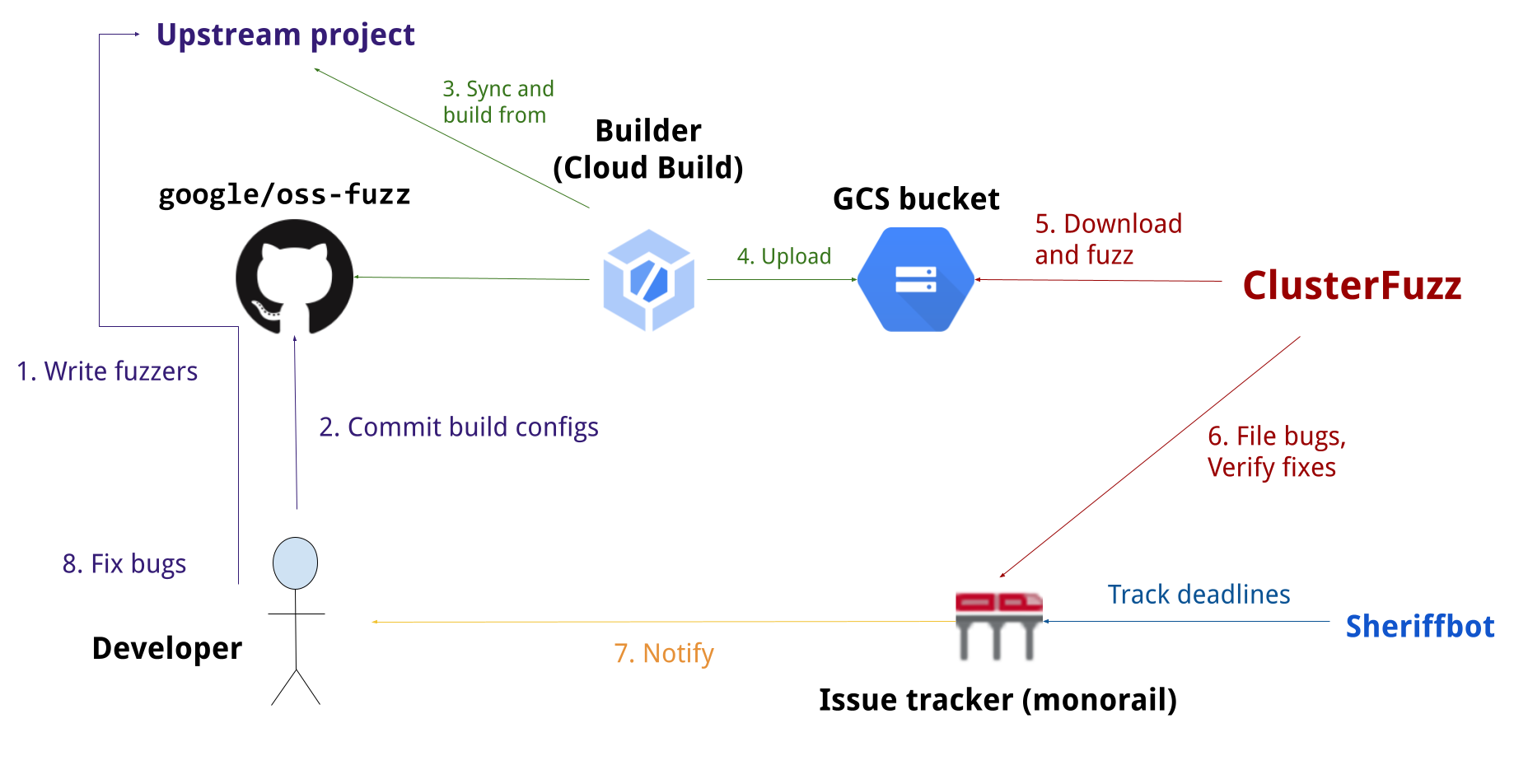

OSS-Fuzz is a service by Google that automatically finds bugs and vulnerabilities in open-source projects through fuzz testing. Fuzzing involves feeding random or unexpected data into a program to uncover vulnerabilities that might otherwise go unnoticed. OSS-Fuzz continuously runs fuzz tests on the integrated open-source projects and reports any crashes or issues it finds. This helps maintainers identify and fix bugs quickly, improving the overall quality of the software.

As of May 2025, OSS-Fuzz has helped identify and fix over 13,000 vulnerabilities and 50,000 bugs across 1,000 projects.

Source: OSS-Fuzz GitHub repository

Image from OSS-Fuzz GitHub repository, licensed under Apache 2.0.
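To make the idea of fuzzing concrete, here is a toy random fuzzer in Python. It only illustrates the principle. OSS-Fuzz actually runs coverage-guided engines such as libFuzzer against C/C++ fuzz targets, which are far more effective than the purely random inputs used here. The parser parse_record and its length-prefixed format are made up and contain a deliberate bug. In this sketch, malformed input rejected with ValueError counts as correct behavior, and any other exception counts as a crash.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Toy length-prefixed record parser with a deliberate bug (hypothetical format).

    Format: 1 length byte, `length` payload bytes, 1 checksum byte.
    """
    if not data:
        raise ValueError("empty input")   # graceful rejection
    length = data[0]
    payload = data[1:1 + length]
    checksum = data[1 + length]           # BUG: trusts the length byte, may raise IndexError
    if sum(payload) % 256 != checksum:
        raise ValueError("bad checksum")  # graceful rejection
    return payload


def fuzz(target, iterations=1000, max_len=16, seed=0):
    """Feed random byte strings to `target`; return (input, exception) pairs for unexpected errors."""
    rng = random.Random(seed)             # seeded so any crash can be reproduced
    crashes = []
    for _ in range(iterations):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            pass                          # expected: malformed input rejected cleanly
        except Exception as exc:          # anything else is a bug worth reporting
            crashes.append((data, exc))
    return crashes


if __name__ == "__main__":
    for data, exc in fuzz(parse_record)[:3]:
        print(data.hex(), type(exc).__name__)
```

Running fuzz(parse_record) quickly turns up inputs whose length byte points past the end of the data. This kind of out-of-bounds read is typical of parsers that trust values found inside untrusted input.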

The main goal of this project is to integrate more KDE libraries into OSS-Fuzz. This involves:

The objective is to integrate as many KDE libraries as possible into OSS-Fuzz by the end of the GSoC period, thereby enhancing the overall security and reliability of KDE software.

The following libraries have been identified for initial integration into OSS-Fuzz:

KFileMetaData is a library for reading and writing metadata in files. It supports various file formats, including images, audio, and video files. KFileMetaData is used by Baloo for indexing purposes. This means that many files may be processed by KFileMetaData without the user’s knowledge, making it a critical library to fuzz.

KMime is a library to assist in handling MIME data. It provides classes for parsing MIME messages. KMime is used by various KDE applications, including KMail. This again means that the library may process malformed or unexpected data without the user’s knowledge.

KDE has many thumbnailer libraries, such as KDE-Graphics-Thumbnailers. These libraries are used to generate thumbnails for various file formats, including images, videos, and documents. These thumbnailers are used by Dolphin/KIO to generate previews of files and can be exposed to untrusted data.

Integrating KDE libraries into OSS-Fuzz is an important step towards improving the security and reliability of KDE software. Expanding OSS-Fuzz coverage to more libraries will help KDE maintainers quickly identify and fix bugs before they become problems for users.

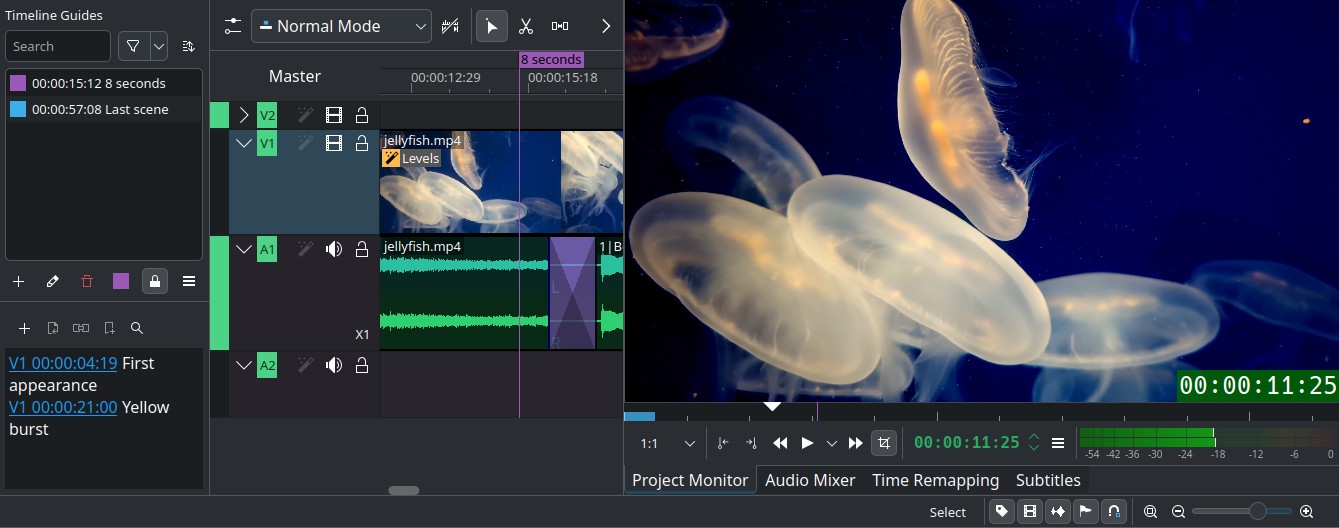

Kdenlive 25.04.1 is now available, containing several fixes and improvements. Fixed bugs and improvements include:

It is nice to note that the last two changes were made by Balooii, a new Kdenlive contributor!


For the full changelog, continue reading on kdenlive.org.

Welcome to a new issue of "This Week in KDE Apps"! Every week we cover as much as possible of what's happening in the world of KDE apps.

Wolthera van Hövell continued to work on text rendering this week. She made the text rendering mode editable, so it's now possible to switch between modes that optimize for speed, legibility, or geometric precision, plus an automatic mode (link). She also fixed a bug where the resource system was slow because font metadata was not erased when a font was removed (link).

Maciej Jesionowski improved the performance of the status bar by optimizing a function called in the hot path (link).

Balooii optimized the process of downloading the online resource thumbnails. Kdenlive now downloads the thumbnails in parallel and converts them to pixmaps directly in memory (link). They also sped up audio thumbnail generation by 2.5x (link)!

Balooii also fixed a misalignment of the monitor tools caused by rounding errors (link).

Volker Krause and Carl Schwan worked on the departure page of KTrip, redesigning it completely and fixing a few bugs (link 1, link 2, link 3 and link 4).

James Graham ported the message delegate from QML to C++ to reduce the memory usage of the timeline (link) and reworked the implementation of the hover actions (link).

Pierre Ducroquet fixed the SEARCH function (link) and a crash when decoding some formulas (link).

Salvo Tomaselli optimized Qrca's battery usage by stopping the camera on mobile devices when Qrca is in the background (link). He also made Qrca remember the last device used (link).

This blog only covers the tip of the iceberg! If you’re hungry for more, check out Nate's blog about Plasma and be sure not to miss his This Week in Plasma series, where every Saturday he covers all the work being put into KDE's Plasma desktop environment.

For a complete overview of what's going on, visit KDE's Planet, where you can find all KDE news unfiltered directly from our contributors.

The KDE organization has become important in the world, and your time and contributions have helped us get there. As we grow, we're going to need your support for KDE to become sustainable.

You can help KDE by becoming an active community member and getting involved. Each contributor makes a huge difference in KDE — you are not a number or a cog in a machine! You don’t have to be a programmer either. There are many things you can do: you can help hunt and confirm bugs, even maybe solve them; contribute designs for wallpapers, web pages, icons and app interfaces; translate messages and menu items into your own language; promote KDE in your local community; and a ton more things.

You can also help us by donating. Any monetary contribution, however small, will help us cover operational costs, salaries, travel expenses for contributors and in general just keep KDE bringing Free Software to the world.

To get your application mentioned here, please ping us in invent or in Matrix.

Hello KDE Community!

My name is Yelsin 'yorisoft' Sepulveda. I'm an engineer with experience in DevOps, Site Reliability, and Cloud Computing. I joined KDE as part of the GSoC application process early last month and have been contributing to a few projects ever since. Miraculously, my GSoC proposal has been selected! Hallelujah! This means that over this summer I'll be working on implementing game controller input recognition in KWin.

Currently, applications directly manage controller input, leading to inconsistencies, the inability of the system to recognize controller input for power management, and unintentionally enabling/disabling "lizard mode" in certain controllers. This project proposes a solution to unify game controller input within KWin by capturing controller events, creating a virtual controller emulation layer, and ensuring proper routing of input to applications. This project aims to address the following issues:

The primary goals of this project are to:

I often spend my time surfing the internet learning new things, spending quality time with family and friends, or picking up new hobbies and skills, such as music! You could say I'm someone who likes to jump between multiple hobbies and interests. As of late, I'm learning a new snare solo and how to build an online brand.

I started my career as a DevOps Engineer and SRE where I learned tools like Jenkins, Docker, and Terraform. I then transitioned to a Solutions Architect role where I worked with many different cloud technologies and helped other companies design their cloud architecture. I am relatively new to contributing to open-source projects but have been an avid user of Linux and open-source tools for over 4 years, and am committed to learning and growing in this community. Check me out on GitHub.

Google Summer of Code (GSoC) is a training/mentorship program that allows new contributors to open source to work on projects for 175 to 350 hours under the guidance of experienced mentors.

KDE will mentor fifteen projects in this year's Google Summer of Code.

Merkuro is a modern groupware suite built using Kirigami and Akonadi. Merkuro provides tools that allow you to manage your contacts, calendars, todos, and soon email messages.

This year, the focus is on making Merkuro more viable on mobile. Pablo will work on removing the QtWidgets dependency from the Akonadi background processes, which will reduce RAM consumption. Shubham Shinde will port some configuration dialogs to QML, making them easier to use on Plasma Mobile. This project will be mentored by Aakarsh MJ, Claudio Cambra, and Carl Schwan.

NeoChat is KDE's Matrix chat client.

Sakshi Gupta will work on adding video call support to NeoChat using LiveKit. This work is mentored by Tobias Fella and Carl Schwan.

KDE Linux is a new distribution the KDE Community is developing.

Desh Deepak Kant will work on a new website for the project. Derek Lin will develop a Virtual Machine Manager named Karton, and Akki Singh will port the ISO Image Writer project to QML. These projects will be mentored by Harald Sitter, Tobias Fella, and Nicolas Fella.

Good news for gamers: Yelsin Sepulveda will work on improving game controller support in KWin. This work will be mentored by Jakob Petsovits and Xaver Hugl.

Cantor is a frontend for many mathematical tools and languages.

Nanhao Lv will work on integrating KTextEditor as the default text editor, replacing the current custom editor. Zheng JiaHong will add support for Python virtual environments to the Python backend. These projects are mentored by Alexander Semke and Israel Galadima.

Azhar Momin will work on adding more KDE libraries to OSS-Fuzz to help identify bugs and security issues through fuzzing. This project is mentored by Albert Astals Cid.

Kdenlive is KDE's video editor.

Ajay Chauhan will work on enhancing timeline markers by supporting range-based markers while maintaining backward compatibility. This project is mentored by Jean-Baptiste Mardelle.

Krita is an outstanding digital painting application.

Ross Rosales will develop a floating action bar for managing layers. This work is mentored by Emmet O'Neill.

GCompris is an educational suite containing many activities.

There is work in progress to also include a management GUI for teachers to create custom datasets. Ashutosh Singh will work on implementing the UI to manage several existing activities. Johnny Jazeix and Emmanuel Charruau will mentor this project.

Srisharan V S will add AI opponents to the Mankala game. This project is mentored by Benson Muite.

Anish Tak will work on improving the mentorship.kde.org website. This project is mentored by Paul Brown and Farid Abdelnour.

Tellico 4.1.2 is available, with a few fixes.