In Konsole Layout Automation (part 1), I wrote about how to automate opening Konsole with different tabs that ran different commands. In this post, I'll talk about doing this for layouts.

Inspiration

In the past, I needed to open two connections to the same host over ssh and change to a different directory in each. I opened Konsole with a layout that had two panes. Then, Quick Commands allowed me to run a command in each pane to ssh and change to the right directory. This post will outline how to achieve that and more!

Goal: Launch a Konsole window with one tab that has multiple panes which run commands

💡 Note

For more detailed instructions on using Konsole, please see the output of konsole --help and take a look at the Command-line Options section of the Konsole Handbook.

A layout can save and load a set of panes. Unfortunately, it can't do anything else. We can, however, use profiles and the Quick Commands plugin to make the panes more useful.

Use case: See the output of different commands in the same window. For instance, you could be running htop in one pane and open your favorite editor in another.

Here's an overview of the steps:

- Set up a layout

- Use Quick Commands to run things in the panes

Set up a layout

Unfortunately, the online documentation for Konsole command line options doesn't say much about how to create a layout, or the format of its JSON file. It only mentions the command line flag --layout. Also make a note of -e which allows you to execute a command.

Fortunately, creating the layout is pretty easy. Note that a layout is limited to one tab. It will only save the window splits, nothing else. No profiles, directories, etc.

- Set up a tab in Konsole with the splits you want it to have

- Use View -> Save Tab Layout to save it to a .json file. (I personally recommend keeping these in a specific directory so they're easy to find later, and for scripting. I use ~/konsole-layouts).

- You can then use konsole --layout ~/layout-name.json to load konsole with a tab that has the splits you saved.

Use Quick Commands to do useful things in your layout

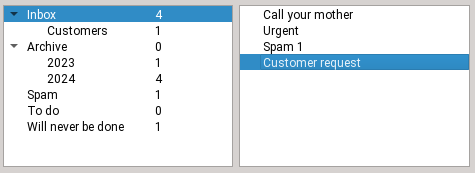

As mentioned above, you can only save splits. You can't associate a profile or run a command directly, like you can with the tilix or kitty terminals. This has been requested. In the meantime, an easy thing you can do is load a layout and then load a profile manually in each pane. This is where Quick Commands come in. These are under Plugins -> Show Quick Commands. (If you don't see this, contact your distro or wherever you installed Konsole from.)

You can use Quick Commands to run a command in each pane. You can also launch a profile (with different colors etc) that runs a command (part 1 showed how these might be used). Note, however, that running konsole itself from here will launch a new Konsole window.

End each command with || return so that you get to a prompt if the command fails.

Examples

htop || return

So, after you've launched Konsole with your layout as described above, you can do this:

- Go to Plugins -> Show Quick Commands

- Add commands you'd like to run in this session.

- Now, focus the pane and run a command.

Using these steps, I can run htop in one pane and nvtop in the other.

After you've gotten familiar with tabs and layouts, you can make a decently powerful Konsole session. Combine these with a shell function, and you can invoke that session very easily.
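As a rough sketch of the shell function idea, something like the following could go in your shell's startup file. The names klayout, klayout_path and KONSOLE_LAYOUT_DIR are made up for this example, and the directory is an assumption; point it at wherever you keep your layout files.

```bash
# Directory that holds saved layouts.
# Assumption: change this to the directory you actually use.
KONSOLE_LAYOUT_DIR="${KONSOLE_LAYOUT_DIR:-$HOME/konsole_layouts}"

# Print the full path of the layout file for a given layout name.
# (Hypothetical helper, not part of Konsole.)
klayout_path() {
    printf '%s/%s.json\n' "$KONSOLE_LAYOUT_DIR" "$1"
}

# Launch Konsole with a named layout, e.g. `klayout monitoring`.
# Refuses to start if the layout file does not exist.
klayout() {
    local file
    file="$(klayout_path "$1")"
    if [ ! -f "$file" ]; then
        echo "klayout: no such layout: $file" >&2
        return 1
    fi
    konsole --layout "$file" &
}
```

With this in place, running klayout monitoring would open Konsole with the splits saved in monitoring.json. You still run the Quick Commands by hand in each pane.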

This is still too manual!

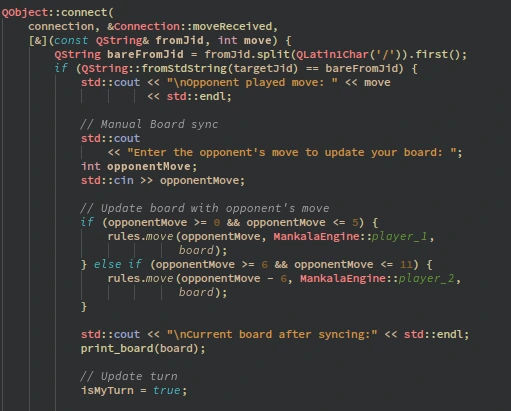

You're right. This post is about automating Konsole and having to click on things is not exactly that. You can use dbus commands in a script to load your tab layout and then run commands in each pane without using Quick Commands.

As we saw in the last post, you can use profiles to customize color schemes and launch commands. We can call those from a script in a layout. The demo script below uses dbus; take a look at the docs on scripting Konsole for details.

I'm using the layout file ~/konsole_layouts/monitoring.json for this example.

This layout file represents two panes with one vertical split (horizontal orientation describes the panes being horizontally placed):

    {
        "Orientation": "Horizontal",
        "Widgets": [
            {
                "SessionRestoreId": 0
            },
            {
                "SessionRestoreId": 0
            }
        ]
    }

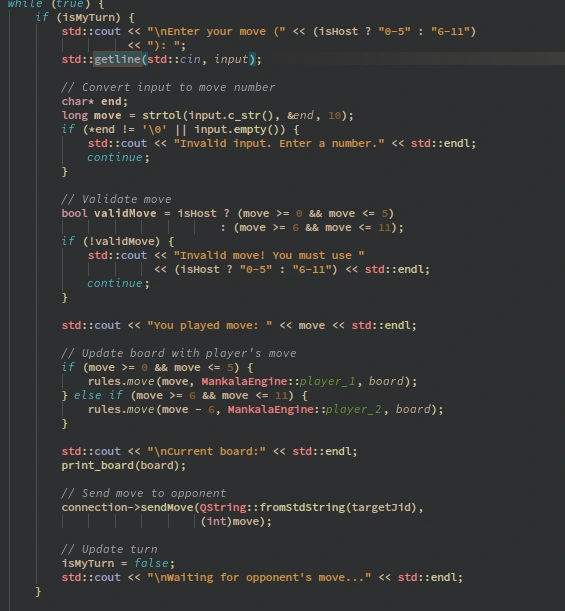
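Since the layout file is this small, you could also generate it from a script instead of saving it from the menu. Here's a minimal sketch that writes the same two-pane structure shown above. The function name write_two_pane_layout is made up for this example. "Vertical" as the value for stacked panes is my assumption, by analogy with "Horizontal"; save a stacked layout from Konsole yourself to confirm what it writes.

```bash
# Write a two-pane Konsole layout file (hypothetical helper).
#   $1: path of the layout file to create
#   $2: "Horizontal" (panes side by side, the default) or
#       "Vertical" (assumed to mean stacked panes)
write_two_pane_layout() {
    local out="$1"
    local orientation="${2:-Horizontal}"

    # Create the target directory if it is missing
    mkdir -p "$(dirname "$out")"

    # Same structure as the layout file saved by Konsole above
    cat > "$out" <<EOF
{
    "Orientation": "$orientation",
    "Widgets": [
        { "SessionRestoreId": 0 },
        { "SessionRestoreId": 0 }
    ]
}
EOF
}
```

For example, write_two_pane_layout ~/konsole_layouts/monitoring.json would recreate the layout used in this post.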

Here's an example of a simple script using that layout, which will launch fastfetch in one pane and btm in the other:

    #!/usr/bin/env bash

    # Define the commands to run
    cmd1="fastfetch"
    cmd2="btm"

    # Open a Konsole window with a saved layout.
    # Change the path to point to the layout file on your system.
    # KPID (the PID of the Konsole process) is used for renaming tabs.
    konsole --hold --layout "$HOME/konsole_layouts/monitoring.json" & KPID=$!

    # Short sleep to let the tab creation complete
    sleep 0.5

    # Find the D-Bus service of the newest Konsole instance, then run a command in each pane.
    # $service is left unquoted on purpose so any leading whitespace from the qdbus listing is dropped.
    service="$(qdbus | grep -B1 konsole | grep -v -- -- | sort -t"." -k2 -n | tail -n 1)"
    qdbus $service /Sessions/1 org.kde.konsole.Session.runCommand "${cmd1}"
    qdbus $service /Sessions/2 org.kde.konsole.Session.runCommand "${cmd2}"

    # Rename the tabs (optional)
    qdbus "org.kde.konsole-$KPID" /Sessions/1 setTitle 1 'System Info'
    qdbus "org.kde.konsole-$KPID" /Sessions/2 setTitle 1 'System Monitor'

What it does:

- Loads a layout with 2 panes, horizontally arranged

- Runs fastfetch in the left pane; runs btm in the right pane
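If you have more than two panes, or the fixed sleep turns out to be too short on your machine, here's a sketch of two small helpers that could replace those parts of the script. The names wait_for and run_in_panes and the QDBUS variable are made up for this example. It assumes the sessions are numbered /Sessions/1, /Sessions/2 and so on, as in the script above, and that qdbus exits with an error while the service isn't ready yet. On some distros the binary is called qdbus6 or qdbus-qt5, which is what the QDBUS override is for.

```bash
# Retry a command until it succeeds.
#   $1: maximum number of attempts (0.1 s apart)
#   remaining arguments: the command to run
wait_for() {
    local attempts="$1"
    shift
    while [ "$attempts" -gt 0 ]; do
        "$@" >/dev/null 2>&1 && return 0
        attempts=$((attempts - 1))
        sleep 0.1
    done
    return 1
}

# Run one command per pane: the first command goes to /Sessions/1,
# the second to /Sessions/2, and so on.
#   $1: D-Bus service name of the Konsole instance
#   remaining arguments: one command string per pane
run_in_panes() {
    local service="$1"
    local n=1
    local cmd
    shift
    for cmd in "$@"; do
        "${QDBUS:-qdbus}" "$service" "/Sessions/$n" \
            org.kde.konsole.Session.runCommand "$cmd"
        n=$((n + 1))
    done
}

# Usage sketch (not executed here):
#   konsole --hold --layout "$HOME/konsole_layouts/monitoring.json" & KPID=$!
#   service="org.kde.konsole-$KPID"
#   wait_for 50 qdbus "$service" /Sessions/2 && run_in_panes "$service" fastfetch btm
```

The usage sketch waits up to about five seconds for the second session to show up before sending anything, and gives up quietly if it never does.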

Wrap-up

That's how you can open a number of panes in Konsole that run different commands. Using this kind of shortcut at the start of every work / programming session saves a little time every day, which adds up. The marketing peeps would call it "maximizing efficiencies" or something. I hope some folks find this useful, and come up with many creative ways of making Konsole work harder for them.

Known issues and tips

- Running konsole from a Quick Command will open a new window, even if you want to just open a new tab.

- You may see this warning when using runCommand in your scripts. You can ignore it. I wasn't able to find documentation on what the concern is, exactly.

The D-Bus methods sendText/runCommand were just used. There are security concerns about allowing these methods to be public. If desired, these methods can be changed to internal use only by re-compiling Konsole. This warning will only show once for this Konsole instance.

Credits to inspirational sources

- Thanks to the Cool-Konsole-setup repo, where I found an example script for using commands in a layout via qdbus. Note: The scripts in that repo did not work as-is.

- This answer on Ask Ubuntu for improvements on the example scripts.

@nidhish07:matrix.org

KNRO
