Today, we bring you a report on the brand-new release of the Maui Project.

Community

To follow the Maui Project’s development, or just to say hi, you can join us in our Telegram group @mauiproject.

We are also present on X and Mastodon.

Thanks to the KDE contributors who have helped to translate the Maui Apps and Frameworks!

Downloads & Sources

You can get the stable release packages (APKs, AppImages, and TARs) directly from the KDE downloads server at https://download.kde.org/stable/maui/

All of the Maui repositories have the newly released branches and tags. You can get the sources right from the Maui group: https://invent.kde.org/maui

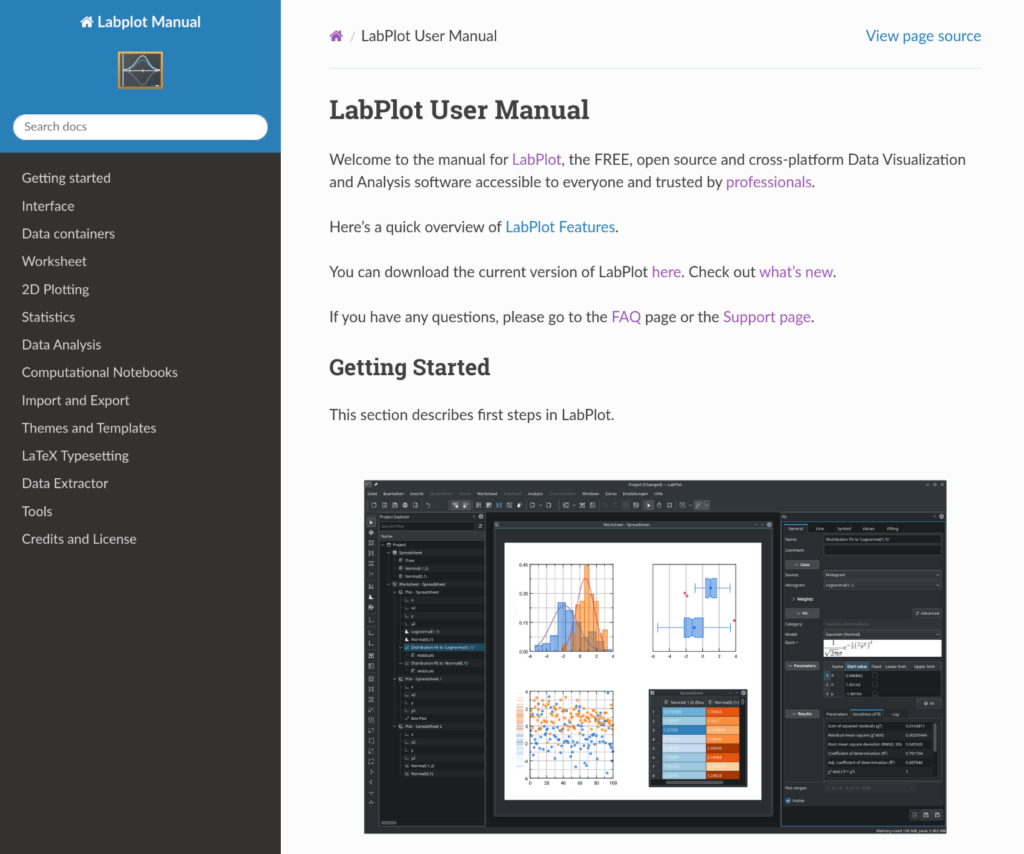

MauiKit 4 Frameworks & Apps

With the previous release, the MauiKit Frameworks and Maui Apps were ported to Qt6; however, some regressions were introduced. Those bugs have now been fixed in this new revision release.

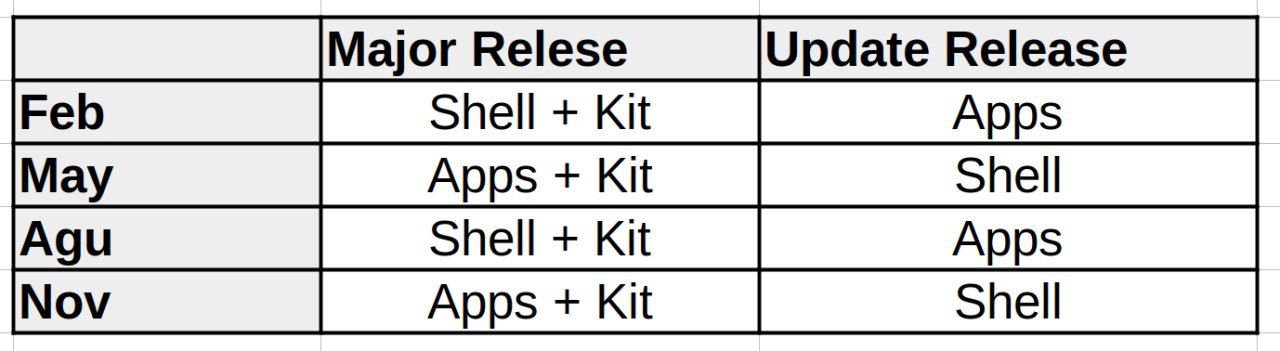

There are currently over 10 frameworks, two of which were recently introduced. For the most part, they have all been documented, and although the KDE Doxygen agent has some minor issues when publishing some parts, you can find the documentation online at https://api.kde.org/mauikit/. If you find missing parts, confusing bits, or sections that could be improved, you can open a ticket in any of the framework repositories and it will be fixed shortly after.

A brief list of the changes and fixes introduced to the frameworks follows:

For MauiKit Controls

- MauiKit no longer depends on MauiKit-Style, so any other QQC2 style can be used with Maui Apps (although other styles are not officially supported).

- The MauiKit documentation has been updated with notes on the new Controls attached properties: https://api.kde.org/mauikit/mauikit/html/classControls.html

- MauiKit fixes the toast notification area. Toast notifications can now take multiple contextual actions.

- The MauiKit Demo app has been updated to showcase all the new control properties

- New controls: TextField, Popup, and DropDownIndicator

- MauiKit fixes the template delegates and the IconItem control

- MauiKit fixes the Page autohide toolbars

- Update style and custom controls to use MauiKit Controls’ attached properties for level, status, title, etc.

- Display keyboard shortcut info in the MenuItems

- Update the MauiKit Handy properties isMobile, isTouch, and hasTransientTouchInput, and fix the lasso selection on touch displays

- Added more resize areas to the BaseWindow type

- Detect system color scheme changes and update accordingly. This also works on systems other than Plasma or Maui, such as GNOME or Android

- The type AppsView has been renamed to SwipeView, and AppViewLoader to SwipeViewLoader

- Update MauiKit-Style to support the MauiKit Controls attached properties and respect the flat property in buttons

- Fix a bug in the GridBrowser scrollbar policy

- Fixes to the action buttons layout in Dialog and PopupPage controls

- Refresh icons when a system icon theme change is detected: a workaround is used on Plasma, and the default Qt API on other systems

For the MauiKit Frameworks

- FileBrowsing fixes bugs in the Tagging components

- Fixes to the models that use dates. Due to a bug in Qt, getting a file's date and time is too slow unless the UTC time zone is specified

- Update FileBrowsing controls to use the latest MauiKit changes

- Added a new control, FavButton, to quickly mark files as favorites using the Tagging component

- Updates and fixes for regressions in the other frameworks

- ImageTools fixes the OCR page

- TextEditor fixes the line numbers implementation.

All of the frameworks are now at version 4.0.1

All of the apps have been reviewed for the regressions previously introduced in the porting to Qt6; those issues have been solved and a few new features have been added, such as:

- Station now allows opening selected links externally

- Index fixes the file previewer and supports quickly tagging files from the previewer

- Vvave fixes the closing of the minimode window

- Update the apps to remove usage of the Qt5Compat effects module

- Fix issues in Fiery, Strike, and Agenda

- Fix multiple item selection not working in the apps

- Clip fixes the video thumbnail previews and the open file dialog

- Implement the floating viewer for Pix, Vvave, Shelf, and Clip for consistency

- Correctly open the Station terminal at the current working directory when invoked externally

- Among many other small details

Index, Vvave, Pix, Nota, Buho, Station, Shelf, Clip, and Communicator versions have been bumped to 4.0.1

Strike and Fiery browser versions have been bumped to 2.0.1

Agenda and Arca versions have been bumped to 1.0.1

As for Bonsai, Era, and other applications still under development, there is no Qt6 port yet.

Maui Shell

Although Maui Shell has been ported to Qt6 and works with the latest MauiKit4, many pending issues are still present and being worked on. The next release will be dedicated fully to Maui Shell and all of its subprojects, such as Maui Settings, Maui Core, CaskServer, etc.

That’s it for now. Until the next blog post, which will come a bit closer to the 4.0.1 stable release.

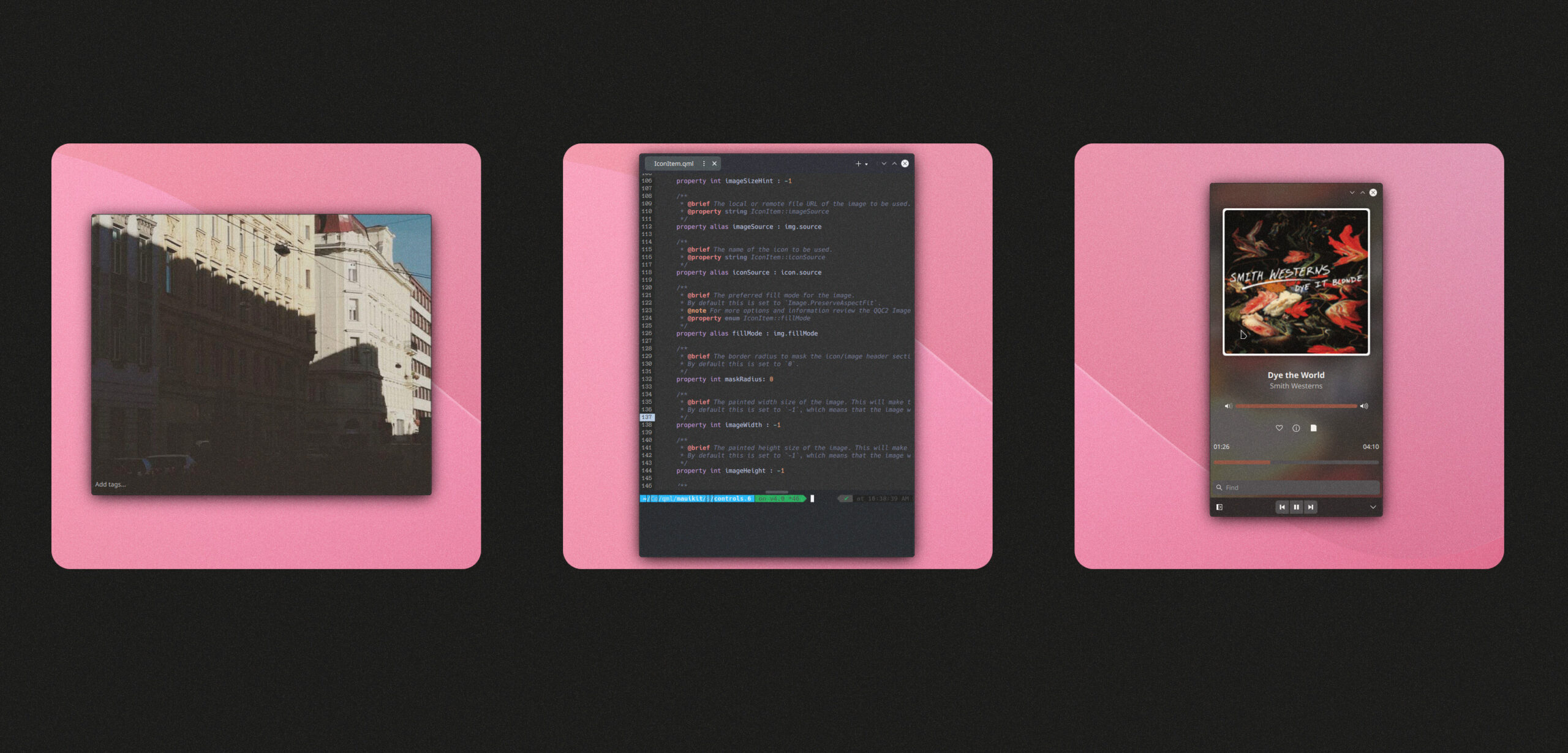

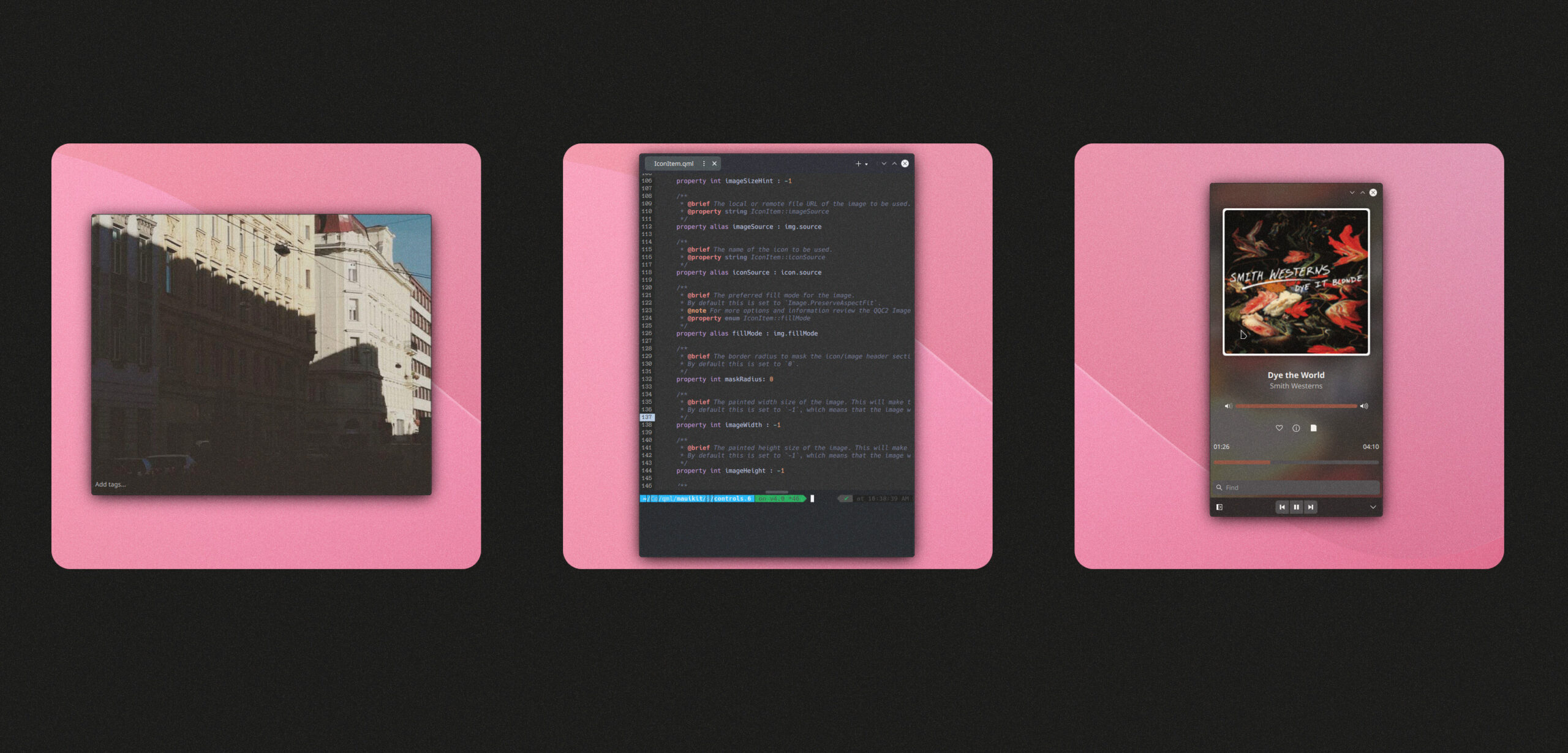

Release schedule

The post Maui Release Briefing #7 appeared first on MauiKit — #UIFramework.
