Monday, 2 February 2026

A recent toot of mine got the response “friends don’t let friends use GPG” which, I suppose, is true enough. It certainly isn’t the attestation-friendly thing to use, and the opsec failures that are so easy with GPG-encrypted mail make it a hazard there. But for some things it’s all we’ve got, and I do like to sign Calamares releases and incidental FreeBSD things. And I am nominally the maintainer of the security/gnupg port on FreeBSD. So gpg.fail notwithstanding, here’s notes on my 2026 GPG key update.

Previously in 2024 and 2025 I wrote down basically the same things:

- Things expire in about 13 months and I’ll have to remember then again,

- You can find my pubkey published on my personal and business sites,

- FreeBSD signature information is used rarely, but is available in the FreeBSD developers OpenPGP keys list,

- Codeberg will have signed commits in the Calamares repository with these keys.

sec rsa4096/0x7FEA3DA6169C77D6 2016-06-11 [SC] [expires: 2027-02-03]

Key fingerprint = 00AC D15E 25A7 9FEE 028B 0EE5 7FEA 3DA6 169C 77D6

uid [ultimate] Adriaan de Groot <groot@kde.org>

uid [ultimate] Adriaan de Groot <adriaan@bionicmutton.org>

uid [ultimate] Adriaan de Groot <adridg@freebsd.org>

uid [ultimate] Adriaan de Groot <adriaan@commonscaretakers.com>

ssb ed25519/0x55734316C0AE465B 2025-03-04 [S] [expires: 2026-08-26]

ssb cv25519/0x064A54E8D698F287 2025-03-04 [E] [expires: 2026-08-26]

ssb ed25519/0x14B6CC381BC256D6 2026-02-03 [S] [expires: 2027-02-28]

ssb cv25519/0xD716006BBA771051 2026-02-03 [E] [expires: 2027-02-28]

Hello everyone!🎉

Welcome to my first blog!

I am Sayandeep Dutta, an undergraduate at SRM University. I learned about the awesome mentorship program, Season of KDE.

Getting Started

I started contributing to Mankala in December 2025. Got to know more about the project, interacted with mentors, and started with some small merge requests. I really like contributing to Mankala. Mankala has been a very interesting game, and the guidance from the community is really good.

Week 1: Development & Design

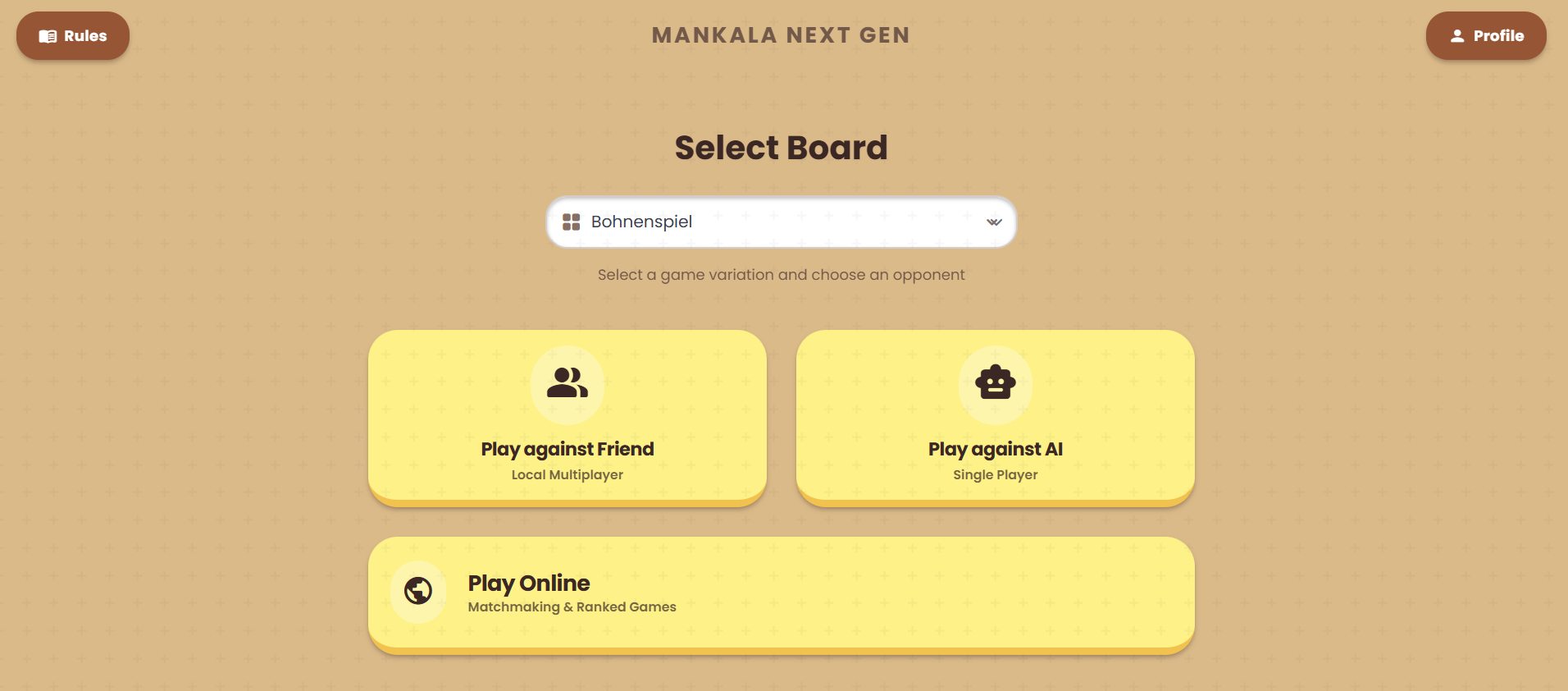

In my first week, I set up Mankala on my Ubuntu machine and started with the development. I created mockups for the proposed UI changes in MankalaNextGen. The mockups mainly cover the main game page, a login page, a home page, and some other pages in the game, all of which I designed in Figma.

Progress So Far

I had created a merge request updating the MainMenu. Well, there are a couple of pages and components we need to work on, and the Main Menu is the most essential one to start with.

What's next?

In the upcoming week, I plan to:

- Complete the rest of the UI updates.

- Start implementing the new theme of MankalaNextGen.

Thanks for reading. Stay tuned for more updates. 👀

Building Volumetric Light Shafts with Real IES Profiles

You know that feeling when the street lamps on a hazy evening cast these particular patterns of light? I've always been fascinated by those effects, so I decided to build a real-time volumetric lighting system using Qt 6.11's graphics capabilities, leveraging its QML shader integration and 3D rendering pipeline to recreate them with actual IES profiles.

Streetlight patterns in the evening haze

Sunday, 1 February 2026

Hi all! I'm CJ, and I'm participating in Season of KDE 2026 by automating portions of the data collection for the KDE promo team. This post is an update on the work I've done in the first week of SoK.

My mentor gave me a light task to help me get set up and familiarize myself with the tools I'll be using for the rest of the project. The task was to automate the population of a spreadsheet that tracks follower and post counts for X (formerly known as Twitter), Mastodon, BlueSky, and Threads.

The spreadsheet takes the follower and post counts of some of KDE's social media platforms and makes calculations based off that data. Important things to note:

- data from the sites is entered manually

- there are a lot of styles and formulas in the sheet

Fetching Account Data

Grabbing data was mostly no trouble. Mastodon and BlueSky were especially easy to work with. Each has a public, well-documented API that lets people collect all kinds of data in human-readable formats. One endpoint on each service returns account information, including follower and post counts, for a given account as neat JSON (BlueSky, Mastodon). All it took were GET requests to these endpoints and it was smooth sailing.
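As a rough illustration of those GET requests, here is a minimal sketch using only the Python standard library. It assumes Mastodon's public `/api/v1/accounts/lookup` endpoint and Bluesky's public `app.bsky.actor.getProfile` endpoint; the post does not say which endpoints were actually used. The instance and handle names in the usage note are placeholders, and the helper names are made up for this example.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def mastodon_lookup_url(instance: str, acct: str) -> str:
    """Build the URL for Mastodon's public account lookup endpoint."""
    return f"https://{instance}/api/v1/accounts/lookup?" + urlencode({"acct": acct})


def bluesky_profile_url(handle: str) -> str:
    """Build the URL for Bluesky's public profile endpoint."""
    base = "https://public.api.bsky.app/xrpc/app.bsky.actor.getProfile?"
    return base + urlencode({"actor": handle})


def parse_mastodon(payload: dict) -> dict:
    """Pick follower and post counts out of a Mastodon account object."""
    return {"followers": payload["followers_count"], "posts": payload["statuses_count"]}


def parse_bluesky(payload: dict) -> dict:
    """Pick follower and post counts out of a Bluesky profile object."""
    return {"followers": payload["followersCount"], "posts": payload["postsCount"]}


def fetch_json(url: str, timeout: float = 10.0) -> dict:
    """Perform a plain GET request and decode the JSON body."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)
```

With network access, something like `parse_mastodon(fetch_json(mastodon_lookup_url("example.social", "someaccount")))` would return a small dict of counts. Keeping the parsing separate from the fetching means the parsing can be checked against saved payloads without touching the network.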

X and Threads proved a bit more finicky. Both of their APIs limit access to much of their functionality, which usually means webscraping is the most accessible way to grab public account data. Threads shows a user's follower count directly on the account's landing page, so a GET request to the URL of KDE's Threads account made it easy to grab. The problem is that there seems to be no direct way to grab the post count, either through their API or with webscraping methods. For now, we've chosen to leave that be and circle back when I explore Threads more in the future. X presents a similar problem, but there is an open-source frontend alternative named Nitter, instances of which lay all the stats information out in the open. The reliability of this method depends on public Nitter instances being available, so it may be worth coming back around to this in a later part of the project, but for now it's a viable solution for getting follower and post counts.

Inputting the Data Into the Spreadsheet

With the data all fetched, all that was left was to add it to the ODF spreadsheet. I had this down as the easy part of the task, but in the end it wasn't so simple. I found two major Python packages that can interpret and write ODF files: Pandas and pyexcel. Both of these have no problem reading data from the files, but when it comes to saving they don't preserve some elements of the spreadsheet. In the end we went the simple route, which is to save the data to a separate ODF file using one of the Python-ODF interfaces and import that into the data sheet. This took a little finagling with formulas to get things working without popping errors into cells in the sheet, but in the end we have an output ODF spreadsheet file containing the required data, and the original spreadsheet with all the calculations pulling that data into its formulas. That removes any need for a human to interface with this portion of data collection.
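The "separate ODF file" approach could look roughly like the sketch below. It is an assumption-laden illustration, not the project's actual script: it assumes pandas with the `odfpy` package installed as the writer, and the column names, row layout, and function names are invented for the example.

```python
from datetime import date


def build_rows(counts: dict, day: date) -> list:
    """Flatten {platform: {"followers": n, "posts": n}} into one row per platform.

    Missing values (for example the Threads post count) are kept as None.
    """
    return [
        {
            "date": day.isoformat(),
            "platform": platform,
            "followers": stats.get("followers"),
            "posts": stats.get("posts"),
        }
        for platform, stats in sorted(counts.items())
    ]


def write_rows_to_ods(rows: list, path: str) -> None:
    """Write the rows to a standalone .ods file (needs pandas and odfpy)."""
    # Imported here so build_rows() stays usable without pandas installed.
    import pandas as pd

    pd.DataFrame(rows).to_excel(path, engine="odf", index=False)
```

Because this output file only ever holds raw values, it can be overwritten on every run, while the styled spreadsheet with the formulas is never written by Python and so keeps its formatting.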

Learned Lessons

I feel like this week's task was a great first step into data collection automation. It was challenging without being too difficult to make progress on and forced me to explore different avenues for gathering data. On the confidence side, getting a (mostly) successful task out the gate helped me feel more comfortable with the tools and processes that will likely appear throughout the entirety of my SoK experience. Things will scale up from here on out though so I'm also keeping myself in check.

From what I understand, some of the most difficult parts of automated data collection come from having to interface with JavaScript and from not getting banned, neither of which I've come face-to-face with in any substantial capacity so far. Along with that, I've faced unexpected problems, such as the issue with modifying ODF files and the fact that some websites don't play as well with certain browsers, which I don't have an easy way to test for yet. With these in mind, I'm trying to tread lightly and be diligent with research and good practice as I continue on.

Saturday, 31 January 2026

Welcome to a new issue of This Week in Plasma!

This week we reached that part of every Plasma release cycle where the bug fixes and polish for the upcoming release are still coming in hot and heavy, but people have also started to land their changes for the next release. So there’s a bit of both here!

Everyone’s working really hard to make Plasma 6.6 a high-quality release. It’s a great time to help out in one way or another, be it testing the 6.6 beta release and reporting bugs, or triaging those bugs as they come in, or fixing them!

Notable New Features

Plasma 6.7.0

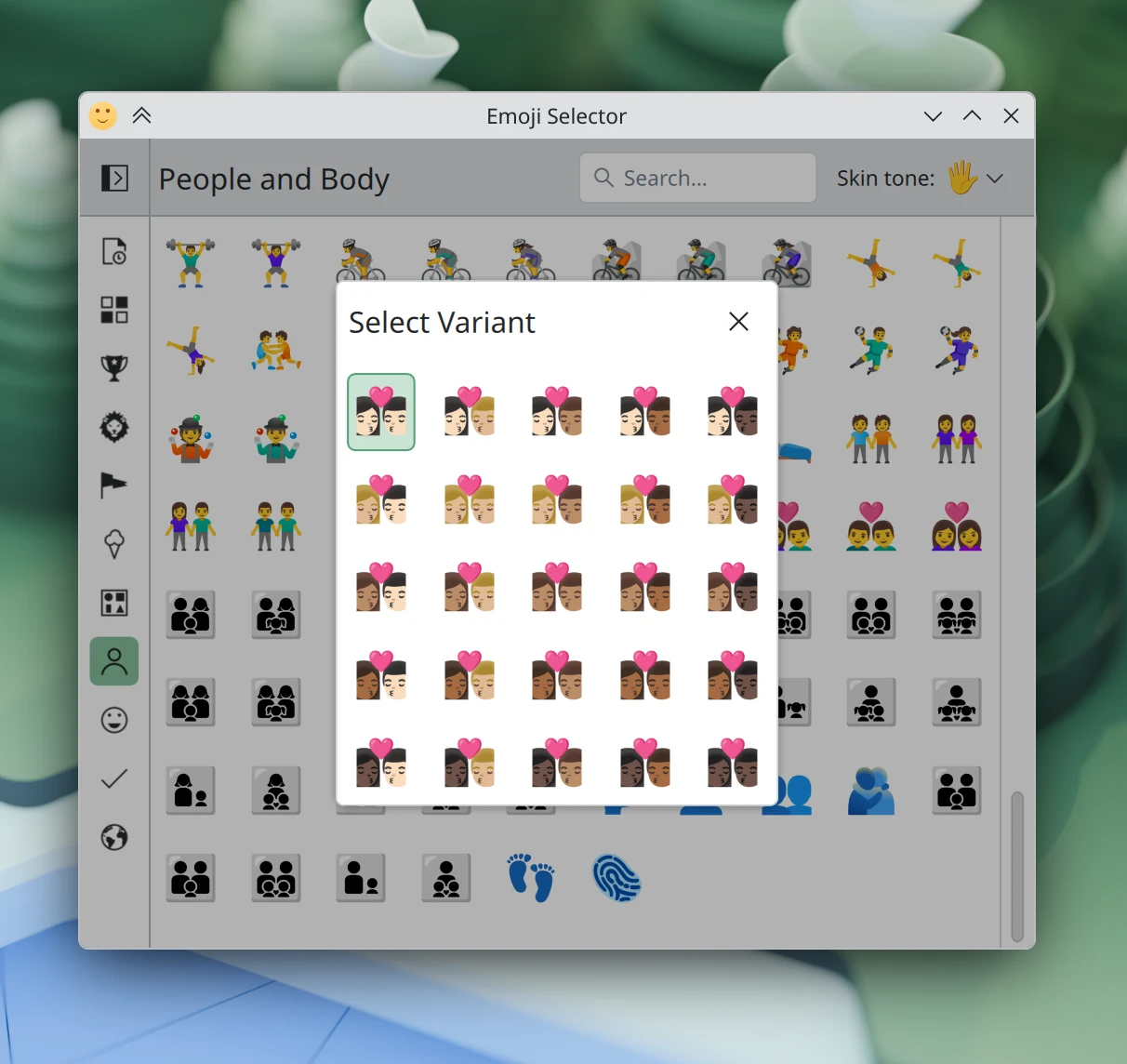

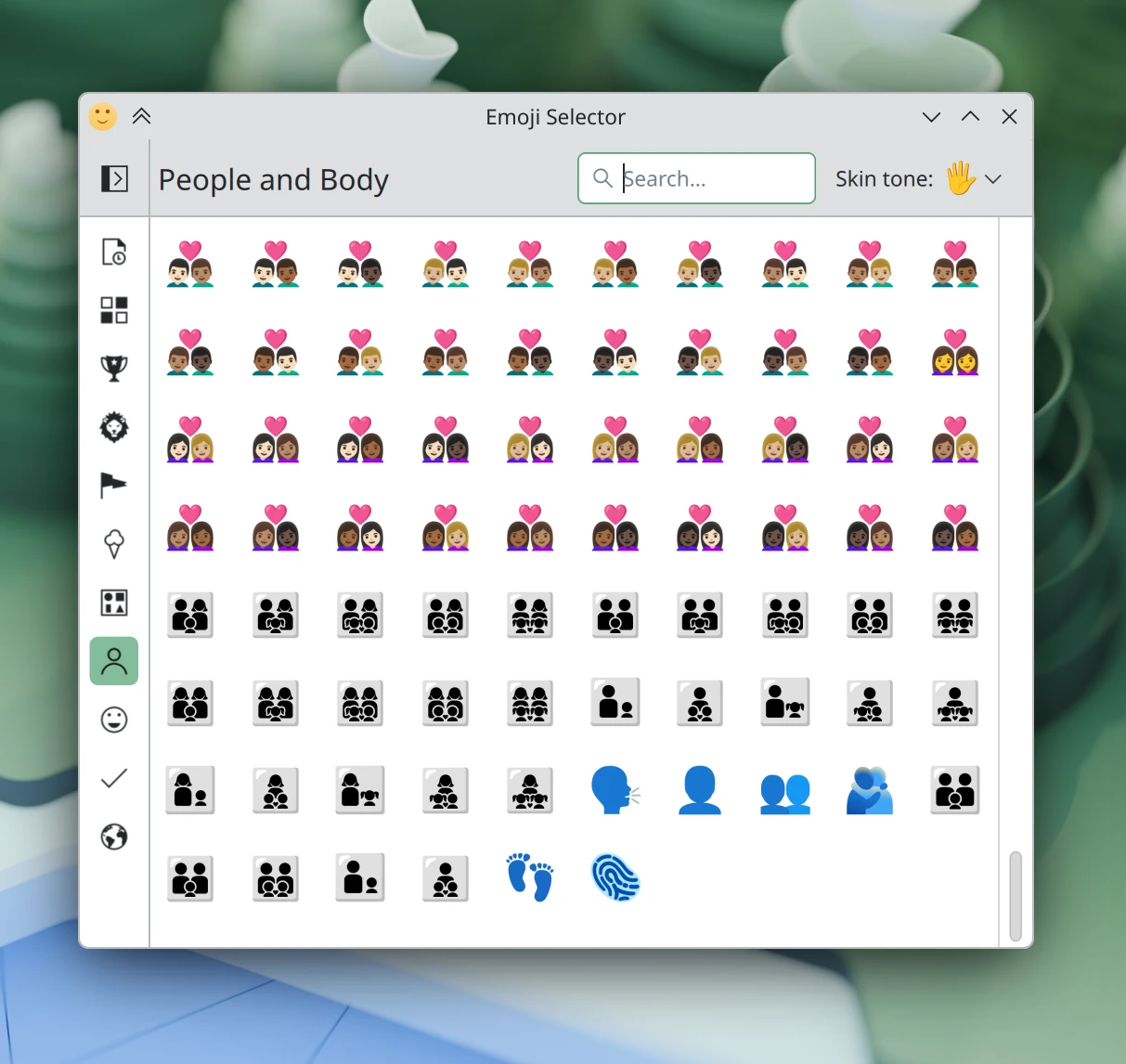

The Emoji Selector window now lets you choose mixed skin tone groupings of emojis using a nice little user-friendly pop-up dialog. (Tobias Ozór, plasma-desktop MR #3426)

You’re now able to set a global keyboard shortcut to clear the notification history. (Taras Oleksyn, KDE Bugzilla #408995)

Notable UI Improvements

Plasma 6.6.0

In the Application Dashboard widget, keyboard focus no longer gets stolen by selectable items that happen to be right under the pointer at the moment the widget is opened. (Christoph Wolk, KDE Bugzilla #510777)

Breeze-themed checkboxes now always have an opaque background, which resolves an issue where their unchecked versions could be hard to see when overlaid on top of images. (David Redondo, KDE Bugzilla #511751)

Plasma 6.7.0

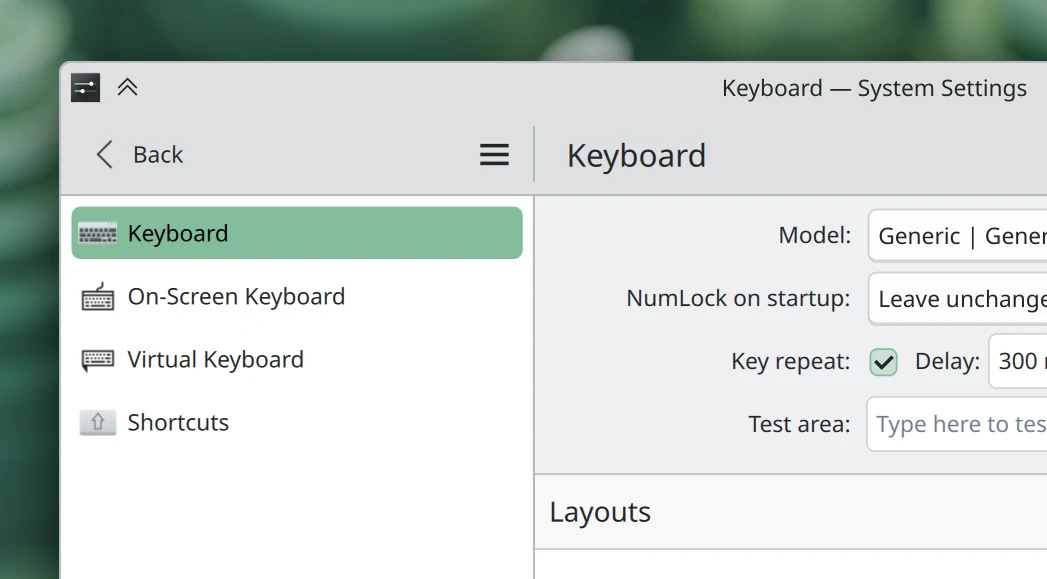

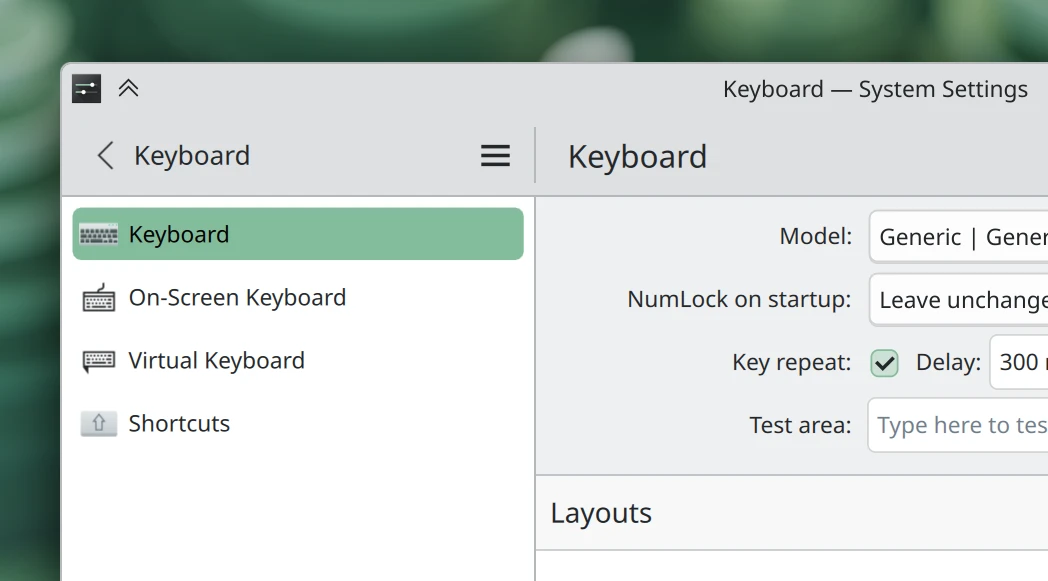

System Settings’ subcategory back button is now more of a traditional back button, eliminating a source of redundant page titles. There are still too many, but this reduces the count by one! (Nate Graham, systemsettings MR #390)

On System Settings’ Notifications page, you can now always preview a notification sound even if sound is currently disabled for that notification. (Thomas Moerschell, plasma-workspace MR #6214)

Plasma’s network settings now expose additional L2TP VPN options that were previously unavailable. (Mickaël Thomas, plasma-nm MR #480)

The old Air Plasma style (a lighter take on the original Oxygen style) is back, with fixes and improvements, too! (Filip Fila, oxygen MR #77)

The Oxygen cursor theme received a small visual fix to improve the appearance of the busy cursor. (Filip Fila, oxygen MR #89)

The cursor theme settings now show more accurate previews, which fixes issues like wobbling cursors, and makes the preview grid feel more stable. (Kai Uwe Broulik, plasma-workspace MR #6240)

System Settings’ various theme chooser pages are now consistent about whether you can delete the active theme (no), and also let you know why certain themes can’t be deleted: because they were installed by the OS, not the “get new stuff” system. (Sam Crawford, plasma-workspace MR #6222)

The Weather Report widget now shows a progress indicator while its popup is open but still loading the weather forecast from the server. (Bogdan Onofriichuk, kdeplasma-addons MR #993)

Frameworks 6.23

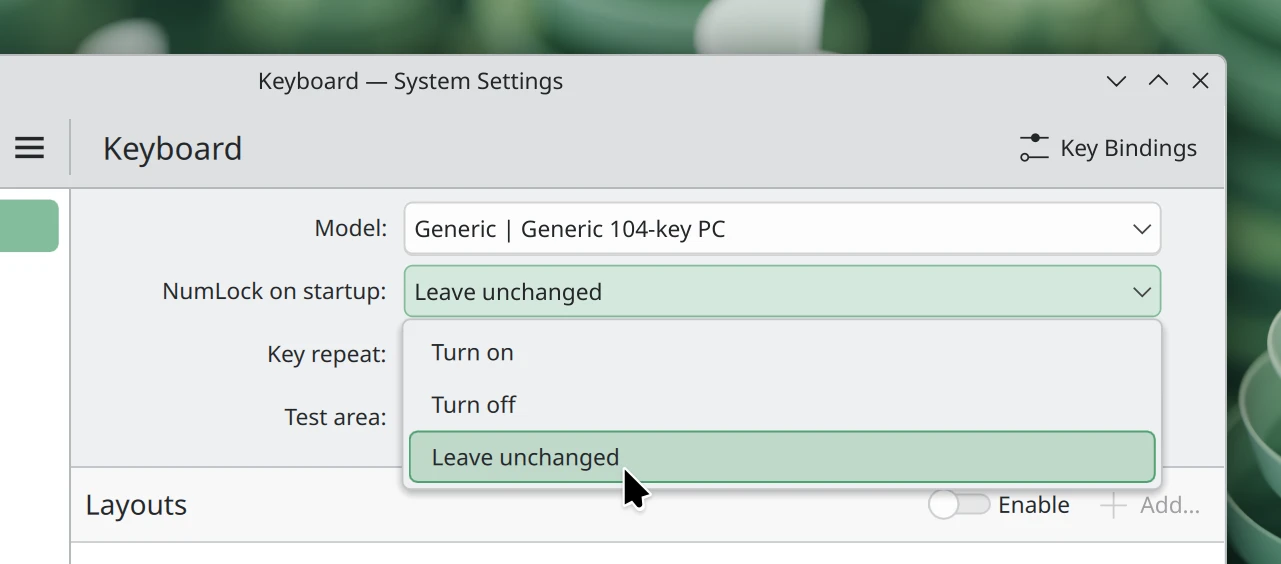

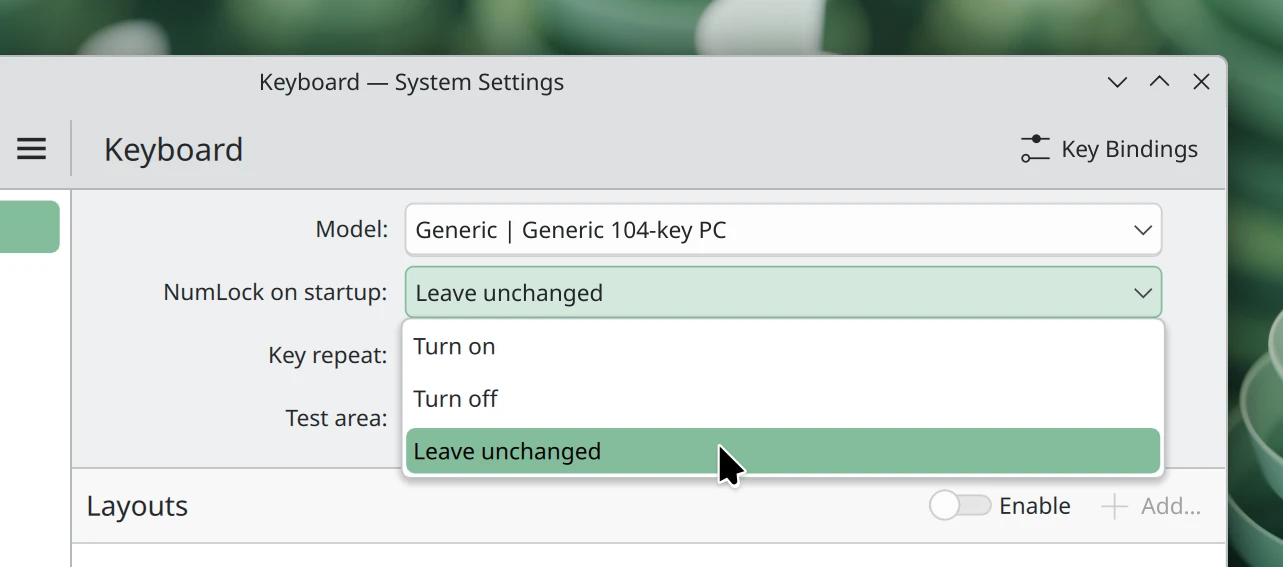

Combobox pop-ups throughout QtQuick-based apps and System Settings pages now use the standard menu styling, rather than a custom style. (Nate Graham, qqc2-desktop-style MR #497)

Added styling for the new upstream SearchField component that was recently added to QtQuick itself. Now it looks like the KDE version that we created years ago before the Qt version existed. (Manuel Alcaraz Zambrano, qqc2-desktop-style MR #500)

Notable Bug Fixes

Plasma 6.5.6

Fixed an issue on operating systems with asserts turned on (like KDE neon) that could sometimes crash Plasma when you launched apps. (David Edmundson, KDE Bugzilla #513312)

Plasma 6.6.0

Fixed a rare issue that could leave KWin without control of the mouse and keyboard at login. (Vlad Zahorodnii, KDE Bugzilla #511611)

Fixed a case where Plasma could crash when moving around a Weather Report widget on the desktop. (Bogdan Onofriichuk, KDE Bugzilla #514200)

Fixed an issue in Discover when launched with Snap support that could prevent it being launched again after previously being closed. (Aleix Pol Gonzalez, KDE Bugzilla #507217)

Fixed a case where the Plasma Bluetooth pairing wizard would fail to pair devices. (David Edmundson, KDE Bugzilla #495615)

The logout screen no longer fails to take focus if you raised the focus stealing level to “Medium” or higher, and manually de-focusing it no longer breaks your ability to re-focus it. (Vlad Zahorodnii, KDE Bugzilla #514204 and Aleksey Rochev, KDE Bugzilla #511258)

The custom size ruler for Plasma panels no longer sometimes appears on the wrong screen of a multi-screen setup. (Vlad Zahorodnii, plasma-workspace MR #6215)

The “X notifications were received while Do Not Disturb was active” notification is no longer inappropriately saved to the notification history. (Kai Uwe Broulik, plasma-workspace MR #6223)

Fixed two semi-related issues with widgets that made them not let you pick the same color after deleting it, or configure the same mouse action after deleting it. (Christoph Wolk, KDE Bugzilla #514983 and KDE Bugzilla #449389)

The global menu on a newly-cloned panel on a different screen now realizes immediately that it’s on a new screen. (David Redondo, KDE Bugzilla #514907)

Discover’s notification about ongoing updates no longer displays a nonsensically large number of updates under certain circumstances. (Harald Sitter, KDE Bugzilla #513676)

Plasma Browser Integration no longer exports Microsoft Teams calls as controllable media sources; it always omitted them in the past, but Microsoft changed the URL again, so we had to adapt to that once more. (Kai Uwe Broulik, KDE Bugzilla #514870)

You can now move focus from the Application Dashboard widget’s search field using arrow keys, and also type accented characters using dead keys or the compose key as the first character in a search while the search field isn’t explicitly focused. (Christoph Wolk, KDE Bugzilla #511146 and KDE Bugzilla #510871)

Animated wallpapers explicitly set for the lock screen now play their animation as expected. (Taras Oleksyn, KDE Bugzilla #460910)

Undoing the deletion of a panel widget no longer sometimes positions it far from where it was before. (Marco Martin, KDE Bugzilla #515107)

Ending the renaming of an item on the desktop by clicking on another item no longer starts a drag-selection for no good reason. (Akseli Lahtinen, KDE Bugzilla #514954)

The “Defaults” button on System Settings’ Accessibility page now works properly to reset non-default colorblindness modes. (Andrew Gigena, KDE Bugzilla #513489)

Plasma 6.7.0

Fixed a very nasty KWin bug that could, under certain rare circumstances relating to intensive Alt+Tab usage, cause the screen to go black. This change may be backported to Plasma 6.6 if it’s deemed safe enough. (Xaver Hugl, KDE Bugzilla #514828)

Frameworks 6.23

Fixed being unable to paste clipboard entries as text that were copied from a LibreOffice Calc spreadsheet cell and then re-arranged in the clipboard history list. (Alexey Rochev, KDE Bugzilla #513701)

Notable in Performance & Technical

Plasma 6.6.0

KRDP (the KDE library for remote desktop support) no longer requires systemd. (David Edmundson, krdp MR #141)

Plasma 6.7.0

KWin now supports the ext-background-effect-v1 Wayland protocol. This adds support for standardized background effects like blur, opening the door for visual consistency across apps using these effects. (Xaver Hugl, KWin MR #4890)

How You Can Help

KDE has become important in the world, and your time and contributions have helped us get there. As we grow, we need your support to keep KDE sustainable.

Would you like to help put together this weekly report? Introduce yourself in the Matrix room and join the team!

Beyond that, you can help KDE by directly getting involved in any other projects. Donating time is actually more impactful than donating money. Each contributor makes a huge difference in KDE — you are not a number or a cog in a machine! You don’t have to be a programmer, either; many other opportunities exist.

You can also help out by making a donation! This helps cover operational costs, salaries, travel expenses for contributors, and in general just keep KDE bringing Free Software to the world.

To get a new Plasma feature or a bugfix mentioned here

Push a commit to the relevant merge request on invent.kde.org.

Friday, 30 January 2026

Continuing previous efforts to update the “secure passwords” story of the Plasma desktop, I’ve done some integration work between Plasma and oo7.

Oo7 is a relatively recent SecretService provider written in Rust; it’s very nice, lightweight and cross-desktop. That makes it very interesting for us to support as a first-class citizen.

In the end, we want to completely replace the old KWallet system with something based on SecretService, and have all our applications migrated transparently along with the user data, if possible with a cross-desktop backend implementation.

We also want it to be as transparent as possible for the end user, not having any complicated first time setup, or dialogs that ask for a manual unlock when not needed. For the user, the whole system should be just invisible, except when looking up their passwords or when client applications add passwords to it themselves.

As a first step, several months ago we built a transparent translation layer for the KWallet DBus interface, so the “KWallet” service is now just a translation layer between the KWallet API and the SecretService one.

The old kwalletd service instead became “ksecretd”, which provides only the SecretService interface, and that’s what we use by default now. However, it can be configured to use a different provider, so it can already be used with gnome-keyring or KeepassXC if the user wishes. In that case the user data will be migrated the first time.

I’ve also written a new application called KeepSecret. It is centered around SecretService, has a much more modern UI than KWalletManager, and works much better on mobile, so we can have a modern password manager on Plasma Mobile too.

The last thing that we did so far is integration with a new SecretService provider on the block: oo7.

If oo7 is just another SecretService provider, won’t it “just work” in Plasma? Not quite, but that’s actually a good thing. Oo7 wants to properly integrate with the environment it runs in, so we need to build that integration.

When you use KWallet, KeepassXC, or gnome-keyring directly, they create their own password dialogs using their own toolkits and styles, and so can look a bit alien when run in a different environment than the one they were designed for.

This is not ideal for evaluating options for a possible future replacement of KWallet (which piece by piece we are working towards), as we want the UI part completely integrated with the Plasma desktop.

Instead, oo7 is built on the assumption that the environment it runs on will provide its own dialogs, a bit like the portal system for services like screen sharing and file picking.

This requires active integration both on the Plasma part and the oo7 part, but on the other hand it will look native on Plasma, Gnome, and any other platform that implements the integration hooks. And that’s with the added benefit of sharing the password storage if someone every now and then goes desktop environment hopping.

On the Plasma side we decided to employ a very small daemon which is launched only on demand with dbus activation. It will create the needed system dialogs, pass the user input back to oo7 and then quit when not needed anymore, so it won’t constantly use up system resources.

This is already available in Plasma 6.6: the code for it is here. It’s designed first for oo7, but it can be used by any daemon which wishes to provide native password dialogs, so we might start to use it also from other components in the future, either other SecretService providers or completely different things which have similar needs like Polkit.

On the oo7 part, Harald implemented the integration with its dbus interface. With oo7 from the main branch (or its next release), it can be used in place of KWallet, with all old applications that still use the KWallet API going through its backend as well and with all data migrated to it, and it will show native UI for its password dialogs… all the pieces of the puzzle are slowly falling together.

Needless to say this is still at an experimental stage, but it’s an important milestone in getting more independent from the aging KWallet infrastructure.

Let’s go for my web review for the week 2026-05.

Neocities Is Blocked by Bing

Tags: tech, web, search, microsoft, vendor-lockin

Huh? What’s going on there? I don’t see why they would exclude this domain completely, it makes no sense.

https://blog.neocities.org/blog/2026/01/27/bing-block

The Enclosure feedback loop

Tags: tech, ai, machine-learning, gpt, copilot, enclosure, vendor-lockin

Interesting point. As we see the collapse of public forums due to the usage of AI chatbots, we’re in fact witnessing a large enclosure movement. And it’ll reinforce itself as the vendors are training on the chat sessions. What used to be in public will be hidden.

https://michiel.buddingh.eu/enclosure-feedback-loop

No, Cloudflare’s Matrix server isn’t an earnest project

Tags: tech, ai, machine-learning, copilot, matrix, cloudflare, security, failure

Very in-depth review of the mess of a Matrix home server vibe coded at Cloudflare… all the way to the blog announcing it. Unsurprisingly this didn’t go well and they had to cover their tracks several times. The response from the Matrix Foundation is a bit underwhelming: it’s one thing to be welcoming, it’s another to turn a blind eye to such obvious failures. This doesn’t reflect well on either Cloudflare or the Matrix Foundation, I’m afraid.

https://nexy.blog/2026/01/28/cf-matrix-workers/

I Was Right About ATProto Key Management

Tags: tech, social-media, bluesky, decentralized

Indeed, it just can’t be called decentralized…

https://notes.nora.codes/atproto-again/

Microsoft Gave FBI BitLocker Encryption Keys, Exposing Privacy Flaw

Tags: tech, microsoft, security, privacy

Are we surprised? Of course not… As soon as you back up the keys on someone else’s server, BitLocker can’t do anything to ensure privacy.

ICE takes aim at data held by advertising and tech firms

Tags: tech, advertisement, surveillance, politics, privacy

What a surprise… No really who would have expected this could happen? I heard so many times “I have nothing to hide” over the years. When something like this happens you suddenly wish you were a bit more careful with your privacy and the privacy of the people around you.

https://www.theregister.com/2026/01/27/ice_data_advertising_tech_firms/

The Rise of Sanityware

Tags: tech, privacy, surveillance, attention-economy

Those are indeed getting more popular. In a way that’s unfortunate, we shouldn’t need them so much.

https://thatshubham.com/blog/2026

Blogs Are Back

Tags: tech, rss, blog, tools

Looks like a nice tool to help people to get into RSS.

Places to Telnet

Tags: tech, networking, funny

Telnet is not dead! We still have fun places to turn telnet clients to.

https://telnet.org/htm/places.htm

10 Years of Wasm: A Retrospective

Tags: tech, web, standard, webassembly, history

Nice retelling of the story behind WebAssembly.

https://bytecodealliance.org/articles/ten-years-of-webassembly-a-retrospective

cppstat - C and C++ Compiler Support Status

Tags: tech, c++, standard, tools

Looks like an interesting tool to follow availability of C++ features in compilers.

Why I still teach OpenGL ES 3.0

Tags: tech, graphics, teaching, learning

Good point, it is old but portable and carries the important concepts. This is a good teaching vehicle. Even though it’s unlikely you’d use it in the wild much longer.

https://eliasfarhan.ch/jekyll/update/2026/01/27/why-i-teach-opengles.html

SPAs Are a Performance Dead End

Tags: tech, web, frontend, performance

It’s a solution for a problem long gone. SPAs should be the exception for highly interactive applications not the norm. Most web applications don’t need to be a SPA and would be better off without being one.

https://www.yegor256.com/2026/01/25/spa-vs-performance.html

Functional Core, Imperative Shell

Tags: tech, functional, architecture, tests, tdd

Clearly not a style which works for any and every application. Still, it’s definitely a good thing to aim towards such an architecture. It brings really nice properties in terms of testability and safety.

https://www.destroyallsoftware.com/screencasts/catalog/functional-core-imperative-shell

How I estimate work as a staff software engineer

Tags: tech, estimates, decision-making

The approach is interesting. I wouldn’t assume it’s doable in every context though. What’s sure is that you need to embrace the uncertainty and agree to go through the exercise. Estimates are needed to make decisions and help teams to sync.

https://www.seangoedecke.com/how-i-estimate-work/

Is It Worth It?

Tags: tech, failure, organisation, estimates

Solving paper cuts pays off faster than you’d think.

https://griffin.com/blog/is-it-worth-it

Things I’ve learned in my 10 years as an engineering manager

Tags: tech, engineering, management, leadership

Nice advice, there’s a lot of variation on the role. And yet, some things seem to always be there.

https://www.jampa.dev/p/lessons-learned-after-10-years-as

Because coordination is expensive

Tags: tech, team, organisation, communication, complexity

The complexity and cost in organisations is indeed mostly about coordination. This is a difficult problem and largely unsolved in fact.

https://surfingcomplexity.blog/2026/01/24/because-coordination-is-expensive/

Douglas Adams on the English–American cultural divide over “heroes”

Tags: culture

The contrast is indeed very stark. I have my own bias and fondness for heroic failures.

https://shreevatsa.net/post/douglas-adams-cultural-divide/

Bye for now!

It's that time of year again; another Qt World Summit is approaching! We are looking for speakers, collaborators, and industry thought leaders to share their expertise and insights at the upcoming Qt World Summit 2026 on October 27-28, in Berlin, Germany.

*Please note we are looking for live talks only.

@shibe_13:matrix.org

[ade]
