Friday, 30 January 2026

In the two months since the previous report, KDE Itinerary got new vector-based map views and manual control over reservation cancellations, and there has been more work on reverse engineering proprietary train tickets, among many other things.

New Features

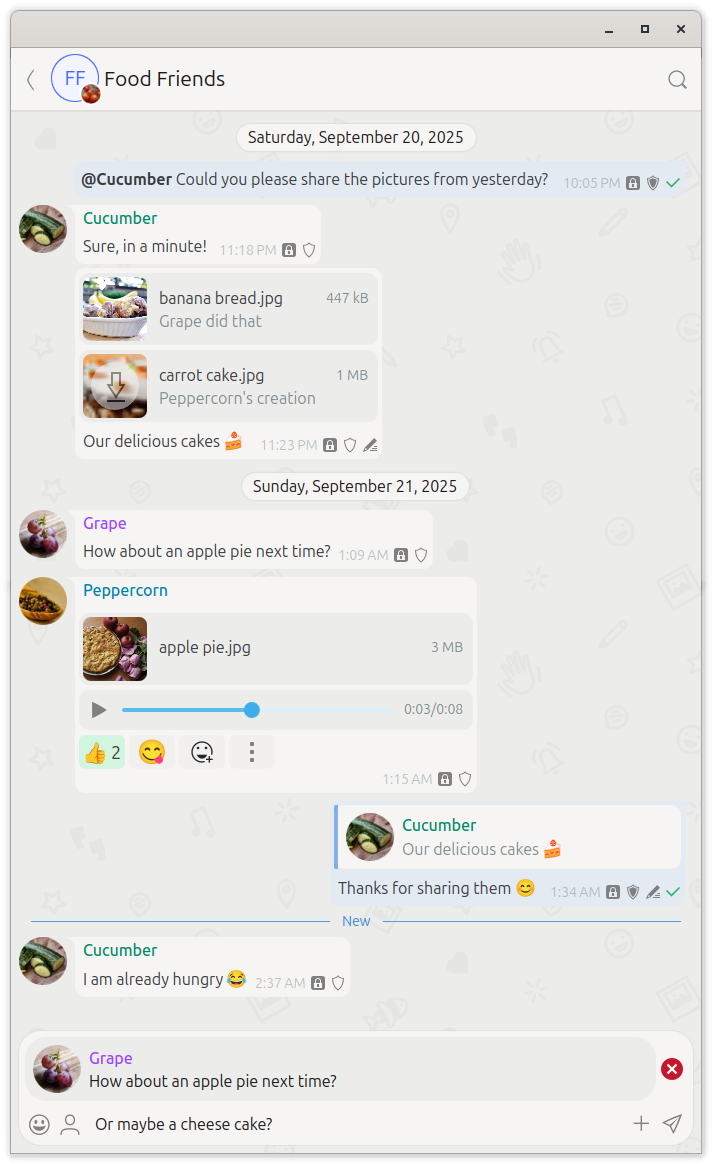

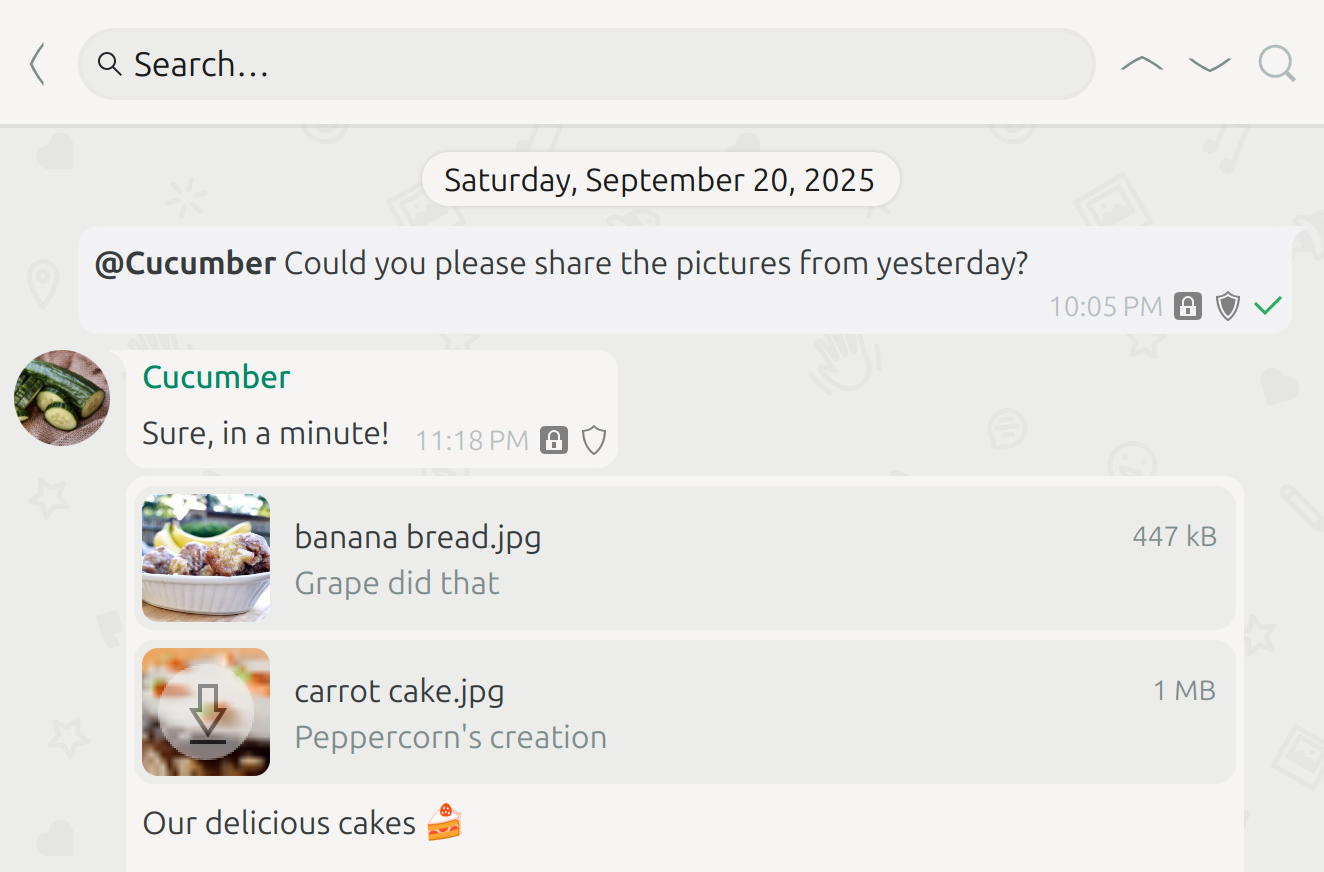

Vector maps

The map views can now use a MapLibre-based vector map instead of the previous raster image map. This gives us smoother and continuous zoom, and should also allow localized labels eventually, something particularly important in regions where you can’t even read the local script.

This is enabled in our nightly APK and Flatpak builds; other distribution channels might not have this enabled yet.

Reservation cancellation

It’s now possible to manually mark reservations as canceled, rather than just importing that information from documents. This is useful for keeping unused reservations around without having them considered for transfers, in the maps or for statistics, e.g. because you still need them for refund claims or travel cost reimbursement.

Canceled reservations are now also clearly marked as such in the timeline view again, something that had gotten lost in the last timeline restyling.

Infrastructure Work

Ticket barcode reverse engineering

Ticket barcodes can contain machine-readable information about the trip and can therefore be very useful for importing tickets into Itinerary. While ticket barcodes on international trips commonly use openly standardized formats for interoperability between different providers, this is unfortunately often not the case for domestic tickets. For those we have to resort to reverse engineering.

Fortunately though, there are more people interested in what’s in those barcodes, e.g. from a privacy or security perspective, and there’s increasing collaboration.

Specifically, the understanding of the Hungarian domestic ticket format has significantly increased recently, as the result of three different parties exchanging their observations and evaluating their respective findings against each other’s ticket sample pools. A few magic numbers for product/tariff/discount codes are yet to be figured out, but there’s now a pretty complete Kaitai spec able to decode versions 2 to 6 of the current format, e.g. using the Kaitai Web IDE.

Many of the discoveries made there have resulted in improvements and fixes for MÁV and Volánbusz tickets in Itinerary.

Documentation on ticket barcode formats is also slowly being consolidated in the Train Ticket Wiki, where available also in appropriate machine-readable formats (Kaitai, Protobuf, ASN.1, etc).

Reverse geocoding for location codes

The work on automatic geocoding for train and bus reservations covered in the last report has been further expanded:

- Hotel and restaurant reservations are now also automatically geocoded. This makes automatic transfers work in more cases.

- Reverse geocoding on imported tickets only containing train station or airport codes is now also supported. This for example results in proper station names when scanning a train ticket barcode that only contains station codes.
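To illustrate the second point, here is a minimal sketch of filling in a missing name from a station or airport code. It is not Itinerary's actual implementation: the `Place` type, the `resolve_name` function and the tiny `KNOWN_CODES` table are all hypothetical, and a real lookup would be backed by a proper station and airport database.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Place:
    """Hypothetical stand-in for a location as found in a scanned ticket."""
    code: str
    name: Optional[str] = None


# Illustrative sample entries only (IATA airport codes); not a real data source.
KNOWN_CODES = {
    "BRU": "Brussels Airport",
    "GRZ": "Graz Airport",
}


def resolve_name(place: Place) -> Place:
    """Fill in a human-readable name when only a code is known."""
    if place.name:
        # Never overwrite a name that came with the ticket.
        return place
    name = KNOWN_CODES.get(place.code.upper())
    # Unknown codes are passed through unchanged rather than guessed.
    return replace(place, name=name) if name else place
```

The sketch deliberately leaves existing names and unknown codes untouched, so a failed lookup never makes the imported data worse.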

Events

There were two recent events that covered upcoming changes to Transitous, the public transport routing service used by Itinerary:

- 39C3 at the end of 2025.

- The Transitous Hack Weekend a few weeks ago.

And more opportunities to meet the Itinerary and Transitous teams are coming up:

- FOSDEM on Jan 31-Feb 1 in Brussels, in particular with the Railways and Open Transport track.

- The next bi-annual OSM Hack Weekend in Karlsruhe on Feb 21-22.

- FOSSGIS-Konferenz on Mar 25-28 in Göttingen. I’ll talk about our OSM indoor router there.

- The KDE Mega Sprint on Apr 6-11 in Graz right before Grazer Linux Tage will probably also include some Itinerary hacking.

Fixes & Improvements

Travel document extractor

- Added or improved travel document extractors for Booking.com, České dráhy, Cytric, Deutsche Bahn, gomus, KLM, MÁV and Volánbusz.

- Fixed a crash on referenced but non-existent PDF image masks (bug 513945).

- Handle RSP6 decoding failures properly, fixing a few fields containing “null” in that case.

- Added support for resolving Hungarian railway station codes.

- Added support for local SNCF pass barcodes in some regions of France.

All of this has been made possible thanks to your travel document donations!

Public transport data

- Added (partial) coverage in Japan and Thailand thanks to Transitous.

- Fixed access parameters to the VVS API for the Stuttgart area in Germany.

All of this also directly benefits KTrip.

Itinerary app

- Mark canceled intermediate stops on the journey section map.

- Don’t allow opening an empty place context menu.

- Show yearly sections for past trip groups in the trip group list.

- Fix showing entrance date picker for events without valid start date.

- Fix a timer overflow when monitoring for delays for trips in the slightly more distant future.

- Fixed several issues following from inconsistent ordering of timeline entries, such as two elements starting at exactly the same time and having no defined end time. This could result in duplicate or lost timeline entries under some circumstances.

- Correctly reset the trip group name field after closing the rename dialog.

- Fix overly aggressive input validation on manually entered flight numbers.

- Continuous Flatpak builds for testing the latest development version are now also available for ARM64.
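As an aside on the timer overflow fix mentioned in the list above: timers that take their interval as a signed 32-bit millisecond value can only represent about 24.8 days, so a wake-up further in the future overflows unless it is clamped and re-armed. The following is a minimal sketch of that general technique, not the actual fix in Itinerary; `MAX_TIMER_MS` and `next_timer_interval_ms` are hypothetical names.

```python
from datetime import datetime, timedelta

# Largest interval a signed 32-bit millisecond counter can hold (about 24.8 days).
MAX_TIMER_MS = 2**31 - 1


def next_timer_interval_ms(now: datetime, wake_at: datetime) -> int:
    """Return a timer interval in milliseconds that never exceeds MAX_TIMER_MS.

    If the target is further away than the timer can represent, the caller is
    expected to call this again when the timer fires and re-arm it.
    """
    # Exact integer millisecond difference; negative if wake_at is in the past.
    remaining_ms = (wake_at - now) // timedelta(milliseconds=1)
    return max(0, min(remaining_ms, MAX_TIMER_MS))
```

With this approach a trip two months away simply results in a few maximum-length timer runs before the real wake-up time is reached.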

How you can help

Feedback and travel document samples are very much welcome, as are all other forms of contributions. Feel free to join us in the KDE Itinerary Matrix channel.

@vkrause:kde.org


[ade]
