Thursday, 22 February 2024

A new version of Kirigami Addons is out! Kirigami Addons is a collection of helpful components for your QML and Kirigami applications. With the 1.0 release, we now support Qt6 and KF6, have added a bunch of new components, and have fixed various accessibility issues.

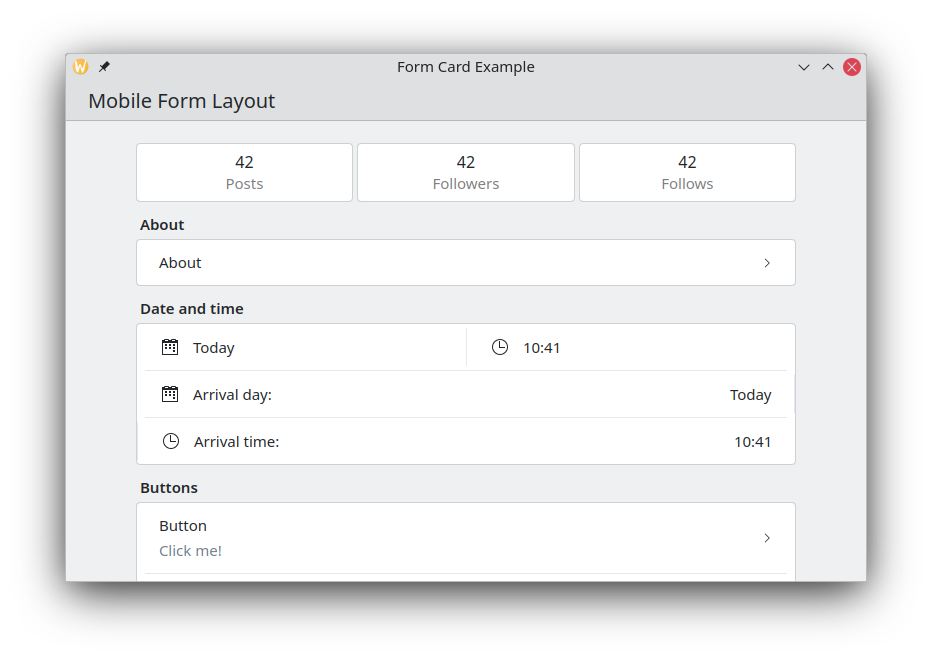

FormCard

We added a bunch of new FormCard delegates:

FormPasswordFieldDelegate: A password field.

FormDateTimeDelegate: A date and/or time delegate with an integrated date and time picker, which uses the native picker of the platform if available (currently only on Android).

The existing delegates also received various accessibility fixes for use with a screen reader.

Finally, we dropped the compatibility alias MobileForm.
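As a rough illustration, here is a minimal sketch of a page using the two new delegates. It assumes the Qt6 import name `org.kde.kirigamiaddons.formcard` and that the date/time delegate is exposed as `FormDateTimeDelegate`. The page title, header text, and label are made up for the example, so check the API documentation for the exact properties before relying on it.

```qml
import QtQuick
// Assumed Qt6 module name; code that still imports the old MobileForm
// alias needs to switch to this import.
import org.kde.kirigamiaddons.formcard as FormCard

FormCard.FormCardPage {
    // Hypothetical title, for illustration only
    title: "Account"

    FormCard.FormHeader {
        title: "Sign in"
    }

    FormCard.FormCard {
        // New in this release: a password field
        FormCard.FormPasswordFieldDelegate {
            label: "Password"
        }

        FormCard.FormDelegateSeparator {}

        // New in this release: date and/or time delegate with an
        // integrated picker (native picker on Android), left at its defaults
        FormCard.FormDateTimeDelegate {}
    }
}
```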

BottomDrawer

Mathis added a new Drawer component that can be used as a context menu or to display some information on mobile.

FloatingButton and DoubleFloatingButton

These two components received significant sizing and consistency improvements which should improve their touch area on mobile.

Packager section

You can find the package on download.kde.org and it has been signed with my GPG key.

CarlSchwan

@jriddell:kde.org