I have a pile of hard drives: 3.5” spinning rust. There’s like a dozen of them, some labeled cryptically (EBN D2), some infuriatingly (1) and some not at all. Probably most of them work. But how do I effectively figure out what is on them? FreeBSD to the rescue.

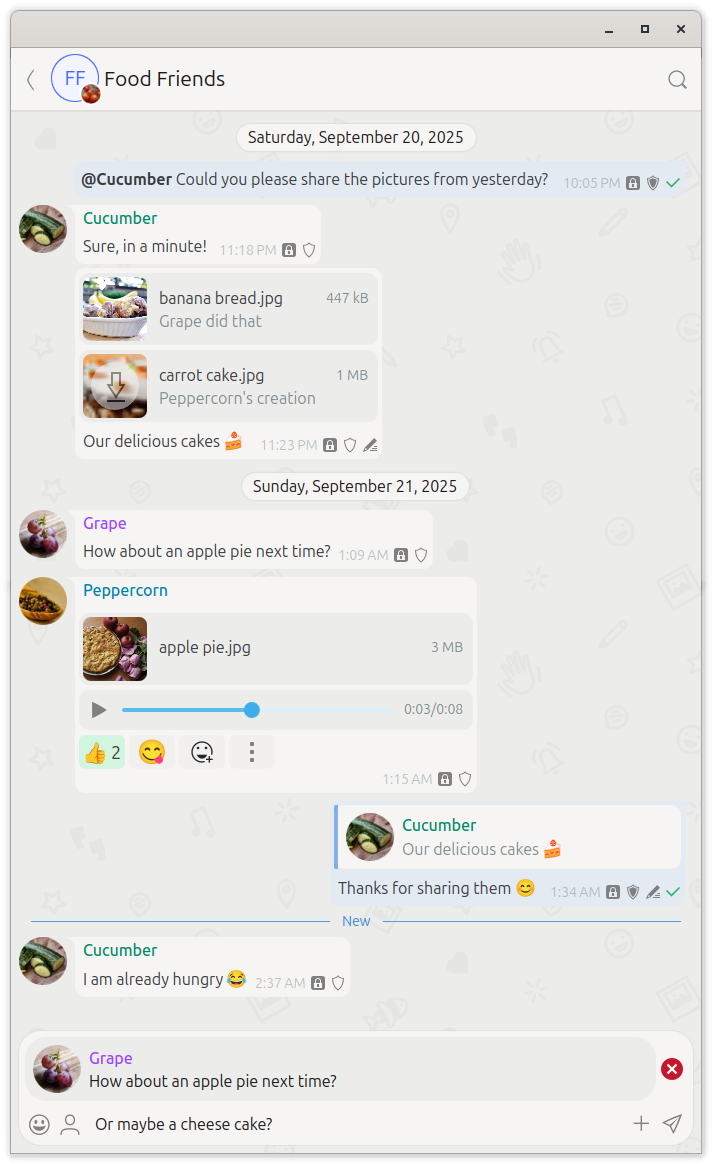

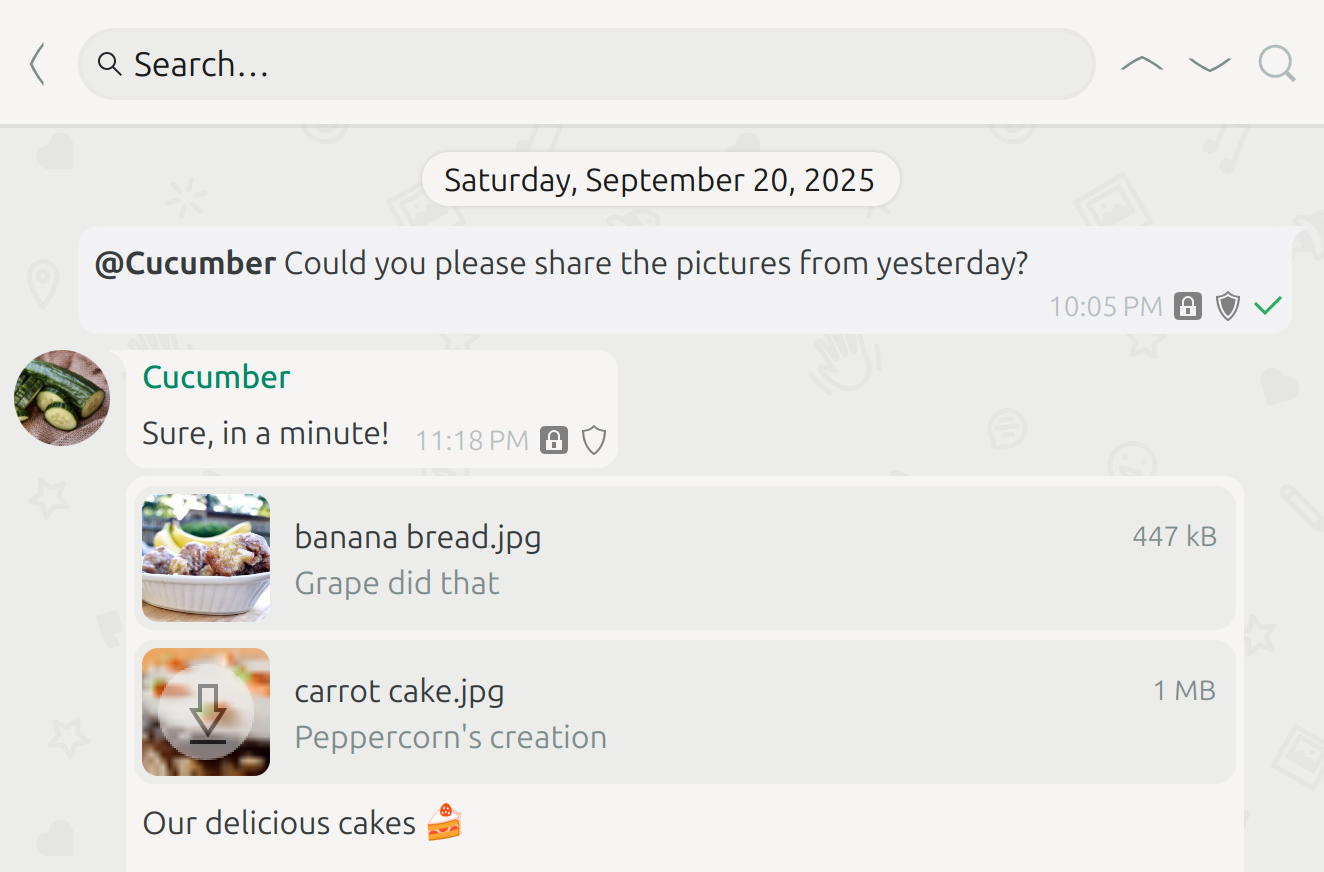

Hotplug Just Works

All the drives are SATA. I do have an IDE drive; I use it for opening beer bottles. And an ST-225 for old times’ sake. But SATA it is, and there are spare SATA data and power cables dangling out the side of my PC. This particular machine runs FreeBSD 14.3, and connecting a drive (data first, then power) yields some messages in the system log. Old-school as it is, the command to read these is still dmesg, which prints:

ada3 at ahcich0 bus 0 scbus0 target 0 lun 0

ada3: <WDC WD3200AAKS-00SBA0 12.01B01> ATA-7 SATA 2.x device

ada3: Serial Number WD-WMAPZ0561055

ada3: 300.000MB/s transfers (SATA 2.x, UDMA6, PIO 8192bytes)

ada3: Command Queueing enabled

ada3: 305245MB (625142448 512 byte sectors)

That matches the information printed on the disk’s label (this drive is from 2007 and has a 1 written on it in black marker; it may have been a drive in the English Breakfast Network server back then). It also tells me that the disk is registered with the system as ada3.
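Comparing the kernel’s view of the drive with its physical label comes down to two dmesg lines: the model string and the serial number. A minimal sketch in sh that pulls those out for one device; the function name disk_identity is hypothetical, and it assumes the attach messages are formatted like the ones above:

```shell
# disk_identity DEV: read dmesg-style text on stdin and print the model
# string and serial number logged for device DEV.
# Hypothetical helper; assumes lines shaped like "DEV: <model> ..." and
# "DEV: Serial Number ...". A detach message also contains "<model>", so a
# drive that was plugged in and out can show up more than once.
disk_identity() {
    dev=$1
    sed -n \
        -e "s/^${dev}: <\(.*\)> .*/model: \1/p" \
        -e "s/^${dev}: Serial Number /serial: /p"
}

# Usage: dmesg | disk_identity ada3
```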

FreeBSD’s disk subsystem is a stack of “GEOM classes”. The geom(4) manpage tells me that GEOM is a modular disk I/O request transformation framework, but the important bit is geom(8), the command to query the disk subsystem.

Running geom disk list ada3 tells me what is known about the disk ada3. This actually doesn’t tell me much I don’t already know:

Geom name: ada3

Providers:

1. Name: ada3

Mediasize: 320072933376 (298G)

Sectorsize: 512

Mode: r0w0e0

descr: WDC WD3200AAKS-00SBA0

lunid: 50014ee0aabd8fe3

ident: WD-WMAPZ0561055

rotationrate: unknown

fwsectors: 63

fwheads: 16

Well, actually this tells me that the sizes in the dmesg and geom output are in mebibytes and gibibytes (powers of two, even though dmesg writes MB), and that they’re consistent.
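The consistency is easy to check by hand: dmesg’s 305245MB is the sector count times 512 bytes divided by 2^20, and geom’s 298G is the same byte count divided by 2^30. A quick check with sh integer arithmetic (results are truncated, not rounded), assuming a shell with 64-bit arithmetic, which FreeBSD’s sh has:

```shell
# Sector count and sector size as reported by dmesg for ada3.
sectors=625142448
sector_size=512

bytes=$((sectors * sector_size))   # same value as geom's Mediasize
mib=$((bytes / 1048576))           # divide by 2^20: dmesg's "MB"
gib=$((bytes / 1073741824))        # divide by 2^30: geom's "G"

echo "$bytes bytes = $mib MiB = $gib GiB"
# prints: 320072933376 bytes = 305245 MiB = 298 GiB
```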

How about partitions on this disk, though? geom part list ada3 tells me (here I’ve removed several partitions, along with many other lines that are not very useful right now; the output is extensive):

Geom name: ada3

scheme: GPT

Providers:

1. Name: ada3p1

Mediasize: 819200 (800K)

efimedia: HD(1,GPT,a43a2da3-bb5f-11e5-8d81-f5e4d894ddb1,0x22,0x640)

label: (null)

type: efi

index: 1

2. Name: ada3p2

Mediasize: 315679277056 (294G)

efimedia: HD(2,GPT,a43c9069-bb5f-11e5-8d81-f5e4d894ddb1,0x662,0x24bff9c0)

label: (null)

type: freebsd-ufs

index: 2

So it is a GPT-partitioned disk, and at least one of the partitions is an “old-fashioned” UFS partition: that’s FreeBSD from before ZFS became the de facto standard filesystem (maybe just for me). This disk is simple to deal with (further notes below)!
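Because the full geom part list output is long, a small awk filter can boil it down to one line per partition. This is a sketch that relies on the field names shown above (Name:, label:, type:, index:); the function name part_summary is hypothetical, and it prints "-" when a scheme such as MBR has no label field:

```shell
# part_summary: read "geom part list" output on stdin and print one line
# per partition: provider name, partition type, label ("-" if none).
# Hypothetical helper; depends on the field layout shown in the text.
part_summary() {
    awk '
        # "1. Name: ada3p1" starts a new provider (or consumer) entry
        $2 == "Name:"  { name = $3; label = "-"; type = "" }
        $1 == "label:" { label = $2 }
        $1 == "type:"  { type = $2 }
        # Only partition providers carry an index, so consumers are skipped
        $1 == "index:" && type != "" { print name, type, label }
    '
}

# Usage: geom part list ada3 | part_summary
```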

After I’m done with the disk, I first power it down with camcontrol standby ada3. Once I hear the disk stop spinning, I pull out the connectors (power first, then data) and move on to the next disk. After disconnecting, dmesg confirms that I unplugged the correct drive:

ada3 at ahcich0 bus 0 scbus0 target 0 lun 0

ada3: <WDC WD3200AAKS-00SBA0 12.01B01> s/n WD-WMAPZ0561055 detached

(ada3:ahcich0:0:0:0): Periph destroyed

This way I can step through all of the drives in my pile and jot down which disk does what (or, in rare cases, decide they can be zeroed out and re-used for something else).
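The power-down step can be wrapped in a small helper that refuses to spin down a disk while one of its partitions is still mounted. A sketch under stated assumptions: release_disk and run are hypothetical names, the mount check only recognizes mounts by /dev device name, and setting DRY_RUN prints the camcontrol command instead of running it:

```shell
# run: execute a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# release_disk DEV: refuse if a partition of DEV is still mounted,
# otherwise put the drive in standby so it can be unplugged.
# Hypothetical helper. The check only sees mounts listed by device name
# (e.g. /dev/ada3p2); datasets from an imported ZFS pool are listed by
# dataset name, so export pools before calling this.
release_disk() {
    dev=$1
    if mount | grep -q "^/dev/${dev}[a-z0-9]* "; then
        echo "$dev still has mounted filesystems; unmount first" >&2
        return 1
    fi
    run camcontrol standby "$dev" || return 1
    echo "when it has stopped spinning: unplug power, then data"
}

# Usage: release_disk ada3
```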

Dealing with non-GPT disks

The scheme reported by geom part list is GPT if you’re sensible, but of course it is possible to bump into MBR and BSD disklabels as well. The geom subsystem abstracts all that away, and the only real difference is the names of devices.

Scheme BSD, also known as BSD disklabel, gives you lettered partitions such as ada3a and ada3d rather than numbered ones. It is possible to apply this to a whole disk. It is also possible to have a BSD disklabel inside an MBR partition, but that’s a late-90s kind of setup.

Scheme MBR gives you numbered partitions, but FreeBSD calls these slices instead of partitions, and so you end up with ada3s1 instead of ada3p1.
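The naming rules for the three schemes fit in one small function. This is a hypothetical helper, not a FreeBSD command, and it assumes the traditional eight BSD disklabel partitions a to h:

```shell
# part_dev DISK SCHEME INDEX: print the device name FreeBSD uses for
# partition INDEX of DISK under the given partitioning scheme.
# Hypothetical helper; BSD support assumes indexes 1..8 (a..h).
part_dev() {
    disk=$1 scheme=$2 idx=$3
    case $scheme in
        GPT) echo "${disk}p${idx}" ;;   # numbered partitions
        MBR) echo "${disk}s${idx}" ;;   # numbered "slices"
        BSD)                            # lettered: 1 -> a, 4 -> d
            case $idx in
                [1-8])
                    set -- a b c d e f g h
                    shift $((idx - 1))
                    echo "${disk}$1" ;;
                *)
                    echo "BSD index out of range: $idx" >&2
                    return 1 ;;
            esac ;;
        *)
            echo "unknown scheme: $scheme" >&2
            return 1 ;;
    esac
}
```

Nesting composes: part_dev ada3s1 BSD 1 yields ada3s1a, the late-90s layout of a disklabel inside an MBR slice.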

Dealing with UFS

UFS partitions are pretty simple: mount them somewhere and things will be OK. I go for read-only, and I have a /mnt/tmp for all my arbitrary-disk-mounting activity. So mount -o ro /dev/ada3p2 /mnt/tmp it is (ada3p2 is the partition name found earlier).

This particular disk turned out to be my main workstation drive from around 2017, with a handful of still-interesting files on it. Ones I would not have missed if I hadn’t looked, but it was nice to find a presentation PDF I gave to SIDN once upon a time.

After looking, umount /mnt/tmp unmounts the filesystem and releases the disk. After that, power down and disconnect as described above.
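The UFS routine (mount read-only on /mnt/tmp, look around, unmount) as a sketch. inspect_ufs and run are hypothetical names; a plain ls stands in for the actual looking around, and DRY_RUN prints the commands instead of running them:

```shell
# run: execute a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# inspect_ufs PART [MNT]: mount a UFS partition read-only, list its top
# level, then unmount it. Defaults to /mnt/tmp as in the text.
inspect_ufs() {
    part=$1 mnt=${2:-/mnt/tmp}
    run mount -o ro "/dev/$part" "$mnt" || return 1
    run ls -la "$mnt"
    run umount "$mnt"
}

# Usage: inspect_ufs ada3p2
```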

Dealing with ZFS

Slightly newer disks might be ZFS. Here’s the partition information for one:

Providers:

1. Name: ada3p1

Mediasize: 250059309056 (233G)

label: backup0

type: freebsd-zfs

index: 1

It’s a GPT partition with a label, and ZFS on it. That means it’s part of a ZFS pool and is probably referred to within the pool by its label, or possibly by its ID. In any case, a ZFS pool needs to be imported, not mounted, because the filesystems are contained in the storage pool. The command to discover what is available is zpool import with no arguments, which doesn’t actually import anything; it only lists the pools it finds:

pool: zbackup

config:

zbackup ONLINE

gpt/backup0 ONLINE

That’s encouraging: the pool consists of a single drive and everything is online.

To make the filesystems in this pool available, I need to import it. I’ll force it (-f, because it was probably untimely ripp’d from whatever machine it was in previously), without mounting any of the filesystems in it (-N), read-only (-o readonly=on), and under the temporary name zwhat (-t, which keeps the name zbackup on disk), like so: zpool import -f -N -o readonly=on -t zbackup zwhat

After importing the pool, zfs list tells me what filesystems are available:

zwhat 36.2G 189G 25K /zwhat

zwhat/home 36.2G 189G 36.0G /tmp/foo/

I can mount a single ZFS filesystem just like a UFS filesystem. The ZFS filesystem knows where it would want to be mounted (/tmp/foo; I have no idea what I was doing back then), but I can treat it like a legacy filesystem:

mount -t zfs zwhat/home /mnt/tmp

After looking, umount /mnt/tmp unmounts the filesystem, and zpool export zwhat releases the pool and, with it, the disk. After that, power down and disconnect as described above.
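The whole ZFS round trip, as a sketch: import read-only under a temporary name without mounting, list the datasets, mount one on /mnt/tmp, then unmount and export the pool so the disk is no longer in use before it is powered down. inspect_zfs, its argument order and run are hypothetical; DRY_RUN prints the commands instead of running them:

```shell
# run: execute a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# inspect_zfs POOL TMPNAME DATASET [MNT]: import POOL read-only as
# TMPNAME without mounting anything, mount TMPNAME/DATASET on MNT,
# list it, then unmount and export the pool again.
inspect_zfs() {
    pool=$1 tmp=$2 ds=$3 mnt=${4:-/mnt/tmp}
    run zpool import -f -N -o readonly=on -t "$pool" "$tmp" || return 1
    run zfs list -r "$tmp"
    run mount -t zfs "$tmp/$ds" "$mnt"
    run ls -la "$mnt"
    run umount "$mnt"
    # Export so the pool (and the disk) is released before power-down
    run zpool export "$tmp"
}

# Usage: inspect_zfs zbackup zwhat home
```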

Dealing with Linux ext4

If the disk comes from a Linux machine, then it may have an ext4 filesystem on it. I still usually pick that when installing Linuxes. Here’s the partition information for one:

scheme: MBR

Providers:

2. Name: ada3s2

Mediasize: 64428703744 (60G)

rawtype: 131

type: linux-data

There is ext4 support in the FreeBSD kernel, although the filesystem type is named ext2fs (and some ext4 filesystems use features it doesn’t support, in which case they won’t mount). But for simple cases, mount -o ro -t ext2fs /dev/ada3s2 /mnt/tmp does the job.

Dealing with linux-raid

I found two disks, both WD Caviar Blue, labeled EBN D1 and EBN D2, with similar layouts. Those are linux-raid disks, and this is something I can’t deal with in FreeBSD. Heck, I’m not confident I can deal with them under Linux anymore, either.

Providers:

1. Name: ada3s1

Mediasize: 493928031744 (460G)

efimedia: HD(1,MBR,0xa8a8a8a8,0x3f,0x398033d3)

rawtype: 253

type: linux-raid
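The per-type handling from the sections above amounts to a lookup from the geom partition type to a next step. A hypothetical helper that reads provider name and type pairs (the Name: and type: fields of geom part list) and only prints a suggestion, never runs anything; it assumes linux-data partitions hold ext2/3/4:

```shell
# suggest_action: read "PARTITION TYPE" pairs on stdin and print what
# to do with each one. Hypothetical helper; nothing is executed.
suggest_action() {
    while read -r part type _rest; do
        case $type in
            freebsd-ufs)
                echo "$part: mount -o ro /dev/$part /mnt/tmp" ;;
            linux-data)   # assumed to be ext2/3/4
                echo "$part: mount -o ro -t ext2fs /dev/$part /mnt/tmp" ;;
            freebsd-zfs)
                echo "$part: zpool import, then import read-only under a temporary name" ;;
            linux-raid)
                echo "$part: skip (linux-raid, not handled here)" ;;
            *)
                echo "$part: skip ($type)" ;;
        esac
    done
}

# Usage: printf 'ada3p2 freebsd-ufs\n' | suggest_action
```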

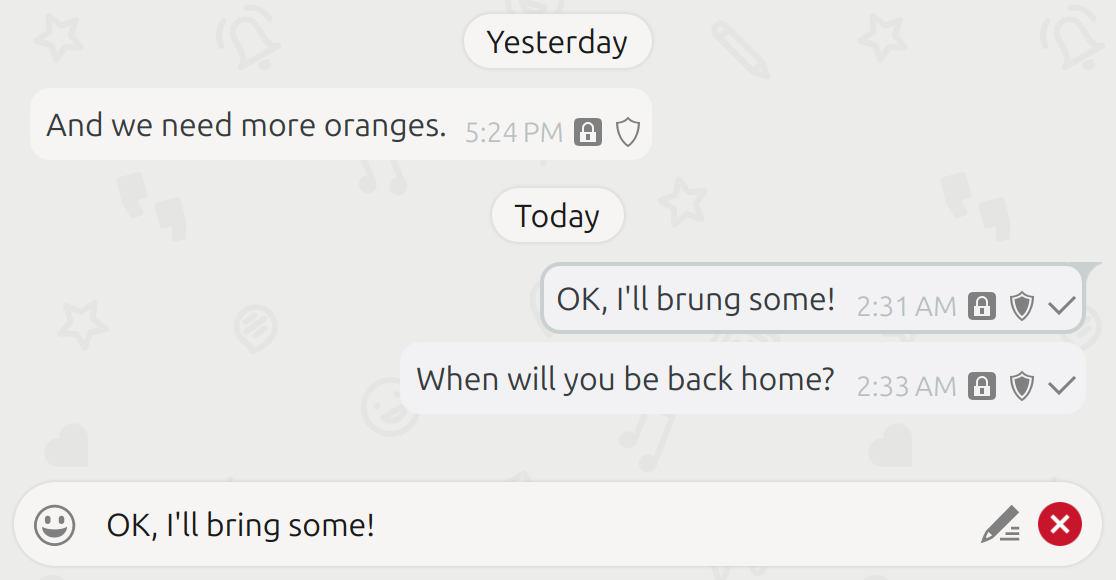

Re-purposing Disks

KDE has a lovely partitioning tool, but I wouldn’t be me if I didn’t go for the command-line approach.

Make sure the disk isn’t mounted anywhere, but is powered up.

Zero out the first gigabyte or so of the disk: dd if=/dev/zero of=/dev/ada3 bs=1M count=1024

I guess this isn’t strictly necessary, and geom warns during this operation that the GPT is corrupt and the backup GPT (at the other end of the disk) should probably be used. Ignore that.

Destroy the partition table some more with geom part destroy -F ada3; now it is really dead.

Make a new GPT partition table on the disk: geom part create -s gpt ada3

Start adding partitions to the partition table. I (now) use labels with the last digits of the drive’s serial number. This drive gets a gigabyte of swap (just in case), and the rest is a ZFS partition which I can add to a pool later.

geom part add -t freebsd-swap -s 1G -l swap-159666 ada3

geom part add -t freebsd-zfs -l zfs-159666 ada3
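The re-purposing steps can be collected into one function, which is mostly useful because of the dry-run switch: these commands destroy data, so it helps to see exactly what would run first. repurpose, serial_tail and run are hypothetical names; the TAG argument is meant to be the tail of the serial number, which geom disk list shows on its ident: line:

```shell
# run: execute a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# repurpose DISK TAG: zero the first GiB of DISK, replace its partition
# table with a fresh GPT, then add 1G of swap and a ZFS partition
# labeled with TAG. DESTRUCTIVE: try it with DRY_RUN=1 first.
repurpose() {
    disk=$1 tag=$2
    if [ -z "$disk" ] || [ -z "$tag" ]; then
        echo "usage: repurpose DISK TAG" >&2
        return 1
    fi
    run dd if=/dev/zero of="/dev/$disk" bs=1M count=1024 || return 1
    run geom part destroy -F "$disk" || return 1
    run geom part create -s gpt "$disk" || return 1
    run geom part add -t freebsd-swap -s 1G -l "swap-$tag" "$disk" || return 1
    run geom part add -t freebsd-zfs -l "zfs-$tag" "$disk"
}

# serial_tail SERIAL [N]: last N (default 6) characters of a serial.
serial_tail() {
    s=$1 n=${2:-6}
    while [ "${#s}" -gt "$n" ]; do
        s=${s#?}   # drop one leading character
    done
    echo "$s"
}

# Usage: DRY_RUN=1 repurpose ada3 "$(serial_tail WD-WMAPZ0561055)"
```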

Why the labels-with-serial-numbers? So that I can subsequently create a ZFS pool from labeled partitions, it remains obvious where the parts of the pool come from, and I avoid the name collisions that come from naming everything backup0 and so on.
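On FreeBSD, GPT labels show up as device nodes under /dev/gpt/, so a pool can be built directly from them and zpool status will then list the serial-number labels. A sketch in which make_pool, run and the pool name zscratch are hypothetical; with several labels it creates a plain stripe, and zpool create -n shows the resulting layout without creating anything:

```shell
# run: execute a command, or only print it when DRY_RUN is set.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# make_pool POOL LABEL...: create a ZFS pool from GPT-labeled
# partitions, referring to each one as /dev/gpt/LABEL.
make_pool() {
    pool=$1
    shift
    if [ "$#" -eq 0 ]; then
        echo "usage: make_pool POOL LABEL..." >&2
        return 1
    fi
    # Rewrite each LABEL argument in place into /dev/gpt/LABEL
    for label do
        set -- "$@" "/dev/gpt/$label"
        shift
    done
    run zpool create "$pool" "$@"
}

# Usage: DRY_RUN=1 make_pool zscratch zfs-159666
```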

[ade]