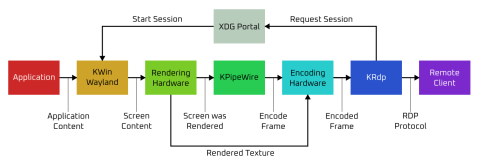

With this blog post I would like to introduce KRdp, a new library that implements the glue required to create a server exposing a KDE Plasma Wayland session over the RDP protocol. It also contains a command-line server that allows remote clients to control the current Plasma Wayland session.

Remote Desktop Support for Wayland

With the increase in people working from home in recent years, along with other remote use cases, it has become increasingly important to be able to control a running computer remotely. While there are several existing solutions on X11, the choices for Wayland are rather more limited. Currently the only way of allowing remote control of a Plasma Wayland session is through Krfb, which uses VNC[1] for streaming to the client.

Unfortunately, VNC is not ideal, for various reasons. So to provide a better experience we started looking at other options, eventually settling on building something around the RDP protocol. Fortunately, because of the work done for Krfb and various other projects, we already have all the parts implemented to allow remote desktop control; the "only" thing left was to glue everything together.

Why RDP?

This raises the obvious question: why use RDP? There were several things we considered when starting out. From the start, we knew we wanted to build upon something pre-existing, as maintaining a protocol takes a lot of time and effort that are better spent elsewhere within KDE, especially because a custom protocol would also mean maintaining the client side. Both VNC and RDP have many existing clients that can be used, which means we can focus on the server side instead.

Another major consideration is performance. VNC sends uncompressed images of the full screen over the wire, which means it requires a lot of bandwidth and performance suffers accordingly. While I have seen some efforts to change this, they are not standardised and it is unclear what clients would support those. RDP, on the other hand, has a documented extension called the "Graphics Pipeline" that allows using H.264 to compress video, greatly reducing the needed bandwidth.
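To give a rough feeling for the numbers involved, here is a small back-of-the-envelope sketch. The resolution, colour depth, frame rate and H.264 bitrate below are all assumptions picked for illustration, not measurements from KRdp or any VNC server, and real-world figures will vary with content and encoder settings.

```python
def raw_bitrate_bps(width, height, bytes_per_pixel, fps):
    """Bits per second needed to send every frame uncompressed."""
    return width * height * bytes_per_pixel * 8 * fps


# Assumed figures, for illustration only (not taken from any measurement).
WIDTH, HEIGHT = 1920, 1080      # a Full HD screen
BYTES_PER_PIXEL = 4             # 32-bit colour
FPS = 30                        # frames per second
ASSUMED_H264_BPS = 10_000_000   # hypothetical 10 Mbit/s H.264 stream

raw = raw_bitrate_bps(WIDTH, HEIGHT, BYTES_PER_PIXEL, FPS)

print(f"uncompressed full-screen frames: {raw / 1e6:.0f} Mbit/s")
print(f"assumed H.264 stream:            {ASSUMED_H264_BPS / 1e6:.0f} Mbit/s")
print(f"ratio:                           {raw / ASSUMED_H264_BPS:.0f}x")
```

Under these assumptions the uncompressed stream needs roughly two gigabits per second, which is why some form of video compression matters so much for a responsive remote session.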

The main drawback of RDP is that it is owned by Microsoft and developed for the needs of Windows. While that is a potential problem, the protocol is openly documented and, equally important for us, there is an extensive open-source implementation of both the server and client side of the protocol in the form of FreeRDP. This means we do not need to bother with the details of the protocol and can instead focus on the higher level of gluing everything together.

I have focused on client support and performance here, since those were some of our main considerations, but RDP has many more documented extensions that, long term, would allow us to greatly enhance the remote desktop experience by adding features such as audio streaming, clipboard integration and file sharing.

Other Considerations

While the protocol was one major thing that needed to be considered for a good remote desktop experience, it was not the only part. As mentioned, we technically have all the pieces needed to enable remote desktop, but some of those pieces needed some additional work to really shine. One example of this is the video encoding implemented in KPipeWire.

During development of KRdp it became clear that using pure software encoding was a bottleneck for a responsive remote desktop experience. We tried several things to improve its performance, but ultimately concluded that we would need to use hardware encoding for the best experience. This resulted in KPipeWire now being able to use VA-API for hardware-accelerated video encoding, which benefits not only KRdp but also Spectacle, once it is released with KDE Plasma 6.

Another part is the KDE implementation of the FreeDesktop Remote Desktop portal. While it would be possible to directly communicate with KWin to request remote input and a video stream, we preferred to use the portal so that it would be possible to run KRdp from within a sandboxed environment. However, the current implementation is fairly limited, only allowing you to choose to accept or reject a remote desktop request. We are working on adding some of the same features to the remote desktop portal as are already available for the screencasting portal. This includes screen selection and remembering the session settings.

KRdp

All of that leads us back to KRdp, which is a library that implements the glue to tie all these parts together and allow remote desktop using the RDP protocol. It uses the FreeDesktop Remote Desktop portal interface to request a video stream and remote input from KWin, KPipeWire to encode the video stream to H.264, and FreeRDP to send that stream to a remote client and receive input from that client. The long-term goal is to integrate this as a system service into KDE Plasma, with a fairly simple System Settings page to enable it and set some options.

Trying it Out

For those who want to try it out, we are releasing an alpha as a Flatpak bundle that can be downloaded from here. This Flatpak, when run using `flatpak run org.kde.krdp -u {username} -p {password}`, will start a server and listen for incoming connections from remote hosts. See the readme for more details and known issues. The Flatpak bundle was built from this code.

If you encounter any other issues, please file them at bugs.kde.org.

Discuss this blog post at discuss.kde.org.

[1] While strictly speaking the protocol used by VNC is called Remote Framebuffer or RFB, VNC is the general term that is used everywhere, so that is what I am using here as well.

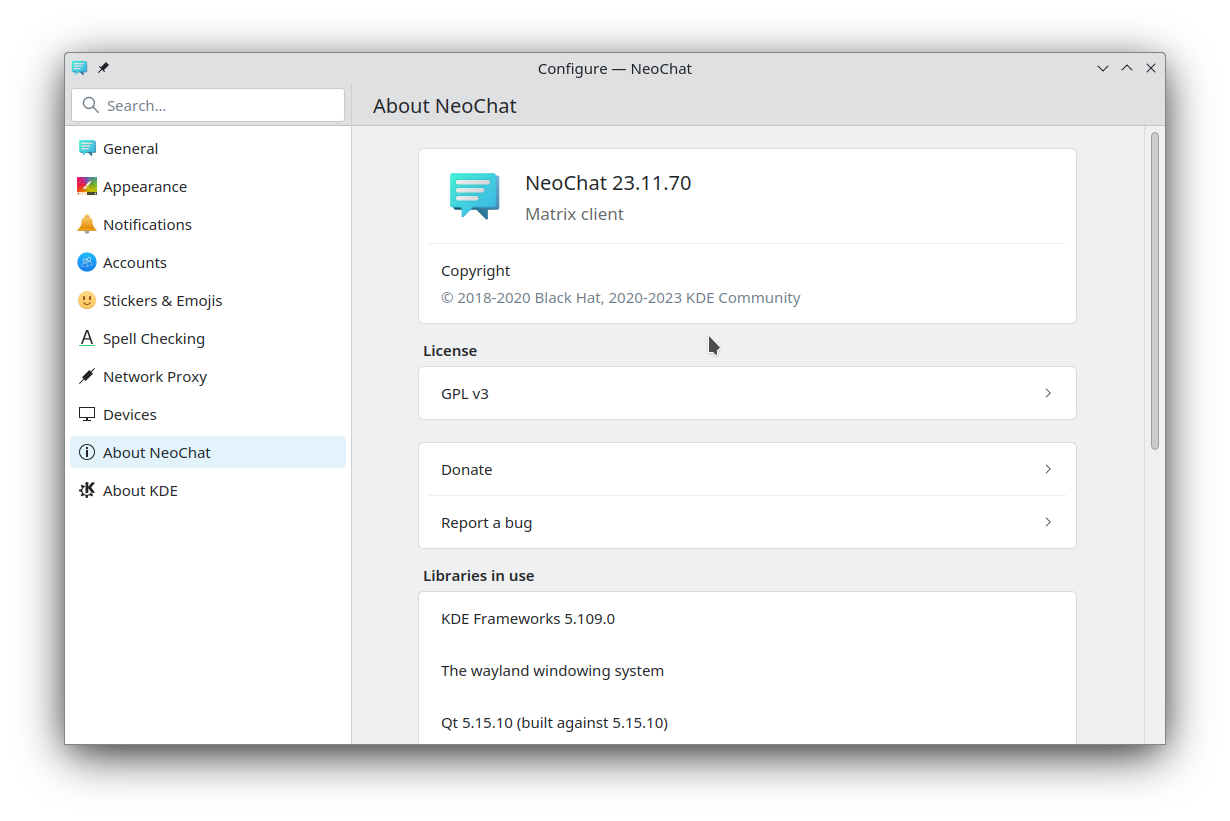

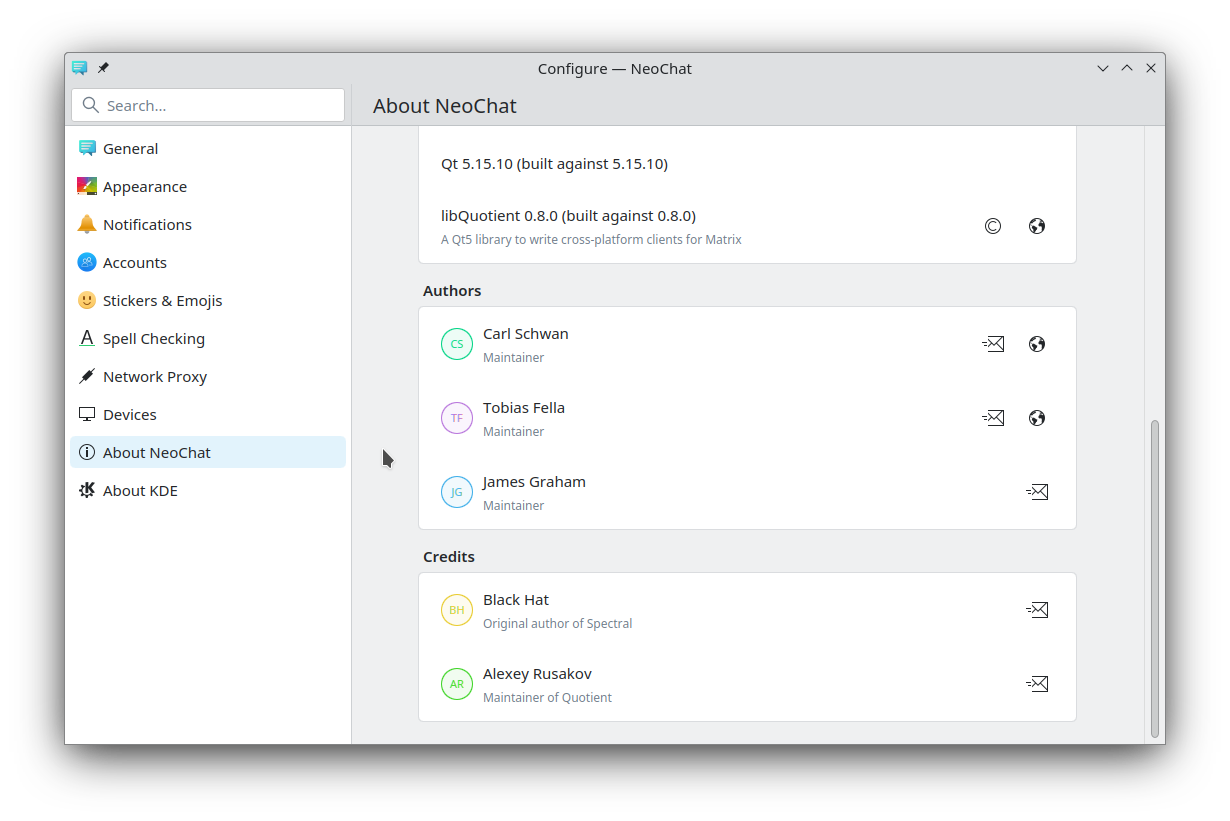

CarlSchwan

@sitter:kde.org

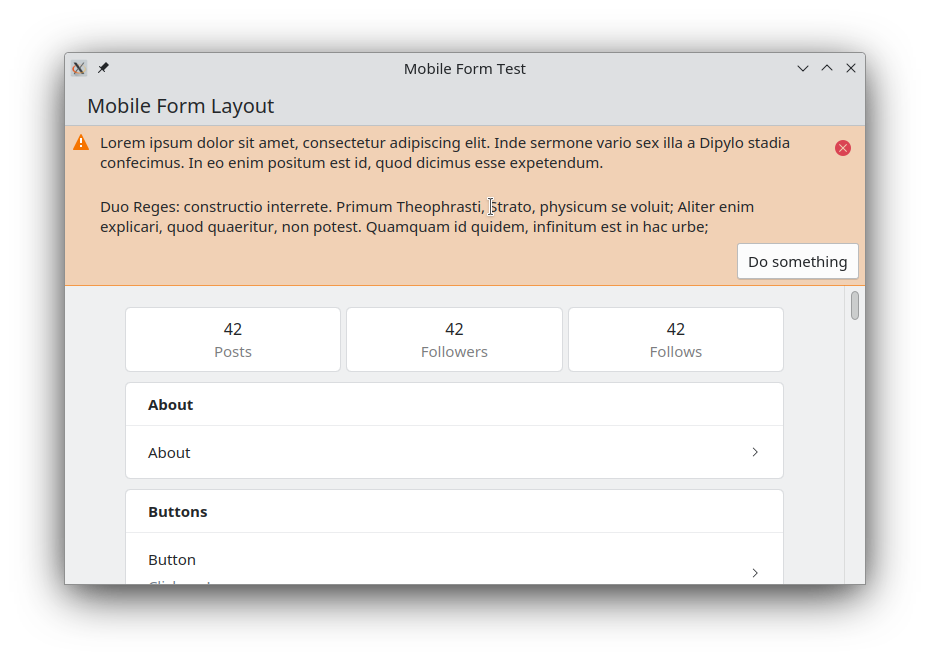

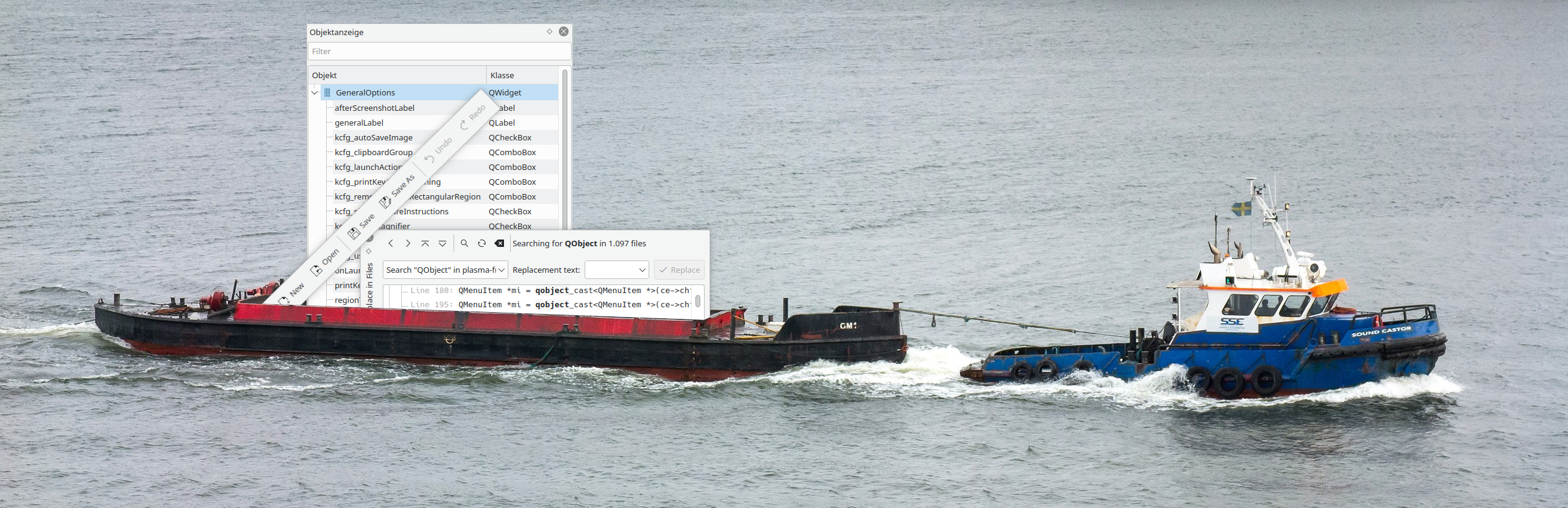

Most of our desktop applications have a toolbar, and sometimes they even have multiple toolbars next to or stacked on top of each other. More complex desktop applications such as Krita, Kdenlive or LabPlot often consist of multiple sub-windows, docks, tabbed views, etc. Docks and toolbars can be undocked, moved around and arranged freely, and when dragged over a part of a window they snap back into the window. This allows users to customize their work environment to their liking and needs.

This worked fine on X because it lets you do anything; this post explores the situation on Wayland.
